AMD Announces hUMA, Heterogeneous Uniform Memory Access For Future APUs

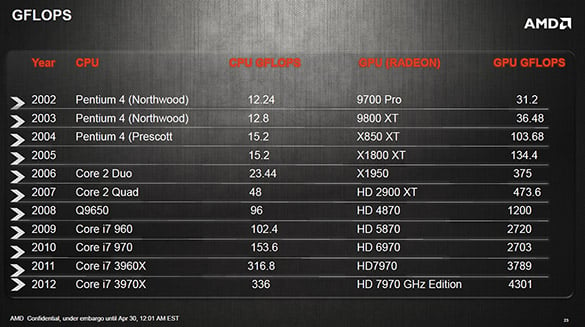

The dawn of GPU computing came about in large part due to the immense gap in compute performance between traditional CPUs and programmable GPUs. Whereas CPUs excel with serial workloads, modern GPUs perform best with highly parallel operations. If you look at the slide below, it shows an array of Intel processors and AMD (and ATI) GPUs dating back to 2002, along with each part’s compute performance, measured in GFLOPS (or Gigaflops—billions of floating point operations per second). It gives a number of examples that show the clear disparities in compute performance we’re talking about.

As you can see, even today’s fastest desktop processor, the 6-core / 12-thread Intel Core i7-3970X, offers much lower floating point compute performance than the roughly seven-year-old Radeon X1950. And in comparison to a contemporary GPU like the Radeon HD 7970 GHz Edition, which is outfitted with 2,048 individual stream processors, there is simply no contest. With a highly parallel, floating point intensive workload, a GPU like the Radeon HD 7970 GHz Edition can offer almost 13x the performance of the Core i7-3970X.
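For readers who want to see where a figure like "almost 13x" can come from, here is a rough back-of-the-envelope sketch. The clock speeds and per-clock throughput values are our assumptions based on public spec sheets, not numbers read off the slide, and peak theoretical GFLOPS is rarely what real software achieves.

```python
# Peak single-precision throughput = units x FLOPs per unit per clock x clock (GHz).
# Every figure below is an assumption from public specs, not taken from AMD's slide.

def peak_gflops(units, flops_per_clock, clock_ghz):
    """Theoretical peak in GFLOPS for a simple units-times-clock model."""
    return units * flops_per_clock * clock_ghz

# Radeon HD 7970 GHz Edition: 2,048 stream processors, one multiply-add
# (counted as 2 FLOPs) per clock each, assumed 1.05 GHz boost clock.
gpu = peak_gflops(2048, 2, 1.05)

# Core i7-3970X: 6 cores, assumed 16 single-precision FLOPs per clock per core
# (8-wide AVX add plus 8-wide AVX multiply), assumed 3.5 GHz base clock.
cpu = peak_gflops(6, 16, 3.5)

print(f"GPU {gpu:.0f} GFLOPS, CPU {cpu:.0f} GFLOPS, ratio {gpu / cpu:.1f}x")
```

Under these assumptions the ratio works out to a little under 13x, which lines up with the gap described above.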

Although GPUs can theoretically offer software developers significant performance benefits, and sometimes power efficiency benefits as well, leveraging those capabilities is often difficult because today’s CPUs and GPUs require their own memory spaces and programming models. We’re going to simplify this explanation a bit, but it means that if a developer wants to use both CPU and GPU compute resources in a particular piece of software, the CPU must first operate within its own memory space and then copy the requisite data to the GPU’s memory space across a relatively slow IO bus. The GPU then completes any necessary computation, and finally the CPU must copy the results back to its own memory space. Needless to say, a non-unified memory architecture like this introduces a lot of inefficiency and latency, which in turn leaves plenty of performance on the table. AMD wants to change that.
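The copy-compute-copy round trip described above can be sketched as a toy model. This is not real GPU code or any vendor's API: plain Python lists stand in for memory, and the `Heap`, `copy_buffer`, `gpu_square`, and `run_discrete` names are hypothetical, chosen only to make the two mandatory transfers visible.

```python
class Heap:
    """Toy stand-in for one processor's private memory pool (hypothetical)."""

    def __init__(self, name):
        self.name = name
        self.buffers = {}


def copy_buffer(src, dst, key, log):
    """Duplicate a buffer into another heap, as a bus transfer would."""
    dst.buffers[key] = list(src.buffers[key])
    log.append(f"copy '{key}': {src.name} -> {dst.name}")


def gpu_square(gpu_heap, key_in, key_out):
    """Stand-in for a GPU kernel; it only sees buffers in its own heap."""
    gpu_heap.buffers[key_out] = [x * x for x in gpu_heap.buffers[key_in]]


def run_discrete(values):
    cpu, gpu, log = Heap("cpu"), Heap("gpu"), []
    cpu.buffers["in"] = list(values)    # 1. CPU prepares data in its own space
    copy_buffer(cpu, gpu, "in", log)    # 2. input is copied across the bus
    gpu_square(gpu, "in", "out")        # 3. GPU computes in its own space
    copy_buffer(gpu, cpu, "out", log)   # 4. results are copied back
    return cpu.buffers["out"], log


result, transfers = run_discrete([1, 2, 3])
print(result, transfers)
```

Even in this trivial case, every piece of data exists twice and crosses the "bus" twice, which is the overhead the article is pointing at.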

Today AMD is announcing hUMA, or Heterogeneous Uniform Memory Access. AMD has been talking about some of the features of hUMA for a while, but it is now ready to disclose more specifics, and it has a product that employs the technology already waiting in the wings.

To better understand hUMA, we should first talk a bit about the UMA (Uniform Memory Access) and NUMA (Non-Uniform Memory Access) architectures prevalent today. In its original sense, UMA refers to how the processing cores in a system view and access memory. In a UMA-capable architecture, all processing cores share a single memory address space. There is a single memory heap and all CPU cores can access any address.

The introduction of GPU computing, however, created systems with Non-Uniform Memory Access, or NUMA. NUMA requires data to be managed across multiple heaps with different address spaces for the CPUs and GPUs, which adds programming complexity due to frequent copies, synchronization, and address translation, as we mentioned above. There are also power and performance inefficiencies inherent to NUMA architectures because data is often duplicated and shuttled on and off chips over external busses.

In the slide shown here, there are a couple of basic block diagrams that illustrate UMA, NUMA, and hUMA. The top row in the slide shows four CPUs accessing a single pool of memory in a bi-directional, coherent manner in a UMA configuration. The next row represents a NUMA architecture, with CPUs and GPUs accessing separate memory blocks. In the NUMA configuration, there are discrete pools or heaps of memory for the CPUs and GPUs, and they each operate only within their own pools of memory. Things come full circle with hUMA though, with both the CPUs and GPUs accessing the entire memory space with bi-directional coherency.

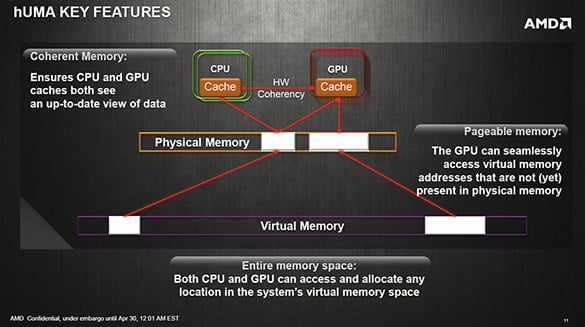

The main features of hUMA are:

- Bi-Directional Coherent Memory - Any updates made by one processing element will be seen by all other processing elements, GPU or CPU

- Pageable Memory - GPU can take page faults, and is no longer restricted to page locked memory

- Entire Memory Space - CPU and GPU processes can dynamically allocate memory from the entire memory space

This is how current NUMA architectures operate...

With hUMA, the CPU and GPU can share data in a uniform memory space.
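As a rough illustration of what sharing a uniform memory space means for code, here is a toy sketch in which the "CPU" and "GPU" routines work on the very same buffer. Again, this is a simplified model rather than AMD's programming interface: the `SharedHeap`, `cpu_fill`, `gpu_square_in_place`, and `run_shared` names are hypothetical, and a Python list reference stands in for a pointer that both processors can dereference.

```python
class SharedHeap:
    """Toy model of a single address space visible to every processor."""

    def __init__(self):
        self.buffers = {}


def cpu_fill(heap, key, values):
    """The 'CPU' allocates and fills a buffer in the shared space."""
    heap.buffers[key] = list(values)


def gpu_square_in_place(heap, key):
    """The 'GPU' updates the same buffer the CPU wrote; nothing is copied."""
    buf = heap.buffers[key]
    for i, x in enumerate(buf):
        buf[i] = x * x


def run_shared(values):
    heap = SharedHeap()
    cpu_fill(heap, "data", values)
    cpu_view = heap.buffers["data"]    # the CPU keeps its "pointer"
    gpu_square_in_place(heap, "data")  # the GPU works through the same pointer
    return cpu_view                    # the CPU sees the GPU's updates directly


print(run_shared([1, 2, 3]))
```

The point of the sketch is what is missing: there is no transfer step and no second copy of the data, so an update made by one side is immediately what the other side reads.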

AMD claims that hUMA-enabled platforms should be easier to program for, potentially lowering development costs as well. The technology is supported by mainstream programming languages like Python, C++, and Java, and should allow developers to more simply code for a particular compute resource with no need for special APIs. AMD also points out that there’s a level of power efficiency introduced with hUMA because parallel code that used to run on the CPU can now be run on the GPU, and vice versa.

If you’re wondering when hUMA-enabled products will hit the market, we’re told Kaveri will be the first APU to support the technology. Kaveri is the codename of AMD’s first APU built around the company’s Steamroller cores, which should offer significant performance improvements over current Piledriver-based products. Expect Kaveri to launch sometime in the second half of 2013.