AMD Unveils Monster Instinct MI300 Hybrid CPU-GPU AI Accelerator And Skynet Smiles

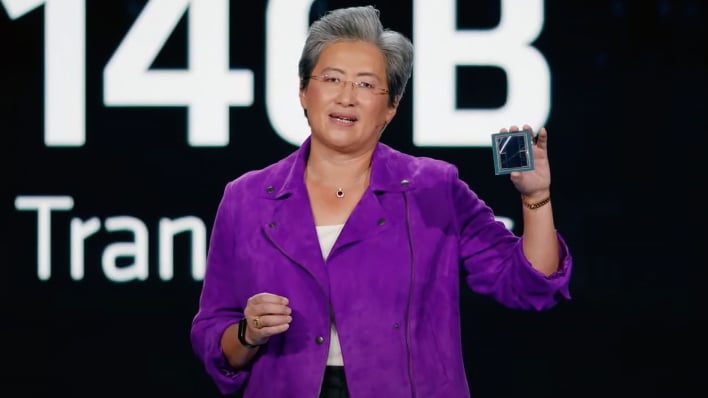

AMD's RDNA 3 GPU architecture, found in the Radeon RX 7900 family of graphics cards, was a tremendous leap in performance over the RDNA 2 architecture in the Radeon RX 6000 series. So it stands to reason that AMD would promise a similarly enormous leap for its next generation of high-performance and adaptive computing accelerators. Tonight, during the company's presentation on the opening night of CES, CEO Dr. Lisa Su announced the Instinct MI300, the latest in AMD's line of AI and compute accelerators, with a big focus on sustainability and controlling energy consumption while delivering massive performance.

Dr. Su opened AMD's presentation with a discussion of AI, calling it the most important "mega-trend" driving technology. AMD's stance is that AI is most helpful when it's available on all devices, whether in private datacenters, the public cloud, PCs, or mobile devices. AMD made a big deal about AI acceleration across all of its product announcements tonight, but it's server-focused applications where the Instinct MI300, which combines CPU and GPU compute resources on a single package, will make its home. Let's take a look at this new and more energy-efficient compute monster, whose architecture was first detailed back in June.

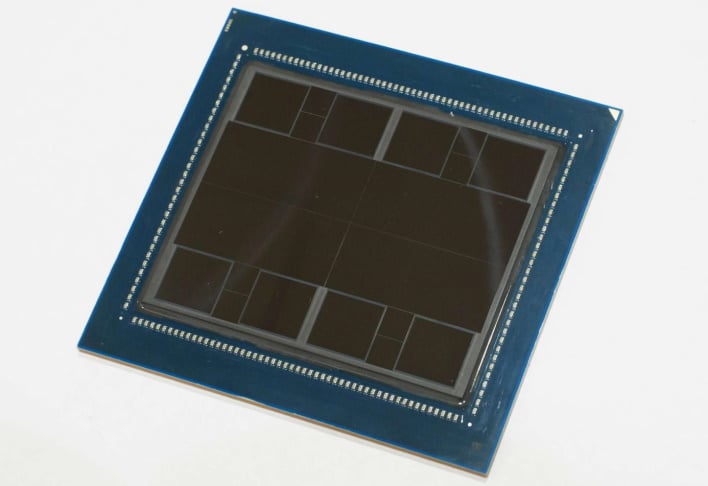

The Instinct MI300 is an enormous package, as the photo of Dr. Su holding one at CES tonight makes clear. Su says this is the most complex processor AMD has ever built. First up, there are 24 Zen 4 CPU cores (the same core architecture found in the latest EPYC datacenter CPUs) alongside an unspecified number of GPU compute units based on the company's next-generation CDNA 3 architecture. Nine 5nm chiplets are 3D-stacked on top of four 6nm chiplets, and the package carries 128 GB of HBM3 memory shared between the CPU and CDNA 3 resources. All told, the MI300 packs 146 billion transistors, and it almost fits in the palm of your hand, provided your hands are on the large side.

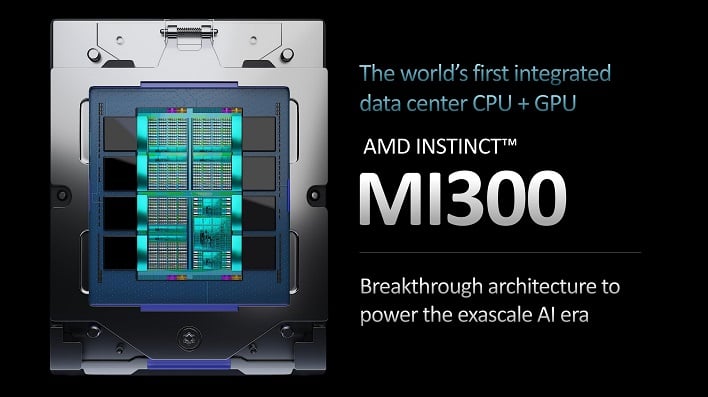

Previous Instinct products, like the MI200, were GPU-only accelerators rather than the hybrid design shown here. AMD says the hybrid CPU-and-GPU chiplet approach not only saves energy for a given compute load but also makes the chip easier to program than before. AMD claims this is the world's first datacenter processor to integrate CPU and GPU resources, along with this much memory bandwidth, into a single package.

In terms of performance, the MI300 is apparently quite stacked: AMD expects it can cut the six months or so needed to train large language models like those behind OpenAI's ChatGPT down to just a few weeks. Dr. Su says the AI compute performance of the new accelerator is a whopping eight times that of its predecessor, the Instinct MI250X. Beyond that, AMD says combining CPU and AI acceleration units in this fashion saves enough energy that the MI300's AI performance per watt is five times that of the previous generation. According to Su, the Instinct MI300 will ship in the second half of this year.
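AMD's two headline ratios can be combined into a quick back-of-envelope check. Because power equals performance divided by performance per watt, 8x the throughput at 5x the efficiency implies roughly 1.6x the power draw of an MI250X. Likewise, an 8x speedup applied to a six-month training run lands at a little over three weeks, in line with AMD's "few weeks" claim. The sketch below is only illustrative. It assumes that training time scales inversely with AI throughput, that both ratios were measured on the same workload and precision, and that the six-month baseline was run on MI250X-class hardware. AMD stated none of these assumptions, and the helper names are hypothetical.

```python
# Back-of-envelope check of AMD's MI300 claims (vendor figures, not measurements).
# ASSUMPTIONS (not stated by AMD): training time scales inversely with AI throughput,
# the 8x and 5x ratios share the same workload and precision, and the six-month
# baseline was measured on MI250X-class hardware.

MONTHS_TO_WEEKS = 52 / 12  # average number of weeks per month


def implied_power_ratio(perf_ratio: float, perf_per_watt_ratio: float) -> float:
    """Power = performance / (performance per watt), so the two ratios divide."""
    return perf_ratio / perf_per_watt_ratio


def scaled_training_weeks(baseline_months: float, speedup: float) -> float:
    """Training time in weeks if it shrinks in proportion to the throughput gain."""
    return baseline_months * MONTHS_TO_WEEKS / speedup


power = implied_power_ratio(8.0, 5.0)   # MI300 vs. MI250X, per AMD's claims
weeks = scaled_training_weeks(6.0, 8.0)  # six-month run at 8x throughput

print(f"Implied power draw vs. MI250X: {power:.2f}x")
print(f"Six-month training run at 8x throughput: {weeks:.2f} weeks")
```

If the real-world speedup on a given model falls short of the 8x peak figure, the training-time estimate grows accordingly, so treat the result as a best case.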
