AMD Radeon Instinct MI100 Leaks With 120 CUs, 32GB HBM2 And Claimed Ampere-Rivaling Performance

The second half of 2020 is going to be interesting, as it pertains to graphics products. AMD, NVIDIA, and even Intel are all planning to come out with new discrete GPU solutions. For AMD, that includes its Big Navi launch, based on its forthcoming second generation Radeon DNA (RDNA 2) graphics architecture. While we wait, some leaked slides purportedly showcase what AMD has up its sleeve for the datacenter market.

That would be its upcoming Radeon Instinct MI100 accelerator. If past leaks prove true, it will rock an Arcturus GPU based on AMD's Radeon CDNA graphics architecture, which is optimized for compute workloads such as machine learning and high performance computing (HPC), whereas RDNA is aimed at gaming. AMD's next-generation Radeon Instinct will compete against NVIDIA's Ampere-based A100 solutions.

The folks at AdoredTV got their mitts on some slides that they claim represent AMD's internal benchmarking results. Before we get to those, let's have a look at the leaked specifications...

- 120 Compute Units (CUs)

- 32GB HBM2 with ECC, 1.2TB per second bandwidth

- 300W typical power consumption

- Link up to 8 GPUs with 6 links, 600GB per second total

- PCI Express 4.0 support

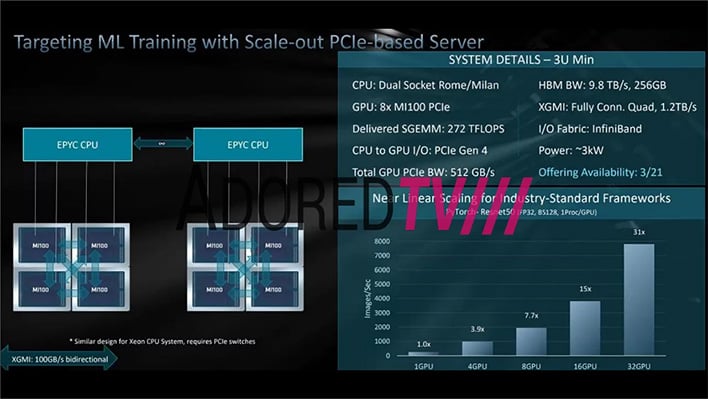

That's a beastly accelerator. In one of the slides, AMD supposedly shows benchmarking results from a dual socket system, with each CPU running a cluster of four GPUs, for a total of eight GPUs. Have a look...

Good stuff. Notice the claimed scaling as the number of GPUs increases, around 100 percent at 32 GPUs according to the slide. Only eight GPUs can be linked together directly, so this suggests that multiple eight-GPU clusters can be linked together as well.
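For readers wondering what a scaling figure like that means, here is a minimal sketch of how scaling efficiency is commonly computed: measured speedup over a single GPU divided by the ideal linear speedup. The function name and the throughput numbers are hypothetical placeholders for illustration, not values taken from the leaked slide, and we do not know which formula the slide itself uses.

```python
def scaling_efficiency(throughput_one_gpu, throughput_n_gpus, n_gpus):
    """Return measured speedup as a fraction of ideal linear speedup."""
    if n_gpus < 1 or throughput_one_gpu <= 0:
        raise ValueError("need at least one GPU and a positive baseline")
    speedup = throughput_n_gpus / throughput_one_gpu
    return speedup / n_gpus


# Hypothetical throughput figures in arbitrary units (NOT from the slide).
baseline = 10.0
measured = {8: 79.0, 32: 318.0}

for n_gpus, throughput in measured.items():
    efficiency = scaling_efficiency(baseline, throughput, n_gpus)
    print(f"{n_gpus} GPUs: {efficiency:.1%} of linear scaling")
```

A result near 100 percent at 32 GPUs would mean that 32 accelerators deliver close to 32 times the throughput of one.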

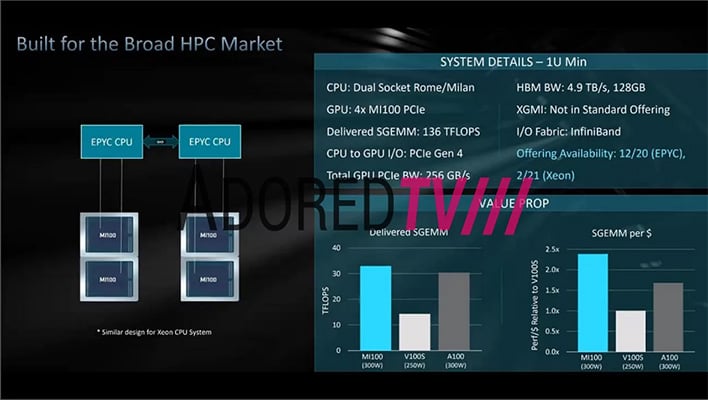

However, it is the second slide that is more interesting. That's because it compares the performance and value of AMD's Radeon Instinct MI100 with NVIDIA's Ampere-based A100 and Volta-based V100 GPUs. Have a look...

Bear in mind that we have not confirmed if any of this is real. Disclaimer out of the way, the above slide shows two Radeon Instinct MI100 GPU clusters per CPU.

In the performance section, AMD is purportedly claiming a better bang-for-buck can be had with its Radeon Instinct MI100 accelerators compared to NVIDIA's Ampere solutions, as it applies to single-precision floating-point general matrix multiply (SGEMM) performance.
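To make the bang-for-buck idea concrete, here is a rough sketch of how an SGEMM performance-per-dollar figure could be derived. It uses the usual convention that multiplying an m-by-k matrix by a k-by-n matrix costs 2·m·n·k floating-point operations (one multiply and one add per term). The function names, run time, and price are hypothetical placeholders, not numbers from the slide or from either vendor.

```python
def sgemm_flops(m, n, k):
    """Floating-point operations for C = A @ B, with A (m x k) and B (k x n)."""
    return 2 * m * n * k


def tflops_per_dollar(m, n, k, seconds, price_usd):
    """Achieved SGEMM throughput in TFLOPS divided by hardware price."""
    if seconds <= 0 or price_usd <= 0:
        raise ValueError("seconds and price_usd must be positive")
    tflops = sgemm_flops(m, n, k) / seconds / 1e12
    return tflops / price_usd


# Hypothetical example: an 8192^3 SGEMM timed at 0.05 s on a $5,000 card.
# Both the timing and the price are made up for illustration.
value = tflops_per_dollar(8192, 8192, 8192, seconds=0.05, price_usd=5000.0)
print(f"{value:.6f} TFLOPS per dollar")
```

Comparing that ratio across accelerators is one way a chart like the leaked one could be built, though the slide does not say how its value metric was calculated.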

We'll have to wait and see how things actually play out. It's also worth noting that Intel will make a run for the same market with Arctic Sound. Fun times ahead.