Edge Of Chaos Network Tech Leverages Nanowire Pathways For Brain-Like Skynet AI

Scientists and researchers are forever in pursuit of increasingly sophisticated artificial intelligence technologies, especially today, with AI playing such a big role in so many different products and services. One of the more interesting developments in AI involves nanowire networks trained to solve simple problems while operating at the edge of a chaotic state.

The underlying concept is based on the scientific theory that human brains function best when they are "at the edge of chaos," otherwise known as a "critical state." This is where some neuroscientists say humans can achieve maximum brain performance.

With that in mind, an international team of scientists at the University of Sydney and Japan's National Institute for Materials Science (NIMS) say they have discovered that electrical stimulation can cause an artificial network of tuned nanowires to respond much like a human brain does.

What they did was take PVP-coated (polyvinylpyrrolidone) wires measuring 10 micrometers long and up to 500 nanometers thick and arrange them randomly on a two-dimensional plane. Electrochemical junctions form where the wires overlap, similar to synapses between neurons in a human brain.
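To make that geometry a little more concrete, here is a minimal Python sketch that scatters straight wires at random on a plane and records a junction wherever two of them cross. Only the roughly 10-micrometer wire length comes from the article; the 50-micrometer plane, the wire count, and the function names (`random_wires`, `junction_graph`) are illustrative assumptions, and real nanowires are neither perfectly straight nor infinitely thin.

```python
import math
import random

# Illustrative parameters: the wire length follows the article,
# the plane size is a hypothetical choice.
WIRE_LENGTH_UM = 10.0
PLANE_SIZE_UM = 50.0


def random_wires(n, seed=0):
    """Drop n straight wires with random centers and orientations on a square plane.

    Centers lie inside the plane; wire ends may poke slightly past its edges.
    """
    rng = random.Random(seed)
    wires = []
    for _ in range(n):
        cx, cy = rng.uniform(0, PLANE_SIZE_UM), rng.uniform(0, PLANE_SIZE_UM)
        theta = rng.uniform(0, math.pi)
        dx = 0.5 * WIRE_LENGTH_UM * math.cos(theta)
        dy = 0.5 * WIRE_LENGTH_UM * math.sin(theta)
        wires.append(((cx - dx, cy - dy), (cx + dx, cy + dy)))
    return wires


def _cross(o, a, b):
    """z-component of (a - o) x (b - o); its sign says which side of o->a point b is on."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def segments_intersect(s1, s2):
    """True if two segments properly cross (collinear touching is ignored)."""
    p1, p2 = s1
    p3, p4 = s2
    d1, d2 = _cross(p3, p4, p1), _cross(p3, p4, p2)
    d3, d4 = _cross(p1, p2, p3), _cross(p1, p2, p4)
    return d1 * d2 < 0 and d3 * d4 < 0


def junction_graph(wires):
    """Adjacency sets: wire i is linked to wire j wherever the two overlap."""
    adj = {i: set() for i in range(len(wires))}
    for i in range(len(wires)):
        for j in range(i + 1, len(wires)):
            if segments_intersect(wires[i], wires[j]):
                adj[i].add(j)
                adj[j].add(i)
    return adj


if __name__ == "__main__":
    wires = random_wires(100)
    graph = junction_graph(wires)
    n_junctions = sum(len(v) for v in graph.values()) // 2
    print(f"{len(wires)} wires, {n_junctions} junctions")
```

Each wire becomes a node and each overlap an edge, so electrical signals in this picture can only travel along chains of overlapping wires.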

"We found that electrical signals put through this network automatically find the best route for transmitting information. And this architecture allows the network to ‘remember’ previous pathways through the system," said Joel Hochstetter, lead author of the study and a doctoral candidate in the University of Sydney Nano Institute and School of Physics.

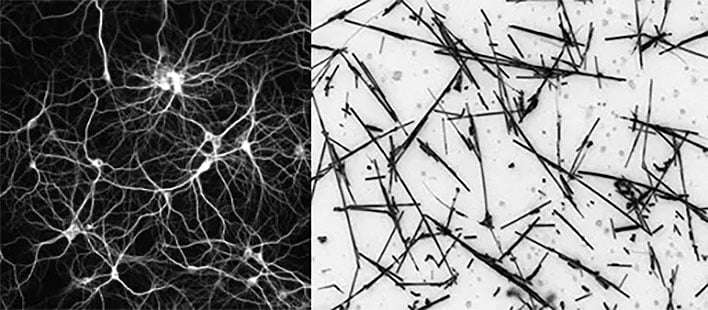

Drawing of a neural network (left) and an optical micrograph image of a nanowire network (right) - Source: Adrian Diaz-Alvarez/NIMS Japan

The team published their results in Nature Communications, and the paper is rife with mathematical equations and, to my eyeballs, a whole bunch of scientific technobabble (I mean that in a good way). It's not light reading, in other words. The gist of it is that the nanowire network performs optimally at the edge of chaos.

When stimulated with weak electrical signals, the nanowire network formed pathways that were predictable but too simple to be of any real use for complex problems. At the other end of the spectrum, overwhelming the network with strong electrical signals produced chaotic outputs that were equally unusable.
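The two regimes described above, orderly but too simple versus chaotic and unusable, can be illustrated with a textbook toy model rather than the team's nanowire simulation. The minimal Python sketch below uses a random recurrent network that updates as `x <- tanh(gain * W x)` and estimates its largest Lyapunov exponent: negative values mean nearby states converge (ordered), positive values mean they fly apart (chaotic), and the edge of chaos sits where the estimate crosses zero. The `gain` knob is an assumption standing in for how hard the network is driven, and the network size, step counts, and function names are illustrative choices.

```python
import math
import random


def make_weights(n, seed=0):
    """Random recurrent weights with variance 1/n (spectral radius close to 1)."""
    rng = random.Random(seed)
    std = 1.0 / math.sqrt(n)
    return [[rng.gauss(0.0, std) for _ in range(n)] for _ in range(n)]


def step(w, x, gain):
    """One network update: x <- tanh(gain * W x)."""
    return [math.tanh(gain * sum(wij * xj for wij, xj in zip(row, x))) for row in w]


def lyapunov(gain, n=50, steps=200, transient=100, eps=1e-8, seed=0):
    """Estimate the largest Lyapunov exponent from the average log growth of a tiny perturbation."""
    rng = random.Random(seed + 1)
    w = make_weights(n, seed)
    x = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    for _ in range(transient):  # let the network settle onto its long-run behavior
        x = step(w, x, gain)
    y = [xi + eps / math.sqrt(n) for xi in x]  # perturbed copy at distance eps
    total = 0.0
    for _ in range(steps):
        x = step(w, x, gain)
        y = step(w, y, gain)
        d = math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))
        if d == 0.0:
            return float("-inf")  # the perturbation vanished entirely
        total += math.log(d / eps)
        # Rescale the perturbation back to size eps so it never saturates or underflows.
        y = [a + (b - a) * eps / d for a, b in zip(x, y)]
    return total / steps


if __name__ == "__main__":
    for g in (0.5, 0.9, 1.1, 1.5, 3.0):
        print(f"gain={g:.1f}  lyapunov={lyapunov(g):+.3f}")
```

Sweeping `gain` upward should move the estimate from clearly negative toward zero and then positive. For this family of random networks the crossover typically lands near a gain of 1, though a single small network will wobble around that value.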

The solution was not to find a happy medium, though. Instead, as the scientists theorized, the best results came at the very edge of the chaotic state. You can sort of think of it as overclocking for the highest stable performance: you push a component as far as it will go, then dial things back a touch to maintain stability.

It's not a perfect analogy, but it should convey the general concept at play here. The upshot is that this approach could reduce energy consumption in AI networks by doing away with the algorithms otherwise needed to train a network to determine the proper load and weight for each junction.

"We just allow the network to develop its own weighting, meaning we only need to worry about signal in and signal out, a framework known as ‘reservoir computing’. The network weights are self-adaptive, potentially freeing up large amounts of energy," explains Professor Zdenka Kuncic.
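Kuncic's "signal in and signal out" description matches the standard reservoir-computing recipe: a fixed, randomly connected dynamical system (the reservoir) transforms the input, and only a simple linear readout on its states is trained. The Python sketch below uses a software echo state network as a stand-in for the nanowire hardware, which is an assumption; the reservoir size, scalings, the delayed-recall task, and all function names are hypothetical choices for illustration. The readout is asked to recall the input from two steps earlier, something it can only do if the reservoir's state carries a memory of past inputs.

```python
import math
import random

# Hypothetical sizes and scalings chosen for illustration; none come from the paper.
N_RES = 30         # reservoir units
GAIN = 0.9         # scales the random recurrent weights
INPUT_SCALE = 1.0  # range of the random input weights
RIDGE = 1e-4       # regularization for the readout fit


def make_reservoir(seed=0):
    """Draw the reservoir's internal and input weights once; they are never trained."""
    rng = random.Random(seed)
    std = GAIN / math.sqrt(N_RES)
    w = [[rng.gauss(0.0, std) for _ in range(N_RES)] for _ in range(N_RES)]
    w_in = [rng.uniform(-INPUT_SCALE, INPUT_SCALE) for _ in range(N_RES)]
    return w, w_in


def run_reservoir(w, w_in, inputs):
    """Drive the fixed reservoir; return one state vector (plus a bias entry) per input."""
    x = [0.0] * N_RES
    states = []
    for u in inputs:
        x = [math.tanh(sum(wij * xj for wij, xj in zip(row, x)) + wi * u)
             for row, wi in zip(w, w_in)]
        states.append(x + [1.0])  # constant 1.0 acts as a bias feature
    return states


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def train_readout(states, targets):
    """Ridge regression on reservoir states: the only trained part of the system."""
    d = len(states[0])
    a = [[RIDGE if i == j else 0.0 for j in range(d)] for i in range(d)]
    b = [0.0] * d
    for s, y in zip(states, targets):
        for i in range(d):
            b[i] += s[i] * y
            for j in range(d):
                a[i][j] += s[i] * s[j]
    return solve(a, b)


def predict(readout, states):
    return [sum(wi * si for wi, si in zip(readout, s)) for s in states]


def nmse(pred, target):
    """Mean squared error normalized by the target's variance (1.0 = no better than the mean)."""
    mean = sum(target) / len(target)
    var = sum((t - mean) ** 2 for t in target) / len(target)
    err = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)
    return err / var


def delay_task(n_steps, delay=2, seed=1):
    """Random input stream; the target is the input from `delay` steps earlier."""
    rng = random.Random(seed)
    u = [rng.uniform(-0.5, 0.5) for _ in range(n_steps)]
    y = [u[t - delay] if t >= delay else 0.0 for t in range(n_steps)]
    return u, y


if __name__ == "__main__":
    w, w_in = make_reservoir()
    u, y = delay_task(800)
    states = run_reservoir(w, w_in, u)
    washout, split = 50, 600  # skip start-up states, then train/test split
    readout = train_readout(states[washout:split], y[washout:split])
    print(f"test NMSE: {nmse(predict(readout, states[split:]), y[split:]):.4f}")
```

Only `train_readout` ever fits a weight; `make_reservoir` draws the internal connections once and leaves them alone. In the physical system, the nanowire network itself would play the role of `run_reservoir`, adapting on its own rather than being trained.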

You can check out the full edge-of-chaos paper for all the geeky details. Then cross your fingers that super-intelligent AI never becomes impossible to contain.