The Godfather Of AI Issues An Ominous Warning After Quitting Google

In a recent interview, Google CEO Sundar Pichai admitted that the company does not fully understand how AI works and that society is not prepared for the rapid development of the technology that lies ahead. His comments followed an open letter, signed by figures such as Elon Musk and Steve Wozniak, that warned of AI's potential risks and argued that the race to develop the technology is dangerously out of control. Now Dr. Geoffrey Hinton is speaking out, saying he regrets his life's work.

Shortly after the first open letter was published online, current and former leaders of the Association for the Advancement of Artificial Intelligence released a second one. Its signatories included Eric Horvitz, Chief Scientific Officer at Microsoft. Dr. Hinton signed neither letter, saying he did not want to speak out until he had left Google.

Dr. Hinton is a pioneer of artificial intelligence. In 2012, he and two of his graduate students at the University of Toronto, Ilya Sutskever and Alex Krizhevsky, built a neural network that became the intellectual foundation for the AI systems now being developed by companies like OpenAI.

"It is hard to see how you can prevent the bad actors from using it for bad things," Dr. Hinton remarked.

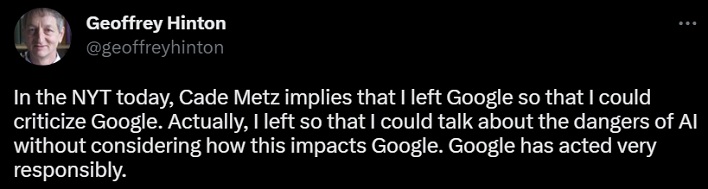

In a tweet following his interview with the New York Times, Dr. Hinton clarified why he left Google. "In the NYT today, Cade Metz implies that I left Google so that I could criticize Google," he wrote. "Actually, I left so that I could talk about the dangers of AI without considering how this impacts Google. Google has acted very responsibly."

Describing the pace of AI progress in his interview, Dr. Hinton said, "Look at how it was five years ago and how it is now." He added, "Take the difference and propagate it forwards. That's scary."

As for how AI could affect the job market in the coming years, Dr. Hinton is concerned that while the technology can take "away the drudge work," it could also "take away more than that." He added that he once believed AI was 30 to 50 years or more away from becoming smarter than humans, but he no longer believes that.

The interview concluded with Dr. Hinton being asked how he could have worked on something so potentially dangerous. He paraphrased Robert Oppenheimer, who led the effort to build the atomic bomb: "When you see something that is technically sweet, you go ahead and do it."