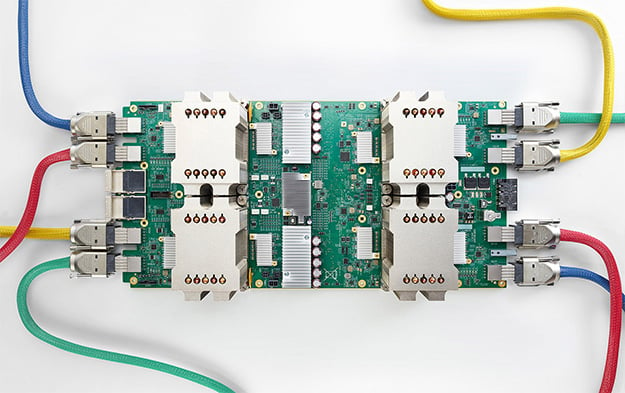

Google Second Gen Tensor Processing Unit Rivals NVIDIA GPUs In Cloud AI Computing

Stop and think for a moment about how much of the Internet is powered by Google. Many of you reading this have at least one Gmail account, if not several, and for many people Google is the go-to search engine. It is pretty amazing when you think about it, and even more so when you consider the underlying hardware and technologies involved. One of those bits is Google's Tensor Processing Unit (TPU), a mighty chip that just became even mightier.

These custom ASICs that Google built help power the company's machine learning efforts. Services such as Street View and Inbox Smart Reply lean heavily on machine learning to deliver a seamless experience to customers. But as Google points out, training state-of-the-art machine learning models requires considerable computation, oftentimes resulting in researchers, engineers, and data scientists having to wait weeks for results. Google's second generation TPU aims to solve that.

TPUs are essentially machine learning accelerators. In this case, each of Google's new generation TPUs can deliver up to 180 teraflops of floating-point performance. They're also designed to be connected into larger systems for massive number crunching and data processing. A pod with 64 TPUs can apply up to 11.5 petaflops (yes, PETAFLOPS!) of computation to a single machine learning task.
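For readers who want to check the math, the pod figure follows from the per-chip figure. The quick sketch below multiplies the two numbers Google quoted; the constant and function names are just illustrative, and it assumes peak throughput scales linearly across the pod, which real workloads will not necessarily achieve.

```python
# Peak figures quoted by Google for its second generation TPU.
TFLOPS_PER_TPU = 180   # teraflops per TPU (peak)
TPUS_PER_POD = 64      # TPUs in one pod

def pod_petaflops(tpus=TPUS_PER_POD, tflops_per_tpu=TFLOPS_PER_TPU):
    """Return aggregate peak throughput in petaflops.

    Assumes perfect linear scaling (an idealization), and uses
    1 petaflop = 1,000 teraflops.
    """
    return tpus * tflops_per_tpu / 1000

print(f"{pod_petaflops():.2f} petaflops")  # prints "11.52 petaflops"
```

That works out to 11.52 petaflops, which matches the "up to 11.5 petaflops" number Google is advertising.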

To ensure that everyone can benefit from this kind of machine learning power, Google is bringing its second generation TPUs to the cloud.

"You’ll be able to mix-and-match Cloud TPUs with Skylake CPUs, NVIDIA GPUs, and all of the rest of our infrastructure and services to build and optimize the perfect machine learning system for your needs. Best of all, cloud TPUs are easy to program via TensorFlow, the most popular open-source machine learning framework," Google states in a blog post.

As part of this initiative, Google is creating what it calls the TensorFlow Research Cloud, a cluster of 1,000 cloud TPUs that it will make available to top researchers at no cost. The idea is to give promising researchers the computing muscle they need to "explore bold new ideas" that might otherwise be impractical or cost-prohibitive.

Google is in the process of setting up a program to vet potential researchers and projects. It will be interesting to see where this goes.

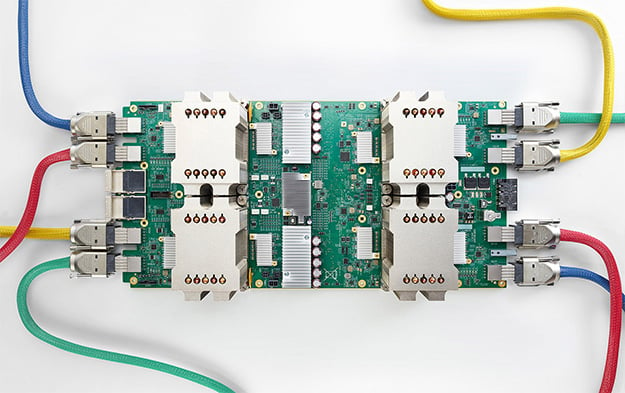
