Google Teaches Robots To Walk On Their Own, Skynet Overlords Are Pleased

Today's autonomous robots typically learn to navigate obstacles and perform tasks with reinforcement-learning algorithms that rely on trial and error in a preset virtual environment, with a lot of human intervention along the way. Google researchers have taken things to the next level with robots that can learn basic functions we take for granted, such as walking, on their own.

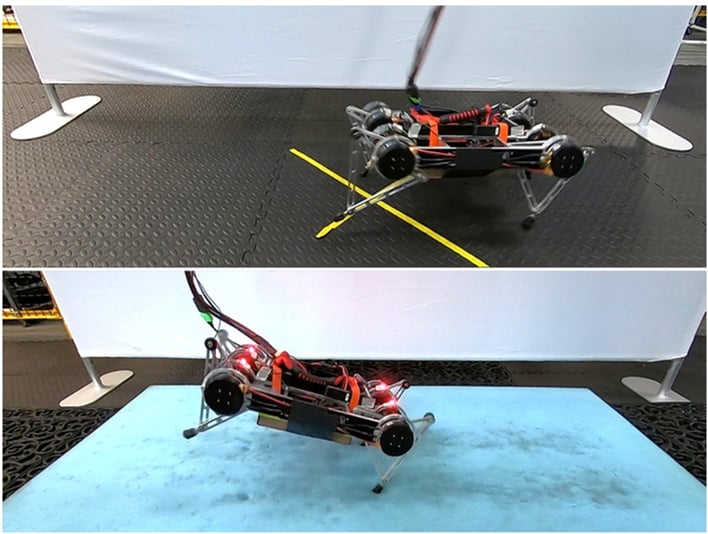

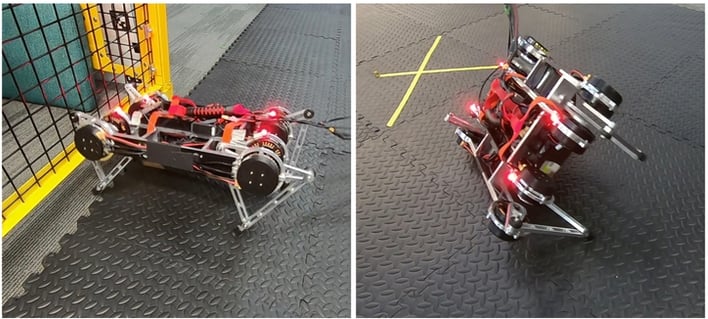

For example, within a few hours of being "born," a four-legged robot was able to stand up and walk (both forward and backward) on its own using deep reinforcement learning (Deep RL). "[Deep RL] can learn control policies automatically, without any prior knowledge about the robot or the environment," the Google researchers write in their paper. "In principle, each time the robot walks on a new terrain, the same learning process can be applied to acquire an optimal controller for that environment."

Placing the robot in an environment with no preset routines allowed it to adapt more quickly to difficult situations. For example, modeling in advance how a robot should handle inclines, gravel, steps, or uneven or slippery surfaces could take considerable time to compute, and lengthy trial-and-error runs would still be needed on top of that. Letting the robot adapt to these situations in real time, however, proved a much more efficient way for it to navigate its new environment.

Even with all this "on the job" training going on, humans still had to intervene "hundreds of times" during the real-time training process. Eventually, however, the researchers were able to set boundaries restricting where the robot could go. When it hit a boundary while walking forward, for example, the robot was able to acquire a new skill: walking backward to escape. Once these minor tweaks were in place, the robot grew steadily better at navigating without further human intervention.
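The boundary trick can be pictured with a small sketch. This is not the researchers' code: it assumes a hypothetical one-dimensional training strip running from `x_min` to `x_max`, a hypothetical helper `next_task`, and an illustrative safety `margin`. It shows only the switching rule (keep practicing the current direction until the robot nears the edge it is heading toward, then practice the opposite direction), not the deep RL learning itself.

```python
def next_task(task: str, x: float, x_min: float, x_max: float,
              margin: float = 0.3) -> str:
    """Hypothetical rule for choosing which walking skill to practice next.

    Direction switches only when the robot nears the edge it is heading
    toward, so it walks back into the training area on its own instead of
    needing a human to carry it back.
    """
    if task == "forward" and x >= x_max - margin:
        return "backward"
    if task == "backward" and x <= x_min + margin:
        return "forward"
    return task


def simulate(steps: int = 100, step: float = 0.1,
             x_min: float = 0.0, x_max: float = 5.0) -> list[float]:
    """Toy simulation: each step moves the robot `step` meters in its
    current direction. Step size and strip length are assumptions."""
    x, task = (x_min + x_max) / 2, "forward"
    positions = []
    for _ in range(steps):
        task = next_task(task, x, x_min, x_max)
        x += step if task == "forward" else -step
        positions.append(x)
    return positions


positions = simulate()
print(f"stayed between {min(positions):.2f} m and {max(positions):.2f} m")
```

Checking the heading before switching matters: a rule that flipped direction whenever the robot was merely near any edge would make it oscillate in place at the boundary.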

“Removing the person from the process is really hard. By allowing robots to learn more autonomously, robots are closer to being able to learn in the real world that we live in, rather than in a lab,” the researchers added.

Eventually, the Google researchers hope to use their new Deep RL algorithms to let multiple robots operate within the same environment, and even to expand beyond the four-legged form factor.