Google DeepMind's RT-2 AI Model Will Help Robots Serve Humans Like R2-D2

Sci-fi fans have long adored loyal futuristic companion robots such as Star Wars' R2-D2. Fans of the original trilogy became enamored with the vacuum cleaner-shaped robot as it beeped and booped its way through danger. Nearly every kid in the late '70s and early '80s dreamed of having their very own R2-D2 sidekick. Companies like Google have been making advancements in robotics, and Google's recent RT-2 results hold promise for the day an R2-D2 will be available to all, sort of.

RT-2 essentially learns from RT-1 robot data, culminating in a vision-language-action (VLA) model that can control a robot. The results show that RT-2 has improved generalization capabilities, along with semantic and visual understanding that go beyond the robotic data it was initially exposed to. According to the new paper, RT-2 can also interpret commands it has not seen before and respond to user commands by performing rudimentary reasoning, such as reasoning "about object categories or high-level descriptions."

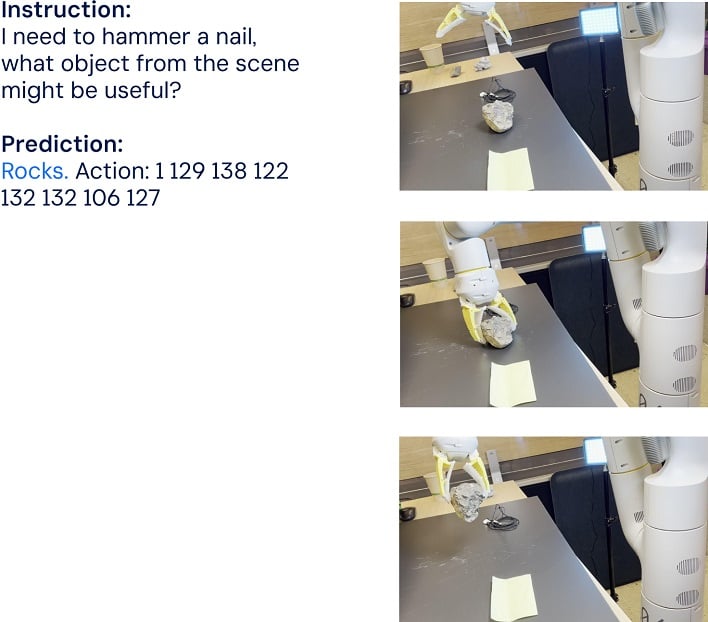

RT-2 can also incorporate chain-of-thought reasoning, which allows it to perform multi-stage semantic reasoning. This includes deciding which of several objects is better suited to the job at hand, such as choosing a rock over a piece of paper to drive a nail.

While having a robotic friend like R2-D2 may still be a ways off, Google DeepMind and other companies are striving to deliver a more competent and capable robotic helpmate in the near future with advancements like RT-2.