OpenAI's GPT-4 Can Play Doom But Your Grandma Could Give It A Beat Down

Along similar lines, people have been trying to get large language models like GPT-4 to do a great many things that they really aren't suited for. We've seen GPT-4 play Minecraft with some human assistance, but that's really about as far as it goes for a generalized language model. All of the other success stories you've read about AI-powered automation use specially-trained models built and taught for the task, often with human help.

As a joke, Microsoft AI scientist Adrian de Wynter decided to see if GPT-4 could run DOOM. The answer is "mostly no, but kind of, maybe." It would be possible to make it work to the degree that projects like "running DOOM in Notepad" work, which is to say that it would simply be feeding the output of the DOOM engine through GPT-4. Failing that, he decided to instead see if GPT-4 could play DOOM—that is, whether it could actually navigate and complete a level on its own.

It took quite a bit of fiddling to get things going. Essentially, he rigged up a setup in which a computer screenshots every frame of DOOM gameplay and runs it through the GPT-4 with Vision ("GPT-4V") API, which converts the screenshot into text explaining the current state of the game. That description is then sent to a second instance of GPT-4, which uses it to generate game inputs that are finally sent back to the game.
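To make the shape of that loop concrete, here is a minimal Python sketch. It is not de Wynter's code: the function names, the action vocabulary, and the stubbed-out model calls are all assumptions made for illustration. In a real setup, `describe` would call a vision model and `plan` would call a text model; here they are plain callables so the sketch runs on its own.

```python
from typing import Callable, List

# Hypothetical action vocabulary; the article does not list the real inputs.
ALLOWED_ACTIONS = {"FORWARD", "BACKWARD", "TURN_LEFT", "TURN_RIGHT", "FIRE", "USE"}


def parse_actions(reply: str) -> List[str]:
    """Keep only recognized action names from the planner's free-text reply."""
    tokens = reply.upper().replace(",", " ").split()
    return [token for token in tokens if token in ALLOWED_ACTIONS]


def run_loop(
    capture: Callable[[], bytes],        # grabs a screenshot of the game
    describe: Callable[[bytes], str],    # stand-in for the vision model
    plan: Callable[[str], str],          # stand-in for the second model
    send: Callable[[List[str]], None],   # delivers inputs to the game
    steps: int,
) -> List[List[str]]:
    """Run the screenshot -> description -> inputs loop for a fixed number of steps."""
    history = []
    for _ in range(steps):
        frame = capture()
        description = describe(frame)
        actions = parse_actions(plan(description))
        send(actions)
        history.append(actions)
    return history


if __name__ == "__main__":
    # Stub components (assumptions): no game and no model are involved here.
    log = run_loop(
        capture=lambda: b"fake-frame",
        describe=lambda frame: "An enemy stands directly ahead.",
        plan=lambda text: "fire" if "enemy" in text.lower() else "forward",
        send=lambda actions: print("sending:", actions),
        steps=2,
    )
    print(log)
```

Filtering the planner's reply against a fixed action list is a design choice of this sketch, meant to keep free-form model text from reaching the game as invalid input.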

This process works—the language model was able to navigate levels and engage in combat, if rather clumsily. However, as de Wynter himself said, "my grandma has played it wayyy better than this model." GPT-4 struggles with the game, having a hard time aiming and firing at enemies, activating switches and doors, and even just navigating the simple spaces of DOOM's E1M1: Hangar stage. The researcher says that after much work, he was able to get the AI to find the final room, but that it was unable to complete the stage.

If you're not up for reading the whole academic paper on the topic, de Wynter created a "TL;DR" page for the paper that you can read over. It's actually quite an interesting read even if you aren't an AI researcher, as de Wynter explains that people really overestimate the capabilities of these large language models like GPT-4. It isn't really capable of reasoning outside of extremely small contexts, and it lacks basic ideas like object permanence. The idea that GPT-4 can replace human workers instead of simply being a tool to assist them is pretty laughable.

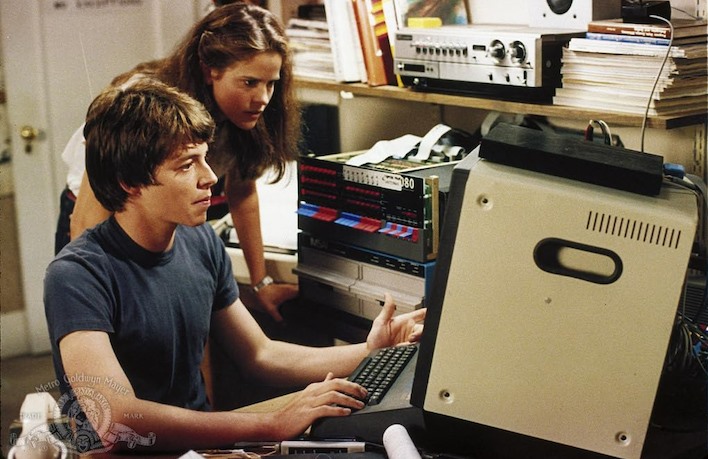

Still, de Wynter expresses concern at how easily he was able to get the neural network to engage in wanton violence, opening fire on human-like targets. It's a decent point; in theory, GPT-4's guardrails should have made that particular step of the process quite challenging. Perhaps the model understood that it was playing a game? That'd be a fair step up over Joshua in WarGames, anyway.