HBM3 Specification Leaves HBM2E In The Dust With 819GB/s Of Bandwidth

At long last, there's an official and finalized specification for the next generation of High Bandwidth Memory. JEDEC Solid State Technology Association, the industry group that develops open standards for microelectronics, announced the publication of the HBM3 specification, which doubles the bandwidth of HBM2 and comfortably outpaces HBM2E. It also increases the maximum package capacity.

So what are we looking at here? The HBM3 specification calls for a doubling (compared to HBM2) of the per-pin data rate to 6.4 gigabits per second (Gb/s), which works out to 819 gigabytes per second (GB/s) per device.

To put those figures into perspective, HBM2 has a per-pin transfer rate of 3.2Gb/s, equating to 410GB/s of bandwidth, while HBM2E pushes a little further with a 3.6Gb/s data rate and 460GB/s of bandwidth. So HBM3 effectively doubles the bandwidth of HBM2, and offers around 78 percent more bandwidth than HBM2E.
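For anyone who wants to check the math, here is a minimal sketch of how the per-pin rate turns into per-device bandwidth. It assumes the 1024-bit interface width that HBM stacks use, a figure the article does not state, and the function and variable names are purely illustrative.

```python
# Assumption: each HBM device exposes a 1024-bit data interface.
# This width is not given in the article.
INTERFACE_WIDTH_BITS = 1024


def device_bandwidth_gbs(pin_rate_gbps: float,
                         width_bits: int = INTERFACE_WIDTH_BITS) -> float:
    """Return peak bandwidth in GB/s for a per-pin data rate in Gb/s."""
    # Total bits per second across all pins, divided by 8 bits per byte.
    return pin_rate_gbps * width_bits / 8


hbm2_gbs = device_bandwidth_gbs(3.2)  # HBM2 per-pin rate from the article
hbm3_gbs = device_bandwidth_gbs(6.4)  # HBM3 per-pin rate from the article

# HBM2E bandwidth figure as quoted in the article.
HBM2E_QUOTED_GBS = 460
gain_over_hbm2e_pct = (hbm3_gbs / HBM2E_QUOTED_GBS - 1) * 100

print(f"HBM2: {hbm2_gbs:.1f} GB/s")
print(f"HBM3: {hbm3_gbs:.1f} GB/s")
print(f"HBM3 gain over HBM2E: {gain_over_hbm2e_pct:.0f} percent")
```

The quoted 410GB/s and 819GB/s figures are these results rounded to whole numbers.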

Alongside the faster data rate, HBM3 doubles the number of independent memory channels from eight (HBM2) to 16. And with two pseudo channels per channel, HBM3 effectively supports 32 channels.

Once again, the use of die stacking pushes capacities further. HBM3 supports 4-high, 8-high, and 12-high TSV stacks, and could expand to a 16-high TSV stack design in the future. It also supports a wide range of densities, from 8Gb to 32Gb per memory layer. That translates to device densities ranging from 4GB (4-high, 8Gb) all the way to 64GB (16-high, 32Gb). Initially, however, JEDEC says first-gen devices will be based on a 16Gb memory layer design.
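The capacity figures follow from multiplying the stack height by the per-layer density and converting gigabits to gigabytes. A quick sketch, with illustrative names and only the stack heights and layer densities mentioned above:

```python
def stack_capacity_gb(layers: int, layer_density_gbit: int) -> float:
    """Return device capacity in GB for a stack of identical memory layers."""
    # Layer density is given in gigabits; 8 bits per byte.
    return layers * layer_density_gbit / 8


# Stack heights named in the article (16-high is a possible future option).
STACK_HEIGHTS = (4, 8, 12, 16)

# The two endpoints of the supported range.
smallest_gb = stack_capacity_gb(4, 8)
largest_gb = stack_capacity_gb(16, 32)

# First-gen devices are expected to use 16Gb layers.
first_gen_gb = {height: stack_capacity_gb(height, 16) for height in STACK_HEIGHTS}

print(f"Smallest: {smallest_gb:.0f} GB, largest: {largest_gb:.0f} GB")
for height, capacity in first_gen_gb.items():
    print(f"{height}-high with 16Gb layers: {capacity:.0f} GB")
```

With 16Gb layers, a 12-high stack works out to 24GB.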

"With its enhanced performance and reliability attributes, HBM3 will enable new applications requiring tremendous memory bandwidth and capacity," said Barry Wagner, Director of Technical Marketing at NVIDIA and JEDEC HBM Subcommittee Chair.

There's little-to-no chance you'll see HBM3 in NVIDIA's Ada Lovelace or AMD's RDNA 3 solutions for consumers. AMD dabbled with HBM on some of its prior graphics cards for gaming, but GDDR solutions are cheaper to implement. Instead, HBM3 will find its way to the data center.

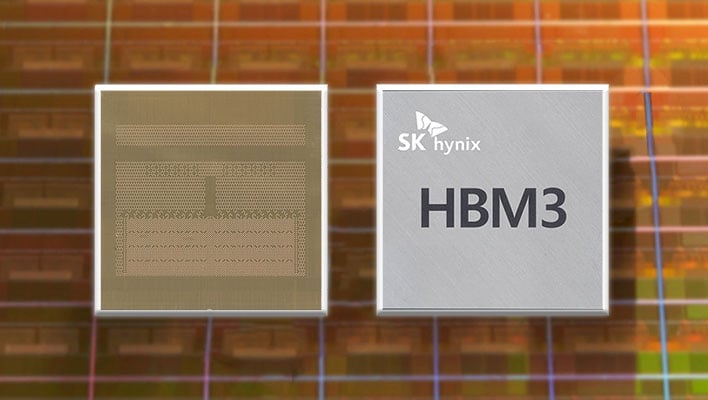

SK Hynix said as much last year when it flexed 24GB of HBM3 at 819GB/s, which is fast enough to transmit 163 Full HD 1080p movies at 5GB each in just one second. SK Hynix at the time indicated the primary destination will be high-performance computing (HPC) clients and machine learning (ML) platforms.