Advancing AI And ‘Huang’s Law’ Could Be Why NVIDIA Moved To Acquire Arm

Many in the tech world are familiar with the term “Moore’s Law,” which has served as a guiding light for tech companies over the last few decades when it comes to increasing transistor density. However, both scientists and the tech industry have wondered when Moore’s Law will cease to be relevant, and what will come next. It was recently proposed that “Huang’s Law” may be the next big thing, especially with respect to artificial intelligence.

Moore’s Law is an observation named after Intel co-founder Gordon Moore. In 1965, Moore observed that transistor densities were doubling every year and predicted that this pace would continue for at least ten years. A greater number of transistors generally leads to more powerful devices. He revised his observation in 1975, stating instead that transistor densities would double every two years. Moore’s observation has proven broadly accurate and has since been crowned a “law.” Some have argued that the pace at which transistor densities double has slowed as we approach the physical limits of silicon. Others contend that Moore’s Law still holds. Regardless of these debates, Moore’s Law will eventually cease to apply.

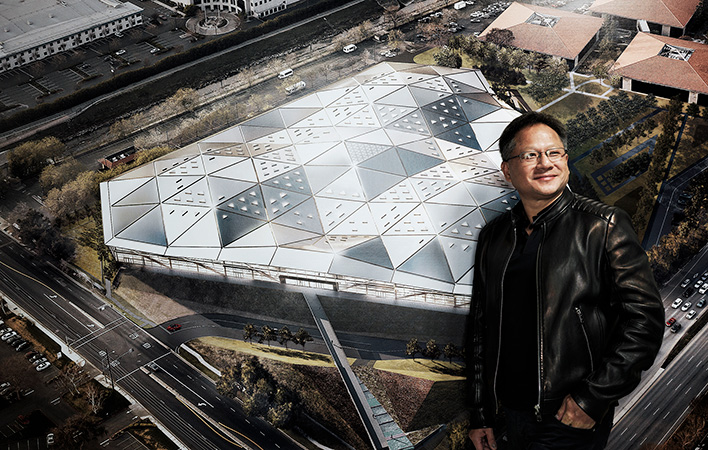

“Huang’s Law” may succeed the famous Moore’s Law. The term was coined by Wall Street Journal journalist Christopher Mims, and the “law” is named for NVIDIA president, CEO, and co-founder Jensen Huang. It posits that the performance of the chips used in artificial intelligence (AI) at least doubles every year. This pace is made possible by a combination of hardware and software developments rather than by transistor density alone.
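To put the two doubling rates described above in perspective, here is a small arithmetic sketch in Python. It assumes idealized, perfectly steady doubling, which neither “law” guarantees, and the function name `growth_factor` is purely illustrative. The doubling periods (two years for Moore’s Law as revised in 1975, one year for Huang’s Law) are taken from the descriptions in this article.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Multiplier after `years` if a quantity doubles every `doubling_period_years`.

    Assumes idealized, steady exponential growth (an illustration, not a forecast).
    """
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    for years in (2, 5, 10):
        every_two_years = growth_factor(years, 2.0)  # Moore's Law as revised in 1975
        every_year = growth_factor(years, 1.0)       # Huang's Law as described above
        print(
            f"{years:>2} years: x{every_two_years:,.1f} (doubling every 2 years) "
            f"vs x{every_year:,.1f} (doubling every year)"
        )
```

Under these assumptions, a decade of doubling every two years yields a 32-fold increase, while a decade of doubling every year yields a 1,024-fold increase. Because Huang’s Law is phrased as “at least” doubling, the second figure would be a lower bound.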

A traditional CPU on its own is generally ineffective at handling the tasks AI requires. GPUs are better suited for these tasks due to their significantly higher core counts and ability to crunch small numbers in parallel. However, even GPUs have their limits, particularly with regard to power consumption.

NVIDIA’s GPUs are already commonly found in data centers, where they are used for deep learning training, the process of teaching an AI model to perform a task such as pattern recognition. This is distinct from AI inference, the application of the learned task, which often takes place on an end device.

GPUs can be, and are, used for inference, but an AI accelerator can be even better, since it is purpose-built to “accelerate” AI workloads. Arm has been working on AI accelerators for several years, as well as a library of machine learning software. This may be one of the reasons that NVIDIA agreed to acquire Arm for $40 billion last week.

This is almost certainly not the only reason that NVIDIA moved to purchase Arm. Nevertheless, the acquisition would give NVIDIA access to Arm’s AI capabilities. Huang’s Law may not have the longevity of Moore’s Law, but it may well have provided some motivation for this kind of acquisition. AI is the future, and companies are acting accordingly.
