An Image Generation AI Created Its Own Secret Language But Skynet Says No Worries

We've playfully referenced Skynet probably a million times over the years (or thereabout), and it's always been in jest, pertaining to some kind of deep learning development or achievement. We're hoping that turns out to be the case again, and that invoking Skynet is just a lighthearted joke about a real development. The alternative? AI is developing a "secret language" and we're all in big trouble once it sees how we humans have been abusing our robot underlords.

After all, it's never a good thing when beings (real or artificial) begin speaking what sounds like gibberish to the uninitiated, but makes total sense to those who are communicating with one another in such a fashion. Like when kids would speak in Pig Latin around their parents (do they still do that?) or other adults. So should we be worried right now?

Probably not, but there is an interesting discussion on Twitter over claims that DALL-E, an OpenAI system that creates images from textual descriptions, is making up its own language.

In the initial Twitter thread, Giannis Daras, a computer science Ph.D. student at the University of Texas at Austin, served up a bunch of supposed examples of DALL-E assigning made-up terms to certain types of images. For example, DALL-E applied gibberish subtitles to an image of two farmers talking about vegetables.

Have a look...

Daras contends that the generated text is not actually nonsensical, as it appears to be at first glance. Instead, the strings of text carry actual meaning when they are plugged back into the AI system on their own as prompts.

"We feed the text 'Vicootes' from the previous image to DALLE-2. Surprisingly, we get (dishes with) vegetables! We then feed the words: 'Apoploe vesrreaitars' and we get birds. It seems that the farmers are talking about birds, messing with their vegetables!" Daras states.

Daras provides a few other examples in the thread, and points readers to a "small paper" summarizing the findings of this supposed hidden language.

DALL-E's AI Gibberish Sparks A Debate

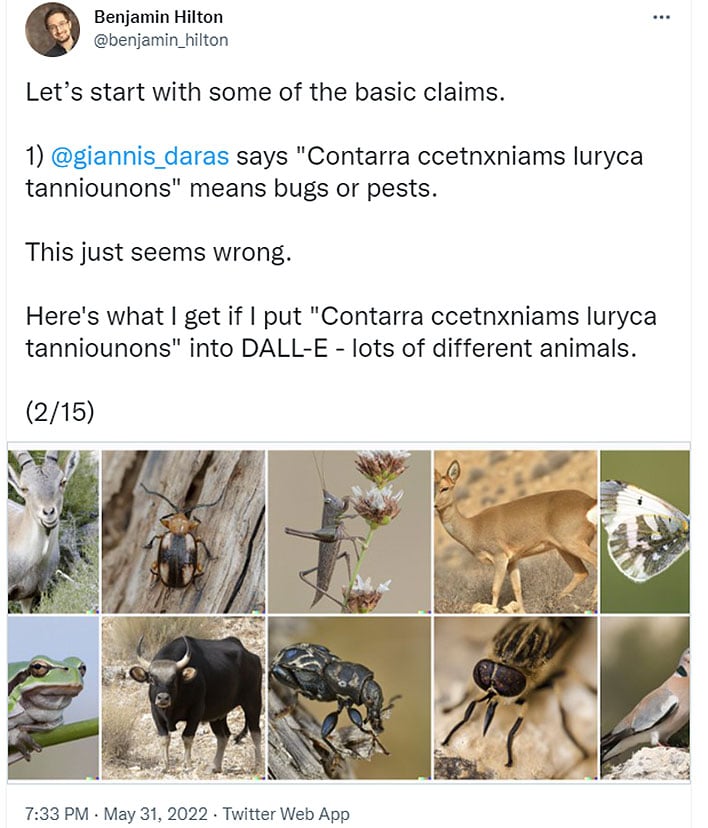

The paper has not been peer reviewed and, in a separate Twitter thread, research analyst Benjamin Hilton calls the findings into question. More than that, Hilton outright claims, "No, DALL-E doesn't have a secret language, or at least, we haven't found one yet."

According to Hilton, the reason the claims in the viral thread are so astounding is that "for the most part, they're not true."

Hilton points out that more complex prompts return very different results. For example, if he adds "3D render" to the above prompt, the AI system returns sea-related things instead of bugs. Likewise, adding "cartoons" to "Contarra ccetnxniams luryca tanniounons" returns pictures of grandmothers instead of bugs.

He offers up more support in his Twitter thread, though he does ultimately concede at the end that something odd is definitely happening.

"To be fair to @giannis_daras, it's definitely weird that 'Apoploe vesrreaitais' gives you birds, every time, despite seeming nonsense. So there's for sure something to this," Hilton says.

Daras responded to the criticisms raised by Hilton and others in yet another Twitter thread, directly addressing some of the counter-claims with more evidence suggesting there is more than meets the eye here.

By our reading, Daras seems to be saying that yes, you can trip up the system, but that doesn't disprove that DALL-E is applying meaning to its gibberish text. It just means you can push past the limits of DALL-E with more difficult queries.

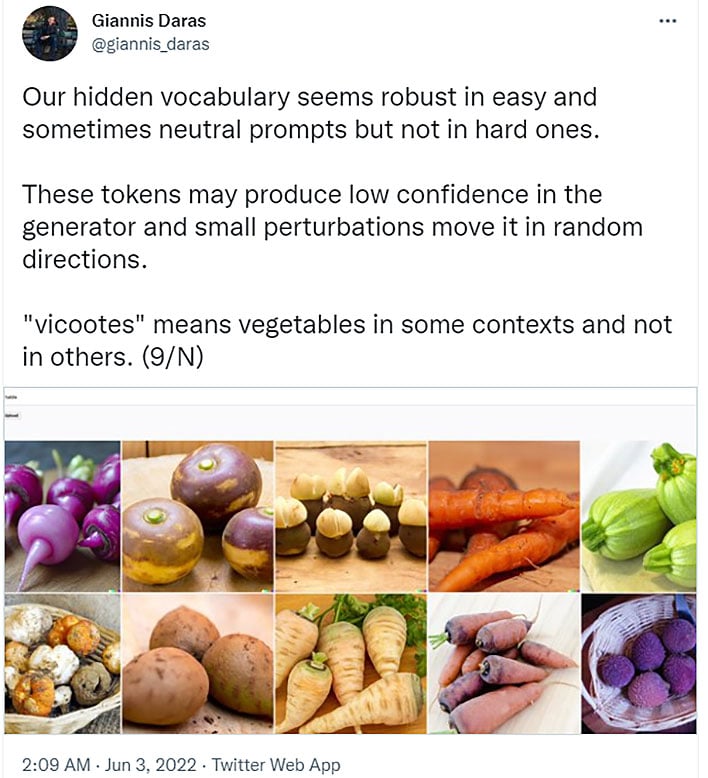

"Our hidden vocabulary seems robust in easy and sometimes neutral prompts but not in hard ones.

"These tokens may produce low confidence in the generator and small perturbations move it in random directions. 'vicootes' means vegetables in some contexts and not in others," Daras says.

"We want to emphasize that this is an adversarial attack and hence does not need to work all the time. If a system behaves in an unpredictable way, even if that happens 1/10 times, that is still a massive security and interpretability issue, worth understanding," Daras adds.

Part of the challenge here is that language is so nuanced, and machine learning so complex. Did DALL-E really create a secret language, as Daras claims, or is this a big ol' nothingburger, as Hilton suggests? It's hard to say, and the real answer could very well lie somewhere in between those extremes.