Intel Sapphire Rapids 10nm Xeons And Ponte Vecchio Xe-HPC GPU Prepare For EPYC Battle

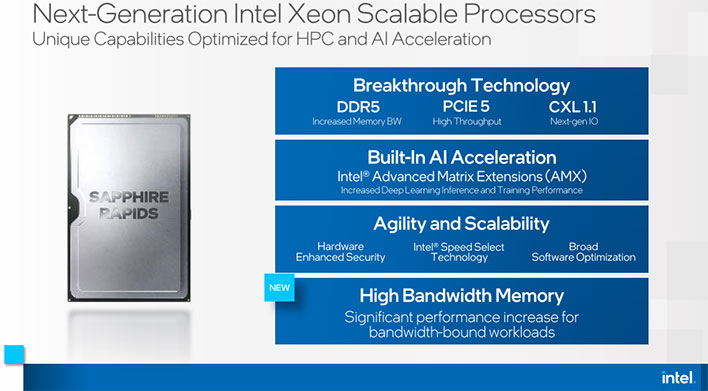

The next generation of Intel's Xeon Scalable processors, codenamed Sapphire Rapids, will feature high bandwidth memory (HBM), the company confirmed at the 2021 International Supercomputing Conference (ISC). Intel says this is intended to provide "a dramatic boost in memory bandwidth," as well as a "significant" performance jump in high performance computing (HPC) applications that run memory bandwidth-sensitive workloads.

It was long rumored that Sapphire Rapids would bring in-package HBM to Intel's big iron for datacenters, and today's announcement lays any doubt to rest. This could benefit a wide range of workloads, including modeling and simulation, artificial intelligence, big data analytics, and in-memory databases, among others that could ultimately lead to scientific breakthroughs.

According to Intel, customer momentum for Sapphire Rapids is strong. As was previously announced, the US Department of Energy's Aurora supercomputer at Argonne National Laboratory will leverage Sapphire Rapids silicon, and so too will the Crossroads supercomputer at Los Alamos National Laboratory.

"Achieving results at exascale requires the rapid access and processing of massive amounts of data," said Rick Stevens, associate laboratory director of Computing, Environment and Life Sciences at Argonne National Laboratory. "Integrating high-bandwidth memory into Intel Xeon Scalable processors will significantly boost Aurora’s memory bandwidth and enable us to leverage the power of artificial intelligence and data analytics to perform advanced simulations and 3D modeling."

Sapphire Rapids essentially grabs the baton from Ice Lake-SP and is built on a 10-nanometer Enhanced SuperFin process, underpinned by Golden Cove cores. This is the same architecture that will power Alder Lake when it arrives, though for Sapphire Rapids, it is optimized specifically for servers.

It features support for DDR5 memory, as well as PCI Express (PCIe) 5.0 and Compute Express Link (CXL) 1.1. And in addition to the memory and I/O advancements, Intel says Sapphire Rapids is optimized for HPC and AI workloads, courtesy of a new built-in AI acceleration engine called Intel Advanced Matrix Extensions (AMX).

According to Intel, AMX is primed to deliver a significant performance increase for deep learning inference and training.

Intel did not announce any specific SKUs or pricing. The closest it came was reiterating, in one of its slides, that its 3rd Gen Xeon Scalable processors based on Ice Lake scale to 40 cores and 80 threads. As for Sapphire Rapids, rumors suggest it might scale to 56 cores and 112 threads (an earlier leak of a stripped Sapphire Rapids CPU highlighted a quad-chiplet design that could technically house up to 80 cores and 160 threads).
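The core and thread counts above follow simple arithmetic. The sketch below assumes two hardware threads per core (Hyper-Threading) and, for the leaked quad-chiplet package, an even split of cores across chiplets; the resulting per-chiplet figure is derived from the rumored 80-core maximum, not something Intel has confirmed. The helper names are hypothetical.

```python
# Minimal sketch relating chiplet count, cores per chiplet, and thread count.
# Assumptions (not confirmed by Intel): 2 threads per core via SMT,
# and cores split evenly across chiplets.

THREADS_PER_CORE = 2  # assumed 2-way SMT (Hyper-Threading)


def total_threads(cores: int, threads_per_core: int = THREADS_PER_CORE) -> int:
    """Hardware threads exposed by a CPU with `cores` SMT-enabled cores."""
    return cores * threads_per_core


def cores_per_chiplet(total_cores: int, chiplets: int) -> int:
    """Cores per chiplet, assuming an even split (raises if it is uneven)."""
    if total_cores % chiplets != 0:
        raise ValueError("cores do not divide evenly across chiplets")
    return total_cores // chiplets


if __name__ == "__main__":
    # Ice Lake-SP top end, as reiterated by Intel
    print("Ice Lake-SP:", 40, "cores /", total_threads(40), "threads")
    # Rumored Sapphire Rapids configuration
    print("Sapphire Rapids (rumor):", 56, "cores /", total_threads(56), "threads")
    # Leaked quad-chiplet package at its theoretical maximum
    per_tile = cores_per_chiplet(80, 4)
    print("Quad-chiplet max:", per_tile, "cores per chiplet,",
          total_threads(80), "threads")
```

Under the same even-split assumption, the rumored 56-core part would work out to 14 cores per chiplet.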

The company also doubled down on previous performance claims, saying its 3rd Gen Xeon Scalable processors crush AMD's latest-generation EPYC chips at equal core counts. By how much? According to Intel, its big iron bests AMD's datacenter silicon by up to 23 percent across a dozen leading HPC applications and benchmarks.

However, a big part of the claim rests on applications and benchmarks that tap into Intel's AVX-512 instructions, which AMD's current EPYC chips do not support. These include Monte Carlo (up to 105 percent better performance), Relion (up to 68 percent), LAMMPS (up to 57 percent), NAMD (up to 62 percent), and Binomial Options (up to 37 percent). AVX-512 gives Intel a performance advantage in specific workloads (which is why Intel likes to showcase these applications), though not across the board. As always, real-world evaluations once these chips are available will tell the whole story.
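Percentage claims like these are easier to compare as speedup ratios: "105 percent better" means roughly 2.05 times the throughput, not a 105 percent cut in runtime. The back-of-the-envelope sketch below, which is an illustration and not Intel's methodology, converts each quoted "up to" figure into a multiplier and an equivalent runtime fraction (assuming performance is inversely proportional to runtime), then takes the geometric mean, the conventional way to average ratios. Because every input is a best case from AVX-512-friendly workloads, that average says nothing about typical performance.

```python
import math

# "Up to" figures quoted above (Intel's best-case claims vs. AMD EPYC).
HEADLINE_GAINS_PERCENT = {
    "Monte Carlo": 105,
    "Relion": 68,
    "LAMMPS": 57,
    "NAMD": 62,
    "Binomial Options": 37,
}


def speedup(gain_percent: float) -> float:
    """'X percent better performance' expressed as a throughput multiplier."""
    return 1.0 + gain_percent / 100.0


def relative_runtime(gain_percent: float) -> float:
    """Fraction of baseline runtime, assuming performance = 1 / runtime."""
    return 1.0 / speedup(gain_percent)


def geometric_mean(values) -> float:
    """Geometric mean: the usual way to average ratios such as speedups."""
    values = list(values)
    return math.exp(sum(math.log(v) for v in values) / len(values))


if __name__ == "__main__":
    for name, gain in HEADLINE_GAINS_PERCENT.items():
        print(f"{name:17s} {speedup(gain):.2f}x speed, "
              f"{relative_runtime(gain):.0%} of baseline runtime")
    gm = geometric_mean(speedup(g) for g in HEADLINE_GAINS_PERCENT.values())
    print(f"Geomean of these five best cases: {gm:.2f}x")
```

A geometric mean is used rather than an arithmetic mean because it treats a 2x gain and a 0.5x loss as cancelling out, which an arithmetic average of ratios does not.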

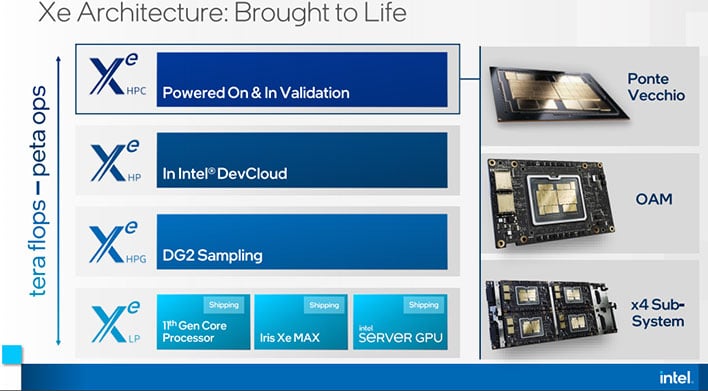

Intel's Xe-HPC (Ponte Vecchio) GPU Is Currently Being Validated

Intel also provided an update on its Xe-HPC GPU codenamed Ponte Vecchio. Intel powered the chip on earlier this year, and Ponte Vecchio is now undergoing system validation.

Built on a 7-nanometer manufacturing process, Ponte Vecchio figures to be Intel's first exascale GPU, aimed squarely at the HPC segment. It is also a physically big chip, featuring over 100 billion transistors and 47 compute tiles (or "magical tiles," as Intel calls them) that serve different purposes.

"Ponte Vecchio is an Xe architecture-based GPU optimized for HPC and AI workloads. It will leverage Intel’s Foveros 3D packaging technology to integrate multiple IPs in-package, including HBM memory and other intellectual property," Intel says. "The GPU is architected with compute, memory, and fabric to meet the evolving needs of the world’s most advanced supercomputers, like Aurora."

The plan is to release Ponte Vecchio in an OCP Accelerator Module (OAM) form factor and as subsystems, though no release date or pricing was given.

It's going to be an interesting year with Sapphire Rapids and Ponte Vecchio entering the datacenter scene. The datacenter sector is a lucrative one, and competition between Intel and AMD has never been fiercer. It will be worth watching which SKUs become available, and how Sapphire Rapids pricing compares with AMD's EPYC 7003 series lineup.