Intel Unveils Xeon CPU Max And Data Center GPU Max For An AI And HPC 1-2 Punch

Lately, Intel has faced stiff competition in what have traditionally been its strongest markets: the data center and the enterprise. NVIDIA muscled in on Intel's territory long ago with its powerful GPU compute accelerators, and now the green team is pushing its own CPUs alongside its GPUs. On the other side is AMD, whose Instinct accelerators pair well with its own massive EPYC CPUs. Intel needs a solid one-two punch to regain lost ground in its most important market, and it looks like that punch is coming in the form of Sapphire Rapids HBM Xeons and Ponte Vecchio accelerators.

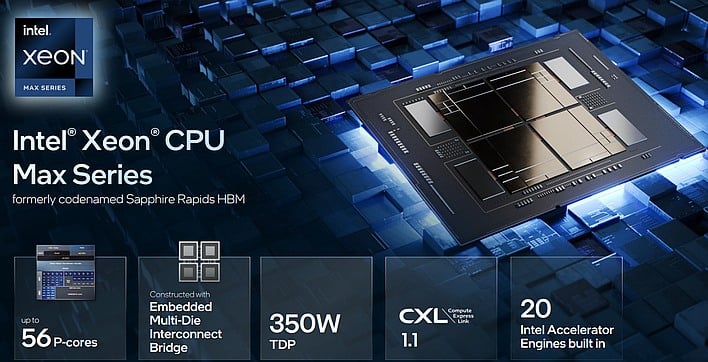

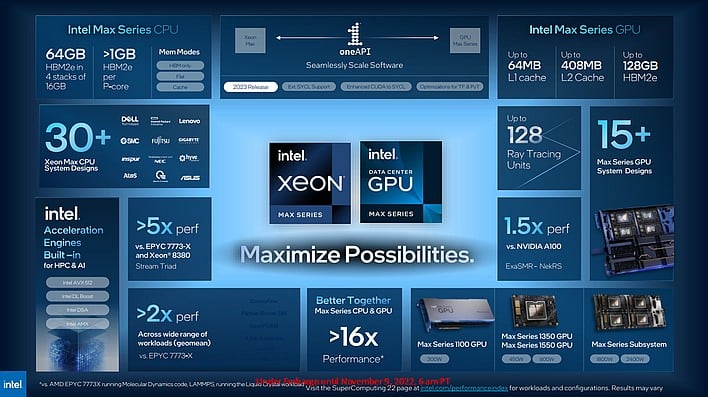

The Intel Xeon CPU Max processors, formerly codenamed Sapphire Rapids HBM, offer up to 56 P-cores across four tiles. Power targets are in the 350-watt range, and you get 64 GB of on-package HBM2e memory, as well as PCIe 5.0 and support for Compute Express Link (CXL) 1.1. The HBM2e provides "about 1 TB/sec" of memory bandwidth to the CPU cores. Intel isn't talking clock rates (we'd expect them to be relatively low given 56 "Golden Cove" cores sharing a 350W TDP), but it does claim that the chips can deliver "up to 4.8x better performance compared to the competition on real-world HPC workloads."
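To put those numbers in perspective, here is a quick back-of-the-envelope split of the HBM2e capacity and bandwidth across every core. It assumes a fully populated 56-core part, treats "about 1 TB/sec" as an even 1,000 GB/s, and divides both resources evenly, which real workloads won't do.

```python
# Figures quoted above; 1 TB/s is rounded to 1000 GB/s (assumption).
HBM_CAPACITY_GB = 64
HBM_BANDWIDTH_GBPS = 1000
CORES = 56


def per_core_share(total: float, cores: int = CORES) -> float:
    """Split a socket-wide resource evenly across all cores."""
    return total / cores


capacity_per_core = per_core_share(HBM_CAPACITY_GB)
bandwidth_per_core = per_core_share(HBM_BANDWIDTH_GBPS)

print(f"HBM capacity per core:  {capacity_per_core:.2f} GB")
print(f"HBM bandwidth per core: {bandwidth_per_core:.1f} GB/s")
```

That works out to a little over 1 GB of HBM and roughly 18 GB/s of bandwidth per core when every core is busy.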

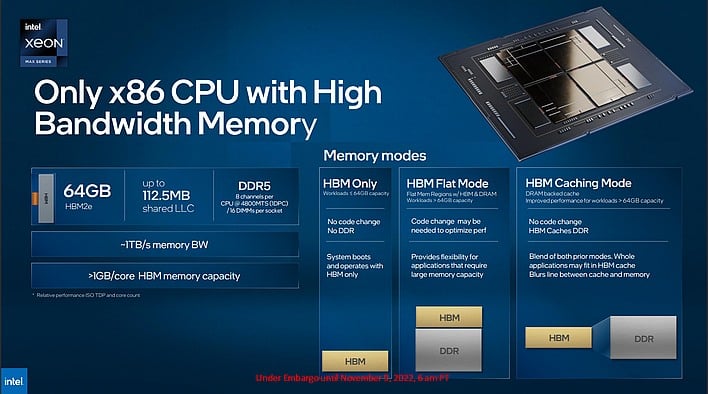

The onboard HBM2e memory isn't the only RAM available to these chips, to be clear. They can also be paired with up to 16 DDR5 DIMMs per socket, though that's entirely optional. Intel explains that the chips can run in HBM-only mode, HBM caching mode, or HBM flat mode. HBM-only is self-explanatory: the 64GB of HBM is the only system memory. HBM caching mode uses the 64GB of HBM as a humongous cache in front of the much larger DDR5 capacity, which can reach up to 6TB per socket. HBM flat mode, meanwhile, exposes the full capacity of both memory pools, but Intel says it may require platform-specific optimizations to ensure that data which needs to stay in HBM actually does.
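The three modes differ mainly in which memory tier ends up servicing a given access. The toy model below illustrates that difference. It is a sketch, not Intel's implementation: the `serve` function, the 64-byte line size, the direct-mapped cache organization, and the flat-mode address layout (HBM at low addresses, DDR5 above it) are all simplifying assumptions made for illustration.

```python
from enum import Enum

GIB = 1 << 30
HBM_BYTES = 64 * GIB                 # on-package HBM2e per socket
LINE_BYTES = 64                      # assumed caching granularity
HBM_LINES = HBM_BYTES // LINE_BYTES  # number of cache slots in the HBM


class Mode(Enum):
    HBM_ONLY = "HBM-only"
    CACHE = "HBM caching"
    FLAT = "HBM flat"


def serve(addr: int, mode: Mode, ddr_bytes: int, tags: dict) -> str:
    """Report which tier services a read of byte address `addr` (hypothetical model)."""
    if mode is Mode.HBM_ONLY:
        # No DDR5 installed: the HBM is the entire address space.
        if addr >= HBM_BYTES:
            raise ValueError("address beyond HBM capacity")
        return "HBM"
    if mode is Mode.FLAT:
        # Both pools are visible to software; in this toy layout the HBM
        # occupies the low addresses and DDR5 everything above it.
        if addr >= HBM_BYTES + ddr_bytes:
            raise ValueError("address beyond HBM + DDR5 capacity")
        return "HBM" if addr < HBM_BYTES else "DDR5"
    # Caching mode: software sees only DDR5; HBM transparently caches it.
    if addr >= ddr_bytes:
        raise ValueError("address beyond DDR5 capacity")
    line = addr // LINE_BYTES
    slot, tag = line % HBM_LINES, line // HBM_LINES  # direct-mapped lookup
    if tags.get(slot) == tag:
        return "HBM (hit)"
    tags[slot] = tag  # fill the slot on a miss, evicting its previous line
    return "DDR5 (miss)"


tags = {}
ddr = 512 * GIB
print(serve(0, Mode.CACHE, ddr, tags))          # first touch misses
print(serve(0, Mode.CACHE, ddr, tags))          # repeat access hits in HBM
print(serve(HBM_BYTES, Mode.CACHE, ddr, tags))  # maps to slot 0, evicts line 0
print(serve(HBM_BYTES, Mode.FLAT, ddr, {}))     # flat mode: plain DDR5 access
```

The caching-mode branch shows why that mode needs no code changes but can thrash when two hot buffers map to the same slots, while flat mode puts placement decisions in the hands of software, which matches Intel's note about platform-specific optimizations.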

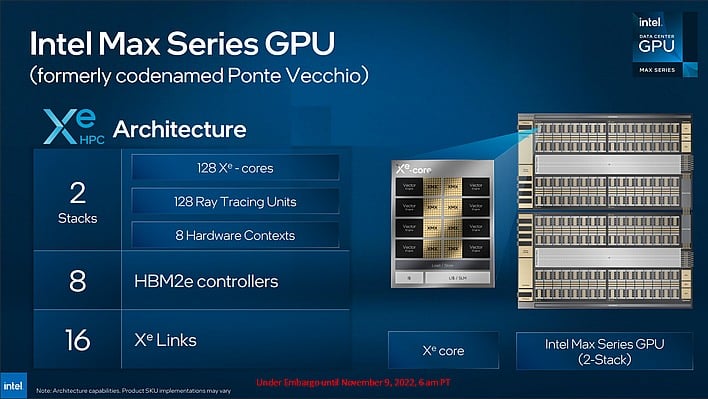

Meanwhile, the Intel Max Series GPU is a whole other animal. It's based on the Xe-HPC architecture, a close relative of the Xe-HPG design used in Intel's Arc Alchemist gaming GPUs. The processor formerly known as Ponte Vecchio sports up to 128 Xe-cores with eight separate hardware contexts, eight HBM2e controllers, and 16 Xe Link connections to link up with its siblings.

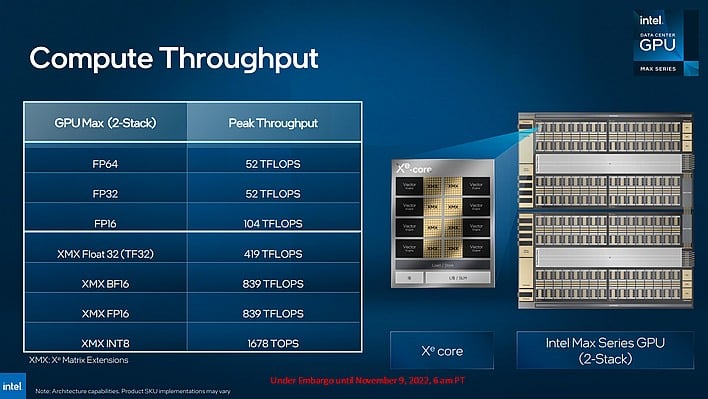

A single Max Series Data Center GPU can combine two Ponte Vecchio compute stacks on one package, doubling up on resources for essentially double the performance in compute workloads. Intel says that one of these packages can deliver 104 TFLOPS of FP16 compute, or half that amount (52 TFLOPS) in FP32 or FP64. For tensor operations, its XMX matrix engines can propel it to 839 TFLOPS in BF16 or FP16 format, or 1,678 TOPS in INT8.
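Intel's peak figures hang together arithmetically: halving the FP16 vector rate gives the FP32/FP64 rate, and the INT8 tensor rate is exactly double the BF16/FP16 tensor rate. This short sketch reproduces that arithmetic; the variable names are illustrative only.

```python
# Peak figures Intel quotes for one Max Series GPU package.
vector_tflops = {"FP16": 104.0}
# FP32 and FP64 vector throughput is half the FP16 rate.
vector_tflops["FP32"] = vector_tflops["FP16"] / 2
vector_tflops["FP64"] = vector_tflops["FP16"] / 2

xmx_bf16_tflops = 839.0              # XMX matrix engines, BF16/FP16
xmx_int8_tops = 2 * xmx_bf16_tflops  # INT8 runs at twice the BF16/FP16 rate

# How much the XMX path adds over plain FP16 vector math.
xmx_gain = xmx_bf16_tflops / vector_tflops["FP16"]

print(vector_tflops)
print(f"INT8: {xmx_int8_tops:.0f} TOPS; XMX gain over FP16 vector: {xmx_gain:.1f}x")
```

The last line makes the point of the XMX units concrete: matrix-friendly work such as AI training and inference gets roughly eight times the throughput of the same precision run through the vector pipelines.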

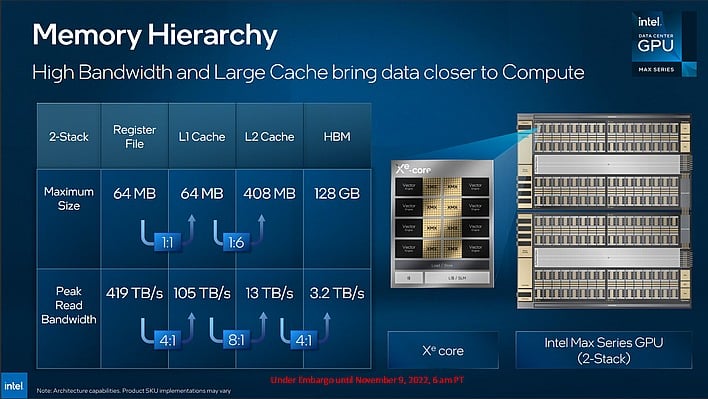

The Max Series Data Center GPU has twice as many HBM2e memory controllers as its CPU cousin, so it comes with as much as twice the HBM2e memory: up to 128GB. That interface moves data at up to 3.2 TB/sec, and Intel also boasts that the chips carry 408MB of L2 cache moving data at 13 TB/sec. Intel claims that the large cache delivers a 50% performance jump in 4K x 4K Fast Fourier Transforms. In specific workloads, Intel says, the Max Series Data Center GPU can outpace NVIDIA's A100 accelerator by 50%.
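A quick size check suggests why a 4K x 4K FFT plausibly benefits from that much cache: a single 4096 x 4096 complex array fits within 408MB in both single and double precision, so the transform's passes can stay mostly on-chip rather than streaming through HBM. The sketch assumes "408MB" means decimal megabytes, treats the L2 as one pool (a simplification), and ignores twiddle factors and scratch buffers.

```python
L2_BYTES = 408 * 10**6  # "408MB", assumed decimal and treated as one pool
N = 4096                # 4K x 4K transform


def fft_array_bytes(n: int, bytes_per_element: int) -> int:
    """Size of one n x n complex array (ignores twiddles and scratch space)."""
    return n * n * bytes_per_element


results = {}
for dtype, size in (("complex64", 8), ("complex128", 16)):
    nbytes = fft_array_bytes(N, size)
    results[dtype] = nbytes
    print(f"{dtype}: {nbytes / 2**20:.0f} MiB, fits in L2: {nbytes <= L2_BYTES}")

bandwidth_ratio = 13 / 3.2  # L2 TB/s versus HBM2e TB/s
print(f"L2 offers about {bandwidth_ratio:.1f}x the HBM2e bandwidth")
```

The single-precision array needs 128 MiB and the double-precision one 256 MiB, both under the quoted cache size, and data served from L2 moves roughly four times faster than data pulled from HBM2e.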

Ponte Vecchio is coming to market in three forms: a 300W PCIe add-in card (AIC) with 56 Xe-cores and 48GB of HBM2e memory, a 450W OAM module with 112 Xe-cores and 96GB of HBM2e, and the full-fat 600W OAM device with the above-spec'd 128 Xe-cores and 128GB of HBM2e. Intel will also offer OAM carrier boards with four Max Series GPUs connected via Xe Link for even greater throughput.
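Normalizing the three configurations per Xe-core makes the trade-offs easier to compare. The `MaxGpuSku` class below is a hypothetical container for the published specs, and the carrier-board total assumes all four slots hold the 600W, 128GB part, which Intel hasn't spelled out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MaxGpuSku:
    """Hypothetical record of one Max Series GPU configuration."""
    form_factor: str
    watts: int
    xe_cores: int
    hbm_gb: int


SKUS = [
    MaxGpuSku("PCIe AIC", 300, 56, 48),
    MaxGpuSku("OAM", 450, 112, 96),
    MaxGpuSku("OAM (full)", 600, 128, 128),
]

for s in SKUS:
    print(f"{s.form_factor}: {s.watts / s.xe_cores:.2f} W and "
          f"{s.hbm_gb / s.xe_cores:.2f} GB of HBM2e per Xe-core")

# Four full-spec GPUs on one OAM carrier board (assumes the 600W part).
board_hbm_gb = 4 * SKUS[-1].hbm_gb
print(f"Carrier board total: {board_hbm_gb} GB of HBM2e")
```

By this measure the 450W OAM module has the smallest power budget per Xe-core, while the full 600W part is the only one with a full gigabyte of HBM2e per Xe-core.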