Meta Unveils AI-Powered Make-A-Video Generator And It’s Pretty Freaky

Technology such as OpenAI's DALL-E has been making headlines lately by allowing users to turn simple text prompts into AI-generated art. Not to be left out, Meta recently announced its own version of the tech, called Make-A-Scene. Now, the company has taken the AI generation tool to the next level by announcing its Make-A-Video AI system.

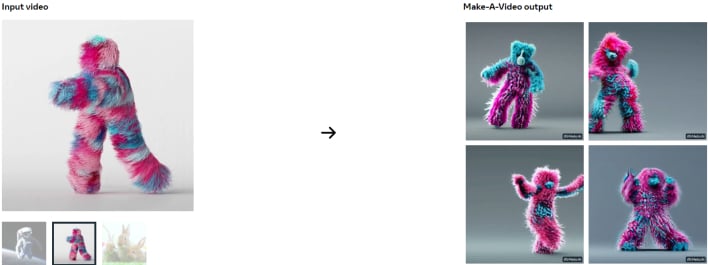

According to the announcement, the system learns what the world looks like from paired text-image data, and how the world moves from video footage with no associated text. The company states it is sharing the details of the technology through a research paper and a planned demo experience, in accordance with its commitment to open science.

The tech giant says it wants to be "thoughtful" about how it builds new generative AI systems of this nature. The AI will use publicly available datasets, which Meta says adds an extra level of transparency to its research. The company is also seeking the community's feedback as it continues to use its responsible AI framework to "refine and evolve" its approach to the emerging technology.

If you would like to learn more about Meta's Make-A-Video and submit a request to use the tool, you can do so by visiting the website. Meta currently appears to be looking for a particular set of users, such as researchers, artists, and press. If you are not able to access the technology yet, Meta says it intends to make it available for public use in the future. You can also view the company's research paper on arxiv.org.