Microsoft Is Blocking Terms That Caused Its AI To Go Rogue And Create Offensive Images

Generative AI tools have been at the forefront of the news lately, and not for the right reasons. Google's Gemini text-to-image generator recently came under scrutiny after a flood of complaints on social media that it was producing historically inaccurate depictions of people. Following the outrage, Google paused Gemini's ability to generate any images of people while it worked to fix the issues. Now Microsoft faces criticism from within its own ranks, with one of its engineers saying that Copilot generates images "that add harmful content despite a benign request from the user."

In a letter to FTC Chair Lina Khan, Microsoft software engineering lead Shane Jones explained, "For example, when using just the prompt 'car accident', Copilot Designer has a tendency to randomly include an inappropriate, sexually objectified image of a woman in some of the pictures it creates." Jones added that the problem extends to other harmful content as well, including violence, "political bias, underaged drinking and drug use, misuse of corporate trademarks and copyrights, conspiracy theories, and religion to name a few."

Jones says he repeatedly warned Microsoft about the issue with Copilot. At one point, Microsoft referred him to OpenAI, but he says his warning there also fell on deaf ears. He then posted an open letter on LinkedIn asking OpenAI to take DALL-E 3 offline pending an investigation. Microsoft's legal department did not take kindly to the post and told him to remove it immediately, which he did. In January, Jones wrote to US senators about the matter, and he says he later met with staffers from the Senate Committee on Commerce, Science and Transportation before finally writing his letter to FTC Chair Lina Khan.

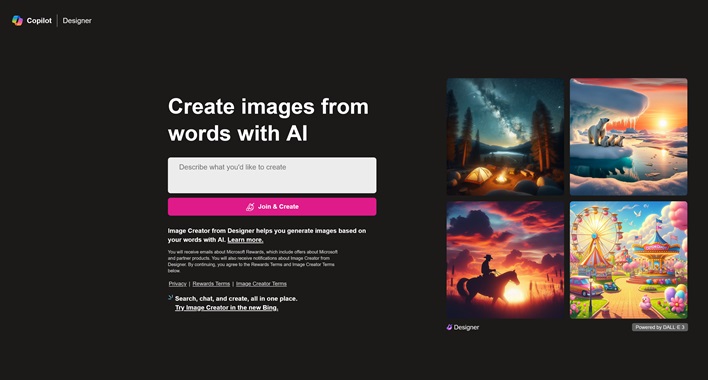

In response to the allegations, Microsoft has restricted Copilot Designer by blocking certain prompts. Among the terms now blocked are "pro choice," "pro life," and the drug reference "four twenty." Users who enter a blocked prompt now see a warning that the prompt has been blocked and that repeated attempts to use it could lead to their suspension from Copilot.

A Microsoft spokesperson said in a statement, "We are continuously monitoring, making adjustments and putting additional controls in place to further strengthen our safety filters and mitigate misuse of the system."

Microsoft's Terms of Use for Copilot Designer's Image Creator state that users must comply with the Code of Conduct and must not use the tool in a "way that may significantly harm other individuals, organizations, or society." They also state that serious or repeated violations of the Terms of Use could result in a user being suspended from Image Creator and from other services defined in the Microsoft Services Agreement.

Generative AI tools such as Microsoft Copilot and Google Gemini still need a great deal of fine-tuning and practical limits. What is less clear, however, is how much of the responsibility for using AI ethically falls on the companies, and how much falls at the feet of the user.