NVIDIA Unveils 144-Core Grace CPU Superchip, NVLink-C2C Interconnect To Shred HPC Workloads

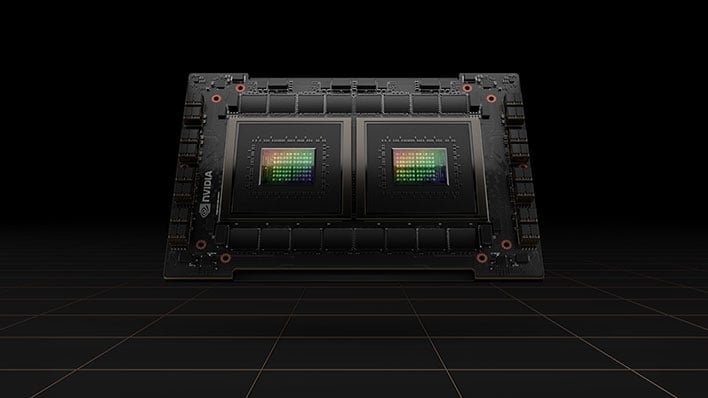

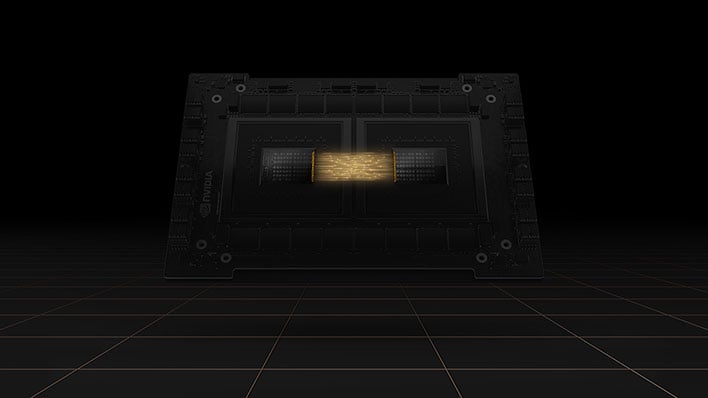

NVIDIA is expanding its CPU design efforts with the introduction of its Grace CPU Superchip, its first discrete data center CPU for high performance computing (HPC) workloads. It's actually two processors linked together in the same package. The chips connect coherently over NVLink-C2C, a new high-speed, low-latency, chip-to-chip interconnect that NVIDIA also unveiled today at GTC 2022.

The Grace CPU Superchip is intended to complement NVIDIA's Grace Hopper Superchip, a hybrid CPU-GPU integrated module introduced last year for giant-scale HPC and AI applications. Both solutions share the same underlying Arm Neoverse CPU architecture, and both also employ NVIDIA's new high-speed interconnect.

"A new type of data center has emerged—AI factories that process and refine mountains of data to produce intelligence," said Jensen Huang, founder and CEO of NVIDIA. "The Grace CPU Superchip offers the highest performance, memory bandwidth and NVIDIA software platforms in one chip and will shine as the CPU of the world’s AI infrastructure."

By wedging two CPUs in the same package, the Grace CPU Superchip serves up 144 Arm cores in a single socket. NVIDIA claims a 1.5x performance uplift compared with the dual CPUs currently shipping in the DGX A100, based on an estimated score of 740 on the SPECrate 2017_int_base benchmark.
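To put NVIDIA's claim in context, a bit of back-of-envelope arithmetic helps. The sketch below simply works backward from the two figures NVIDIA gave (an estimated score of 740 and a 1.5x uplift) to the score the DGX A100's dual CPUs would implicitly need, and divides the total across 144 cores. These are NVIDIA's estimates, not measured results, and the per-core figure is a crude normalization of a throughput benchmark, not a single-thread score.

```python
# Back-of-envelope arithmetic on NVIDIA's SPECrate 2017_int_base claim.
# Inputs are NVIDIA's own estimates as quoted in the article, not measurements.
GRACE_SPECRATE_EST = 740.0  # estimated score for the Grace CPU Superchip
CLAIMED_UPLIFT = 1.5        # claimed uplift vs. the DGX A100's dual CPUs
GRACE_CORES = 144           # total Arm cores across both dies in the package


def implied_baseline(score: float, uplift: float) -> float:
    """Score the comparison system would need for `score` to be `uplift` times higher."""
    return score / uplift


def per_core(score: float, cores: int) -> float:
    """Throughput score spread evenly across all cores (a rough normalization)."""
    return score / cores


baseline = implied_baseline(GRACE_SPECRATE_EST, CLAIMED_UPLIFT)
print(f"Implied DGX A100 dual-CPU score: ~{baseline:.0f}")
print(f"Grace estimated score per core: ~{per_core(GRACE_SPECRATE_EST, GRACE_CORES):.2f}")
```

Working backward this way puts the implied DGX A100 baseline at roughly 493, which is only as reliable as NVIDIA's estimate.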

Beyond the raw performance metrics, NVIDIA is also touting substantial memory bandwidth gains. The Grace CPU Superchip employs the first LPDDR5X memory with Error Correcting Code (ECC). It delivers 1TB/s of memory bandwidth (double that of traditional DDR5 designs) while consuming less power: the entire CPU, including memory, consumes 500 watts, NVIDIA says.
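The bandwidth and power figures are easier to weigh when normalized. The sketch below divides NVIDIA's quoted 1TB/s across the 144 cores and the 500-watt CPU-plus-memory budget, and shows the DDR5 baseline that "double the bandwidth" implies. It assumes decimal units (1TB/s = 1,000GB/s), which is how vendors typically quote bandwidth; the article does not specify.

```python
# Normalizing NVIDIA's quoted memory figures.
# Assumption: 1 TB/s is treated as 1000 GB/s (decimal units).
MEM_BW_GB_S = 1000.0      # quoted LPDDR5X memory bandwidth
PACKAGE_POWER_W = 500.0   # CPU plus memory, per NVIDIA
CORES = 144               # Arm cores in the Grace CPU Superchip


def per_unit(total: float, divisor: float) -> float:
    """Share of `total` per unit of `divisor` (e.g. GB/s per core or per watt)."""
    return total / divisor


bw_per_core = per_unit(MEM_BW_GB_S, CORES)            # GB/s available per core
bw_per_watt = per_unit(MEM_BW_GB_S, PACKAGE_POWER_W)  # GB/s per watt of package power
ddr5_implied = MEM_BW_GB_S / 2                        # baseline implied by "double DDR5"

print(f"Bandwidth per core: ~{bw_per_core:.2f} GB/s")
print(f"Bandwidth per watt: {bw_per_watt:.1f} GB/s/W")
print(f"Implied traditional DDR5 bandwidth: ~{ddr5_implied:.0f} GB/s")
```

That works out to roughly 6.9GB/s per core and 2GB/s per watt, with the DDR5 comparison implying a baseline of about 500GB/s.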

"The Grace CPU Superchip is based on the latest data center architecture, Arm v9. Combining the highest single-threaded core performance with support for Arm’s new generation of vector extensions, the Grace CPU Superchip will bring immediate benefits to many applications," NVIDIA adds.

NVIDIA NVLink-C2C Interconnect Enables Coherent Bandwidth Of Up To 900GB/s

A key part of the design is the NVLink-C2C interconnect, which enables coherent bandwidth of up to 900GB/s. It's built on NVIDIA's SERDES and LINK design technologies, and it scales from PCB-level integrations and multi-chip modules to silicon interposer and wafer-level connections. According to NVIDIA, its home-brewed interconnect is up to 25x more energy efficient and 90x more area efficient than PCIe Gen 5 on its chips when using advanced packaging.
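NVIDIA's efficiency multiples can be read the other way around: as the fraction of PCIe Gen 5's cost needed to move the same data. The sketch below assumes "efficiency" means bandwidth delivered per unit of energy or silicon area, which the article doesn't define, and treats NVIDIA's "up to" figures as best cases.

```python
# Converting NVIDIA's claimed efficiency multiples into relative costs.
# Assumption: efficiency = bandwidth per unit of energy (or area), so cost scales as 1/multiple.
ENERGY_EFFICIENCY_X = 25.0  # "up to 25x more energy efficient than PCIe Gen 5"
AREA_EFFICIENCY_X = 90.0    # "up to 90x more area efficient than PCIe Gen 5"


def relative_cost(efficiency_multiple: float) -> float:
    """Fraction of the PCIe Gen 5 cost (energy or area) for equal bandwidth."""
    return 1.0 / efficiency_multiple


energy_fraction = relative_cost(ENERGY_EFFICIENCY_X)
area_fraction = relative_cost(AREA_EFFICIENCY_X)

print(f"Energy per bit vs. PCIe Gen 5: {energy_fraction:.1%}")
print(f"Area per unit of bandwidth vs. PCIe Gen 5: {area_fraction:.1%}")
```

Under that reading, NVLink-C2C would move a given amount of data for about 4% of the energy and roughly 1.1% of the silicon area.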

"Chiplets and heterogeneous computing are necessary to counter the slowing of Moore’s law," said Ian Buck, vice president of Hyperscale Computing at NVIDIA. "We’ve used our world-class expertise in high-speed interconnects to build uniform, open technology that will help our GPUs, DPUs, NICs, CPUs and SoCs create a new class of integrated products built via chiplets."

Interconnect designs are a big deal, and perhaps underappreciated compared to the CPU and GPU specs that hog the limelight. But they're what enable fancy and viable multi-chip module (MCM) designs, like Apple's M1 Ultra (aimed at a very different market) and NVIDIA's Grace CPU and Grace Hopper CPU-GPU Superchips.

NVIDIA is also touting industry-standard support: its interconnect works with Arm's AMBA CHI and Intel's CXL protocols for interoperability between devices. And what of the developing Universal Chiplet Interconnect Express (UCIe) standard announced earlier this month? It has the backing of Arm, AMD, Intel, and others, and NVIDIA confirmed it will support it as well.

"Custom silicon integration with NVIDIA chips can either use the UCIe standard or NVLink-C2C, which is optimized for lower latency, higher bandwidth and greater power efficiency," NVIDIA said in no uncertain terms.

NVLink-C2C and UCIe are two different interconnect protocols, and the Grace CPU Superchip supports both. That affords customers added flexibility for semi-custom solutions. For example, if a companion chip or IP block has a UCIe interface, a customer would not be precluded from choosing NVIDIA's Grace CPU Superchip. NVLink-C2C figures to be the better-performing solution, though, with pin speeds of up to 45Gbps on the PCB/MCM versus 31.5Gbps on UCIe. It also offers greater power efficiency, based on NVIDIA's claims.
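The per-pin gap matters because it determines how many signal lanes a link needs for a given bandwidth. As an illustration only, the sketch below compares the two quoted pin speeds and estimates the minimum lane count needed to carry 900GB/s at each. It treats 900GB/s as a single aggregate figure and ignores encoding and protocol overhead; the article doesn't say whether that number is per direction or total, or how many lanes NVIDIA actually uses.

```python
import math

# Illustrative lane-count comparison using the pin speeds quoted in the article.
# Assumptions: 900 GB/s treated as one aggregate figure; no encoding/protocol overhead.
C2C_PIN_GBPS = 45.0    # NVLink-C2C pin speed on PCB/MCM
UCIE_PIN_GBPS = 31.5   # UCIe pin speed
LINK_BW_GB_S = 900.0   # NVLink-C2C coherent bandwidth


def lanes_needed(bandwidth_gb_s: float, pin_gbps: float) -> int:
    """Minimum lanes to carry `bandwidth_gb_s` (gigabytes/s) at `pin_gbps` (gigabits/s) each."""
    return math.ceil(bandwidth_gb_s * 8 / pin_gbps)


speed_ratio = C2C_PIN_GBPS / UCIE_PIN_GBPS
print(f"Per-pin speed ratio: {speed_ratio:.2f}x")
for name, speed in (("NVLink-C2C", C2C_PIN_GBPS), ("UCIe", UCIE_PIN_GBPS)):
    print(f"{name}: at least {lanes_needed(LINK_BW_GB_S, speed)} lanes for 900GB/s")
```

On those simplified terms, NVLink-C2C's roughly 1.43x per-pin advantage translates into about 160 lanes versus 229 for the same raw bandwidth.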

Either way, the Grace CPU Superchip promises to run all of NVIDIA's computing software stacks, including NVIDIA RTX, HPC, AI, and Omniverse. Both it and the previously announced Grace Hopper Superchip are expected to be available in the first half of 2023.