NVIDIA A100 Ampere GPU Early Benchmarks Crush Turing In Rendering Performance

There is already plenty of excitement as NVIDIA prepares to bring its Ampere GPU architecture to the consumer market with its next round of GeForce RTX graphics cards. Adding to the hype is an early look at some benchmarks, albeit for the machine learning version of Ampere (A100). Compared to Turing, the benchmark data shows Ampere way out ahead.

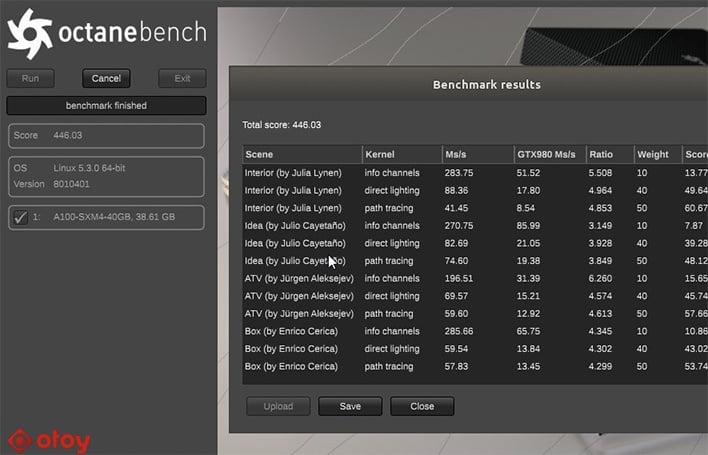

The benchmark data was shared on Twitter by Jules Urbach, CEO and co-founder of OTOY, a cloud graphics company. He ran the Ampere GPU through a round of testing in OctaneBench, which is based on OTOY's OctaneRender application.

Here is a look at the results...

"A record breaking week.

The NVIDIA A100 has now become the fastest GPU ever recorded on #OctaneBench: 446 OB4*

#Ampere appears to be ~43 percent faster than #Turing in #OctaneRender - even w/ #RTX off! (*standard Linux OB4 benchmark, RTX off, recompiled for CUDA11, ref. 980=102 OB)," Urbach wrote on Twitter.

As far as we know, this is the first real and verified (read: not leaked) benchmark data for Ampere. That said, it is not entirely clear which Turing GPU Urbach used as the baseline for the 43 percent figure, but assuming a comparable card, that is an impressive gain.

At present, Ampere exists only in machine learning form, as the A100 that NVIDIA introduced in May. It represents a shift to 7-nanometer manufacturing and is an absolute beast on paper, with 54 billion transistors, 108 streaming multiprocessors, 432 Tensor cores, and 40GB of HBM2 memory with a maximum memory bandwidth of roughly 1.6 terabytes per second.

What arrives in the consumer space for gaming will be a bit different, but still based on the same Ampere architecture. Will we see an uplift in the neighborhood of 43 percent in rasterized rendering over Turing? That might be overly optimistic, particularly since OctaneBench measures GPU path tracing rather than rasterization, but we'll have to wait and see.