NVIDIA A100 Ampere GPU Launches With Massive 80GB HBM2e For Data Hungry AI Workloads

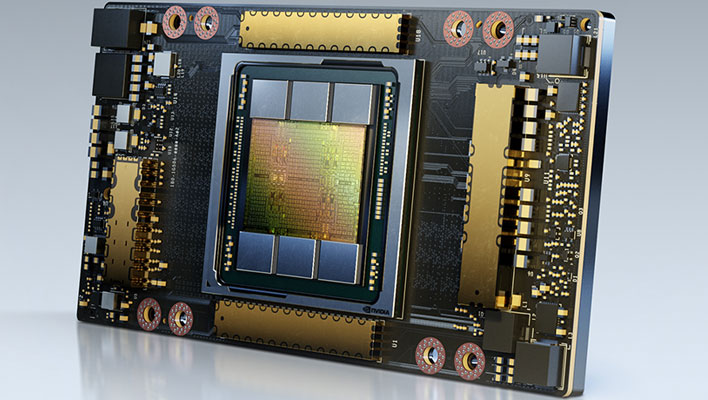

NVIDIA's Ampere-based A100 was already considered the go-to GPU for high performance computing (HPC), and any challengers hoping to knock the part off its perch will now have to contend with a version that has twice as much high-bandwidth memory as before: a massive 80GB HBM2e memory buffer. Yeah, it's like that.

The first version of the A100 with 40GB of HBM2 debuted in May and marked the official introduction of Ampere, NVIDIA's latest generation GPU architecture. It is still available, of course, and boasts 1.6TB/s of memory bandwidth to tackle HPC applications. The latest iteration, however, kicks things up a notch with a lot more memory and more bandwidth.

According to NVIDIA, the A100 80GB delivers over 2 TB/s of memory bandwidth "to unlock the next wave of AI and scientific breakthroughs." NVIDIA is also pitching it as the world's fastest data center GPU, saying it is ideal for a wide range of applications with enormous memory requirements, such as weather forecasting and quantum chemistry.

"Achieving state-of-the-art results in HPC and AI research requires building the biggest models, but these demand more memory capacity and bandwidth than ever before," said Bryan Catanzaro, vice president of applied deep learning research at NVIDIA. "The A100 80GB GPU provides double the memory of its predecessor, which was introduced just six months ago, and breaks the 2TB per second barrier, enabling researchers to tackle the world’s most important scientific and big data challenges."

It is a big upgrade in memory. Simply doubling the capacity would be a big deal on its own, but the memory is also faster (hence the HBM2e designation), running at a 3.2Gbps per-pin data rate versus 2.4Gbps on the A100 40GB. That is where the added bandwidth comes from. Other specs remain the same, including 19.5 TFLOPS of single-precision (FP32) performance and 9.7 TFLOPS of double-precision (FP64) performance. The memory bus width also remains at 5,120 bits.
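As a rough sanity check on how the faster memory turns into more bandwidth: theoretical peak bandwidth is the bus width multiplied by the per-pin data rate, divided by eight bits per byte. The short Python sketch here plugs in the article's rounded figures; the function name `peak_bandwidth_gbs` is just an illustrative helper, not anything from NVIDIA.

```python
# Theoretical peak memory bandwidth:
#   bus width (bits) x per-pin data rate (Gbps) / 8 bits per byte = GB/s
BUS_WIDTH_BITS = 5120  # unchanged between the 40GB and 80GB A100, per the article


def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Return theoretical peak bandwidth in GB/s (illustrative helper)."""
    return bus_width_bits * data_rate_gbps / 8


# Rounded per-pin data rates quoted in the article.
variants = [("A100 40GB (HBM2)", 2.4), ("A100 80GB (HBM2e)", 3.2)]

for name, rate in variants:
    bw = peak_bandwidth_gbs(BUS_WIDTH_BITS, rate)
    print(f"{name}: {bw:.0f} GB/s (~{bw / 1000:.2f} TB/s)")
```

With these inputs the 80GB card works out to roughly 2,048GB/s, in line with NVIDIA's "over 2 TB/s" claim, while the 40GB card lands near 1.54TB/s. The small gap versus the quoted 1.6TB/s comes from rounding in the per-pin figure, since the bus width is identical on both parts.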

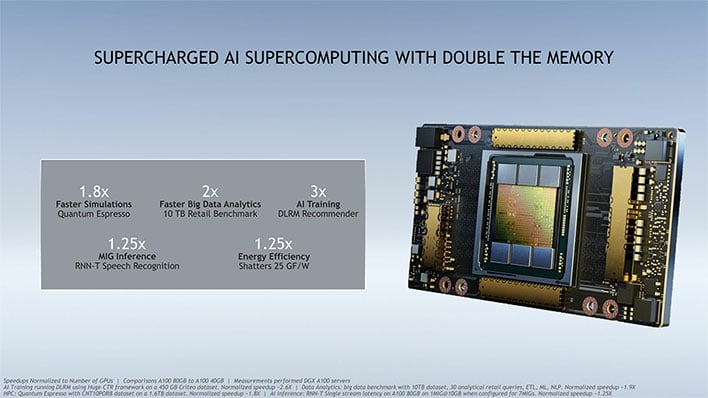

NVIDIA provided some performance examples based on a handful of benchmarks. The biggest gain is a 3x improvement in AI training on the DLRM recommender model, followed by a 2x gain in big data analytics. These are no doubt cherry-picked benchmarks, but it is a welcome upgrade for customers who can tap into the performance potential of the A100 80GB.

The upgraded GPU has already found its way into NVIDIA's new DGX Station A100. Four of them, actually. It is basically a data center in a box, and the four A100 80GB GPUs deliver 2.5 petaflops of AI performance while being kept cool by a "maintenance free refrigerant cooling system."
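A quick back-of-the-envelope breakdown helps put the DGX Station's headline numbers in context. The Python sketch here only divides and multiplies figures already quoted in the article; the variable names are illustrative. The takeaway is that the 2.5 petaflops "AI performance" figure cannot come from the 19.5 TFLOPS FP32 rate, so it most likely counts Tensor Core throughput at reduced precision (that interpretation is our assumption, not something stated in NVIDIA's announcement).

```python
# Back-of-the-envelope breakdown of the DGX Station A100 figures quoted above.
GPUS = 4
MEM_PER_GPU_GB = 80          # A100 80GB
SYSTEM_AI_PFLOPS = 2.5       # NVIDIA's headline "AI performance" for the station
FP32_TFLOPS_PER_GPU = 19.5   # standard single-precision rate per A100

# Total GPU memory across the four cards.
total_memory_gb = GPUS * MEM_PER_GPU_GB

# Headline AI throughput attributed to each GPU, in TFLOPS.
ai_tflops_per_gpu = SYSTEM_AI_PFLOPS * 1000 / GPUS

# What four GPUs would deliver at the plain FP32 rate.
fp32_system_tflops = GPUS * FP32_TFLOPS_PER_GPU

print(f"Total GPU memory: {total_memory_gb} GB")
print(f"Headline AI throughput per GPU: {ai_tflops_per_gpu:.0f} TFLOPS")
print(f"Plain FP32 throughput for all four GPUs: {fp32_system_tflops:.1f} TFLOPS")
```

That works out to 320GB of HBM2e in total and about 625 TFLOPS of "AI performance" per GPU, roughly 32 times the combined FP32 rate, which is why the headline number is best read as a reduced-precision Tensor Core figure.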

The DGX Station A100 will be available this quarter.