NVIDIA Calls BS On AMD's H100 Versus MI300X Performance Claims, Shares Benchmarks

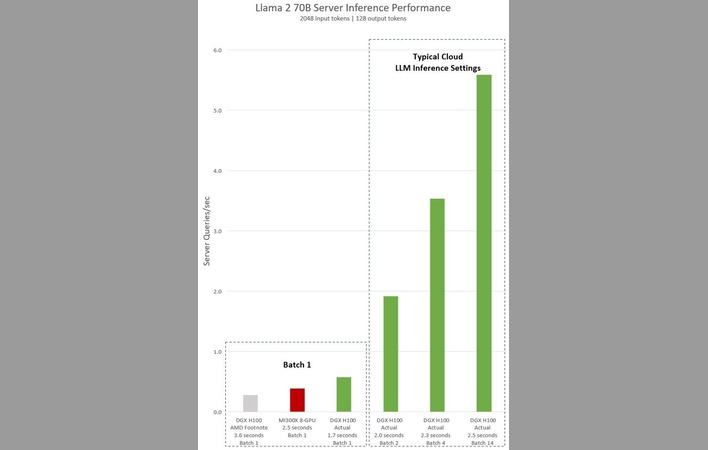

NVIDIA shares that “DGX H100 can process a single inference in 1.7 seconds using a batch size of one—in other words, one inference request at a time. A batch size of one results in the fastest possible response time for serving a model.”

In addition to refuting AMD's claims, NVIDIA brought receipts, sharing a graph that shows what it says is the actual performance of its combined hardware and software stack. The data highlights the performance results of a DGX H100 server using eight H100 GPUs on the Llama 2 70B model.

Figure 1. Llama 2 70B server inference performance in queries per second with 2,048 input tokens and 128 output tokens for “Batch 1” and various fixed response time settings. Courtesy of NVIDIA.
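To put the quoted Batch 1 figure in the chart's units, here is a rough back-of-the-envelope sketch. It assumes requests are served strictly one after another with no overlap, so throughput is simply the inverse of the 1.7-second latency. The function name `batch1_throughput` is ours for illustration and does not come from NVIDIA's scripts.

```python
def batch1_throughput(latency_s: float, output_tokens: int) -> tuple[float, float]:
    """Return (queries per second, output tokens per second).

    Assumes strictly sequential batch-size-1 serving: one request
    finishes before the next one starts.
    """
    if latency_s <= 0:
        raise ValueError("latency must be positive")
    qps = 1.0 / latency_s
    return qps, output_tokens * qps


# 1.7 s per inference (NVIDIA's quoted figure), 128 output tokens per query
qps, tps = batch1_throughput(1.7, 128)
print(f"{qps:.2f} queries/s, {tps:.1f} output tokens/s")
# prints "0.59 queries/s, 75.3 output tokens/s"
```

The tokens-per-second number is an end-to-end average that also includes the time spent processing the 2,048 input tokens, so it understates the raw generation speed. Larger batch sizes trade a longer response time for higher queries per second, which is the trade-off shown by the fixed response time settings in the chart.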

Anyone wanting to validate these claims will be able to do so because NVIDIA is sharing the information necessary to reproduce the results. The blog post contains the command lines for the scripts used by NVIDIA to build its model, alongside the benchmarking scripts used to gather the data.

It’s surprising that AMD didn’t make sure the data it shared was as accurate as it needed to be, as it was only a matter of time until NVIDIA or LLM enthusiasts fact-checked it. If AMD wants to gain ground on NVIDIA in the race for AI market share, these types of mistakes need to be eliminated.