NVIDIA Confirms Beastly Ampere A100 PCIe GPU Accelerator With 80GB HBM2e Memory

NVIDIA kicked off its 7-nanometer Ampere party with the A100, a massively powerful machine learning GPU that began shipping to customers a little over a year ago. The company would later flesh out its machine learning lineup with an 80GB model, though up until now, it has only been offered in the SXM4 form factor. That is about to change with the introduction of a PCI Express variant.

The A100 is the biggest and baddest (in a good way) version of Ampere. NVIDIA packed 54 billion transistors onto a die measuring 826mm². It has a total of 6,912 FP32 CUDA cores, 432 Tensor cores, and 108 streaming multiprocessors. FP32 performance checks in at a staggering 19.5 teraFLOPS, which is only half the story.
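For readers who want to see where the 19.5 teraFLOPS figure comes from, here is a rough back-of-the-envelope sketch. The core, Tensor core, and SM counts are the ones quoted above. The 1.41GHz boost clock and the two-FLOPs-per-clock factor (one fused multiply-add per core per cycle) are assumptions brought in for illustration and do not appear in this article.

```python
# Figures quoted in the article.
CUDA_CORES = 6912
TENSOR_CORES = 432
SM_COUNT = 108

# Assumptions for this sketch (not stated in the article):
BOOST_CLOCK_GHZ = 1.41        # assumed boost clock
FLOPS_PER_CORE_PER_CLOCK = 2  # one fused multiply-add counts as two FLOPs


def peak_fp32_tflops(cores, clock_ghz, flops_per_clock=FLOPS_PER_CORE_PER_CLOCK):
    """Theoretical peak FP32 throughput in teraFLOPS."""
    gigaflops = cores * flops_per_clock * clock_ghz
    return gigaflops / 1000.0


tflops = peak_fp32_tflops(CUDA_CORES, BOOST_CLOCK_GHZ)
cores_per_sm = CUDA_CORES // SM_COUNT
tensor_cores_per_sm = TENSOR_CORES // SM_COUNT

print(f"Peak FP32: {tflops:.1f} TFLOPS")
print(f"FP32 CUDA cores per SM: {cores_per_sm}")
print(f"Tensor cores per SM: {tensor_cores_per_sm}")
```

Under those assumptions the estimate rounds to the quoted 19.5 teraFLOPS. It is a theoretical peak, not a measure of what any real workload sustains.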

There is also memory performance to consider. Out of the gate, the A100 rocked 40GB of HBM2 memory with a maximum memory bandwidth of 1.6TB/s—probably enough to run Crysis, if you will oblige us to revive a stale joke.

"NVIDIA A100 GPU is a 20x AI performance leap and an end-to-end machine learning accelerator, from data analytics to training to inference," NVIDIA founder and CEO Jensen Huang said last year. "For the first time, scale-up and scale-out workloads can be accelerated on one platform. NVIDIA A100 will simultaneously boost throughput and drive down the cost of data centers."

A few months after the initial launch, NVIDIA came out with a version of the A100 rocking twice as much memory: 80GB of upgraded HBM2e, with the per-pin memory data rate increased from 2.4Gbps to 3.2Gbps. This cranked up the memory bandwidth to 2TB/s. And now it's going to be offered in a PCIe form factor.
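The jump in bandwidth follows directly from the faster memory. As a minimal sketch, peak bandwidth is the per-pin data rate multiplied by the width of the memory bus. The 2.4Gbps and 3.2Gbps rates come from the paragraph above, while the 5,120-bit bus width is an assumption for illustration that the article does not state.

```python
# Assumption for this sketch (not stated in the article): 5,120-bit memory bus.
BUS_WIDTH_BITS = 5120


def bandwidth_gb_per_s(pin_rate_gbps, bus_width_bits=BUS_WIDTH_BITS):
    """Peak memory bandwidth in GB/s from the per-pin data rate in Gbps."""
    total_gbits_per_s = pin_rate_gbps * bus_width_bits
    return total_gbits_per_s / 8  # 8 bits per byte


hbm2_gbs = bandwidth_gb_per_s(2.4)   # original 40GB model
hbm2e_gbs = bandwidth_gb_per_s(3.2)  # 80GB model
uplift = hbm2e_gbs / hbm2_gbs

print(f"HBM2  @ 2.4Gbps: ~{hbm2_gbs / 1000:.2f} TB/s")
print(f"HBM2e @ 3.2Gbps: ~{hbm2e_gbs / 1000:.2f} TB/s")
print(f"Uplift: {uplift:.2f}x")
```

With that assumed bus width, the results land close to the roughly 1.6TB/s and 2TB/s figures quoted for the two models, a gain of about one third.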

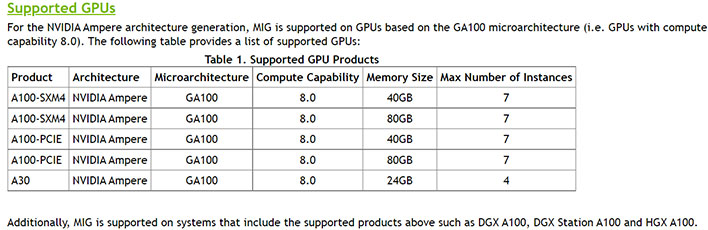

NVIDIA has not actually announced the new PCIe option for its A100 with 80GB of HBM2e memory, but it has updated its table of supported GPUs in its Data Center Documentation to reflect the new part, as shown above. Rumor has it the PCIe version will officially launch sometime next week.

What's the big deal here? Offering the higher-end part in a PCIe form factor expands the A100's potential footprint to include those who want to harness the power of NVIDIA's top Ampere solution in a standard server.

The core specifications are the same between the SXM4 and PCIe variants, though the latter has a lower TDP: 250W, compared to 400W. We assume the same will be true of the 80GB model that is headed to PCIe land. It makes sense, given that PCIe models end up in spaces where cooling is not quite as robust.

Having a much lower TDP opens the door to a drop in sustained performance for the PCIe model compared to the SXM4 variants. It should not be a drastic reduction, though—according to NVIDIA, the PCIe models should deliver 90 percent of the performance of the SXM4 models. Not too shabby when you're dealing with a 38 percent lower TDP.
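To put those two numbers side by side, here is a quick sketch of the arithmetic. It uses only the figures quoted above (250W versus 400W, and NVIDIA's 90 percent claim) and treats TDP as a stand-in for actual power draw, which is a simplifying assumption.

```python
# Figures quoted in the article.
SXM4_TDP_W = 400
PCIE_TDP_W = 250
PCIE_RELATIVE_PERF = 0.90  # NVIDIA's claim: PCIe delivers 90% of SXM4 performance

# Fraction by which the PCIe card's TDP is lower than the SXM4 module's.
tdp_reduction = 1 - PCIE_TDP_W / SXM4_TDP_W

# Relative performance per watt, assuming power draw tracks TDP
# (a simplification; real draw depends on the workload).
perf_per_watt_gain = PCIE_RELATIVE_PERF / (PCIE_TDP_W / SXM4_TDP_W)

print(f"TDP reduction: {tdp_reduction:.1%}")
print(f"Performance per watt vs. SXM4: {perf_per_watt_gain:.2f}x")
```

The TDP reduction works out to 37.5 percent, which rounds to the 38 percent cited, and on paper the PCIe card delivers roughly 1.44 times the performance per watt of its SXM4 sibling.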