NVIDIA Is Working On A GPU Technology That Delivers A Massive Ray Tracing Performance Lift

When NVIDIA first released its "RTX" real-time ray-tracing graphics cards onto the world, the general reaction to the "what's-old-is-new-again" hybrid rendering paradigm was mostly a resounding "meh" because of the hefty performance hit it incurred on the then-new Turing-based GeForce RTX 2000 series. Most players felt that the fancy effects weren't worth the cost of half (or more) of your framerate.

The GeForce RTX 3000 series based on "Ampere" softened the blow considerably, with nearly double the ray tracing performance of "Turing." Likewise, newer titles have implemented ray tracing in smarter ways, whether by using it to more visible effect like in Fortnite, or by basing the whole rendering pipeline on it, like in Metro Exodus Enhanced Edition.

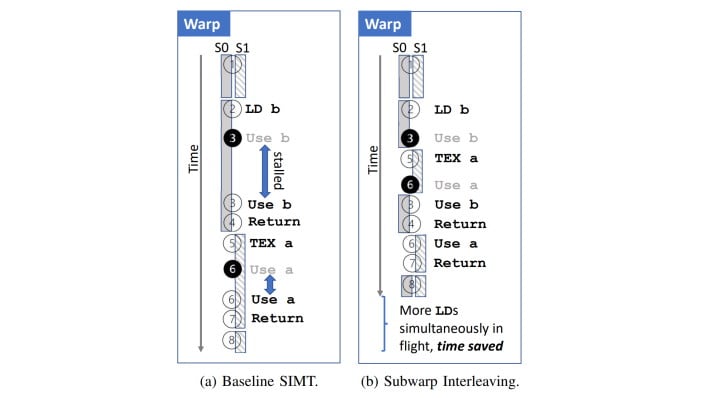

To break it down just a little, GPUs are massively parallel processors that compute things in threads just like CPUs, although they run a lot more threads than a CPU typically does. These threads are grouped into units called "warps," which perform "single instruction, multiple thread" (SIMT) execution from a single program counter. GPUs hide stalls in the pipeline by keeping lots of warps in flight at once and switching to a ready warp whenever another one stalls.
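To put a rough number on that latency hiding, here is a back-of-the-envelope sketch in Python. It assumes an idealized scheduler in which every warp alternates between a fixed burst of issue cycles and a fixed stall; the function name and the cycle counts are made up for illustration and do not come from NVIDIA's paper.

```python
def issue_utilization(num_warps, busy_cycles, stall_cycles):
    """Fraction of cycles in which the scheduler has an instruction to issue.

    Idealized model (an assumption): each warp issues for `busy_cycles`,
    then waits `stall_cycles`, and the scheduler can switch warps for free.
    """
    per_warp = busy_cycles / (busy_cycles + stall_cycles)
    return min(1.0, num_warps * per_warp)


# Hypothetical numbers: 4 cycles of work followed by a 20-cycle stall.
for warps in (1, 3, 6, 12):
    print(warps, "warps ->", round(issue_utilization(warps, 4, 20), 2))
```

With these made-up numbers, a single warp keeps the issue slot busy only about a sixth of the time, while six or more warps cover the stall completely.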

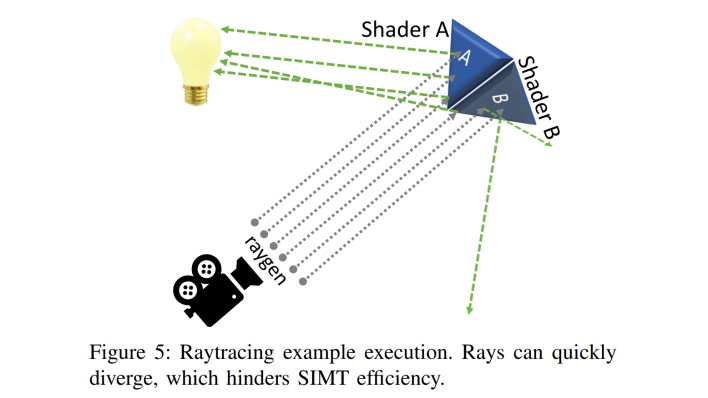

The issues with ray tracing, as described in the paper, are twofold. For one, ray tracing is inherently divergent: the threads in a warp often take different code paths, so they don't necessarily share the same program counter. Threads on different paths can't execute in SIMT lockstep, so the hardware has to run the paths one after another, seriously crippling performance. The other issue is that ray tracing doesn't generate a lot of warps, which means that the traditional approach for hiding stalls doesn't work.
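The cost of divergence can be sketched with a toy model in Python. It assumes that a diverged warp simply runs each distinct path in turn while the lanes on other paths sit idle; the path names and instruction counts are invented for the example, and real hardware is more nuanced.

```python
def simt_issue_slots(thread_paths, path_cost):
    """Issue slots one warp needs when each distinct path runs in turn."""
    return sum(path_cost[path] for path in set(thread_paths))


def lane_utilization(thread_paths, path_cost):
    """Fraction of lane-slots doing useful work while the warp executes."""
    useful = sum(path_cost[path] for path in thread_paths)
    issued = simt_issue_slots(thread_paths, path_cost) * len(thread_paths)
    return useful / issued


WARP_SIZE = 32
path_cost = {"hit": 10, "miss": 4}  # made-up instruction counts per path

coherent = ["hit"] * WARP_SIZE                 # every ray takes the same path
divergent = ["hit"] * 16 + ["miss"] * 16       # half the rays miss

print(simt_issue_slots(coherent, path_cost), lane_utilization(coherent, path_cost))
print(simt_issue_slots(divergent, path_cost), lane_utilization(divergent, path_cost))
```

In this made-up case the divergent warp needs 14 issue slots instead of 10 and keeps only half of its lane-slots busy.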

The paper goes on to say that while developers in the past have worked on minimizing this divergence, NVIDIA's researchers have instead figured out a way to leverage the divergence to the GPU's benefit. Put simply, their new technique, called "Sub-warp Interleaving," increases occupancy of the GPU's functional units, reducing overall execution time and thus improving performance.
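The general idea can be illustrated with a toy scheduler in Python. This is only a sketch under simplifying assumptions, not the paper's actual mechanism: each diverged path (a "sub-warp") is a list of instructions, each instruction is represented by the number of stall cycles that follow it (0 for simple math, a larger number for something like a memory fetch), and switching between sub-warps is free. All names and numbers are hypothetical.

```python
def serial_cycles(subwarps):
    """Baseline: run each diverged path to completion, idling on every stall."""
    return sum(1 + stall for ops in subwarps for stall in ops)


def interleaved_cycles(subwarps):
    """Issue from another ready sub-warp of the same warp when one stalls."""
    pc = [0] * len(subwarps)        # next instruction index per sub-warp
    ready_at = [0] * len(subwarps)  # cycle at which each sub-warp can issue again
    now = 0
    while True:
        pending = [i for i, ops in enumerate(subwarps) if pc[i] < len(ops)]
        if not pending:
            break
        ready = [i for i in pending if ready_at[i] <= now]
        if not ready:
            # Every remaining sub-warp is stalled: skip ahead to the first wake-up.
            now = min(ready_at[i] for i in pending)
            continue
        i = ready[0]
        stall = subwarps[i][pc[i]]
        pc[i] += 1
        now += 1                    # one cycle to issue the instruction
        ready_at[i] = now + stall   # sub-warp is blocked until its stall ends
    return max([now] + ready_at)


# Two diverged paths, each with one long (20-cycle) stall. Made-up numbers.
paths = [[0, 20, 0, 0], [0, 20, 0, 0]]
print(serial_cycles(paths), interleaved_cycles(paths))
```

In this contrived case the two stalls overlap almost completely, so the warp finishes in 26 cycles instead of 48. That is far more than the gains reported for real workloads, because the toy ignores everything else going on in the GPU; a warp with a single path sees no change at all.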

How much? In the researchers' own tests using a modified Turing architecture in a bare-metal simulator, they found improvements of approximately 6.3% on average, with a maximum benefit of around 20%. That's not huge in terms of the average uplift, but it's not nothing; a 6.3% bump in ray-tracing performance would certainly be welcome, and a 20% bump at the top end is pretty massive. Unfortunately, we're unlikely to see sub-warp interleaving in a shipping product anytime soon.

As mentioned, the researchers demonstrated the technique using a modified Turing architecture. That's right: this isn't something that can be shipped in a driver update. It requires implementation at the hardware level, and given that the paper was just published, it's fairly unlikely to make its way into NVIDIA's next-generation Ada Lovelace architecture, or possibly even the one after that. It's still encouraging to see researchers pursuing algorithmic improvements to performance instead of just pushing the power envelope, though.