NVIDIA's Beastly Grace CPU Superchip Arm Servers To Thrash AI And HPC Workloads In 1H 2023

We tend to think of NVIDIA as a gaming GPU company because of its robust and constantly evolving GeForce lineup, but its data center business is nearly as big in terms of revenue. So it should come as no surprise that at Computex 2022, NVIDIA was eager to talk about its Grace CPU Superchip and Grace Hopper Superchip design wins.

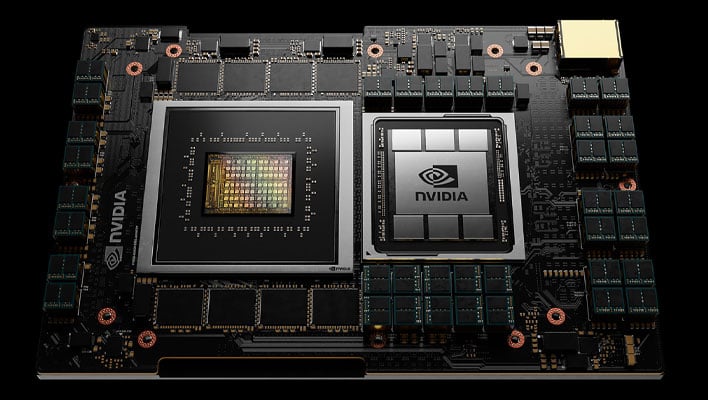

Announced just a few weeks ago, NVIDIA's Grace CPU Superchip is the company's first discrete data center CPU for high performance computing (HPC) workloads. It actually consists of two processors in a single package, connected coherently over NVLink-C2C, a new high-speed, low-latency chip-to-chip interconnect.

"A new type of data center has emerged—AI factories that process and refine mountains of data to produce intelligence," Jensen Huang, founder and CEO of NVIDIA, said at the time. "The Grace CPU Superchip offers the highest performance, memory bandwidth and NVIDIA software platforms in one chip and will shine as the CPU of the world’s AI infrastructure."

That comment was made at GTC 2022. Fast forward to Computex 2022 and NVIDIA is showing off a handful of reference designs, which have already been adopted by some pretty big names in the tech industry.

More specifically, NVIDIA says the likes of ASUS, Foxconn, Gigabyte, QCT, Supermicro, and Wiwynn will ship dozens of Grace CPU Superchip and Grace Hopper Superchip models based on its reference designs in the first half of next year.

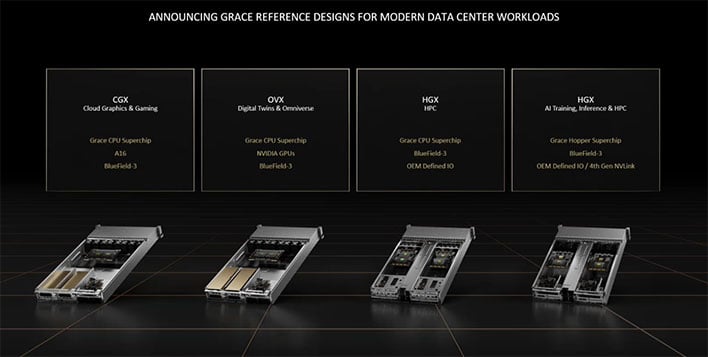

There are four reference designs, which serve as blueprints for server baseboards that ODMs and OEMs can tailor to their needs and bring to market quickly. Here's a high-level overview of the four designs:

- NVIDIA HGX Grace Hopper systems for AI training, inference and HPC are available with the Grace Hopper Superchip and NVIDIA BlueField-3 DPUs.

- NVIDIA HGX Grace systems for HPC and supercomputing feature the CPU-only design with Grace CPU Superchip and BlueField-3.

- NVIDIA OVX systems for digital twins and collaboration workloads feature the Grace CPU Superchip, BlueField-3 and NVIDIA GPUs.

- NVIDIA CGX systems for cloud graphics and gaming feature the Grace CPU Superchip, BlueField-3 and NVIDIA A16 GPUs.

There is a range of data center workloads that these 1U and 2U rack systems can handle, from cloud gaming and Omniverse applications to AI training and inference.

NVIDIA provided some additional details on two of the 2U high-density reference designs. On the left, the HGX Grace blade wields a dual Grace CPU Superchip with up to 1TB of LPDDR5X memory providing up to 1TB/s of memory bandwidth. This can be air or liquid cooled, and supports up to 84 nodes per rack.

Then on the right, there's the HGX Grace Hopper Superchip blade with 512GB of LPDDR5X and 80GB of HBM3 memory providing up to a whopping 3.5TB/s of memory bandwidth. It has a 1000W TDP and can likewise be air or liquid cooled, with support for up to 42 nodes per rack.

In case anyone is wondering, NVIDIA is not abandoning x86 solutions, even though this is a major play on its part. It will continue to offer and support CGX, OVX, and HGX systems with x86 CPUs from AMD and Intel.

As for its Grace CPU Superchip and Grace Hopper Superchip reference designs, NVIDIA expects the first OEM server certifications to come shortly after partner systems ship next year.