NVIDIA Hopper H100 Data Center GPU With Monstrous 120GB HBM3 Spotted In The Wild

NVIDIA's Hopper GPU isn't coming to a graphics card near you. Despite being called a "GPU", it's really a compute accelerator meant for supercomputers and massive workstations. NVIDIA announced the chip way back in March, but it only recently entered full production, and the first parts from the family have yet to hit the market. Even so, one fellow claims to have a Hopper PCIe add-in card with 120GB of RAM onboard.

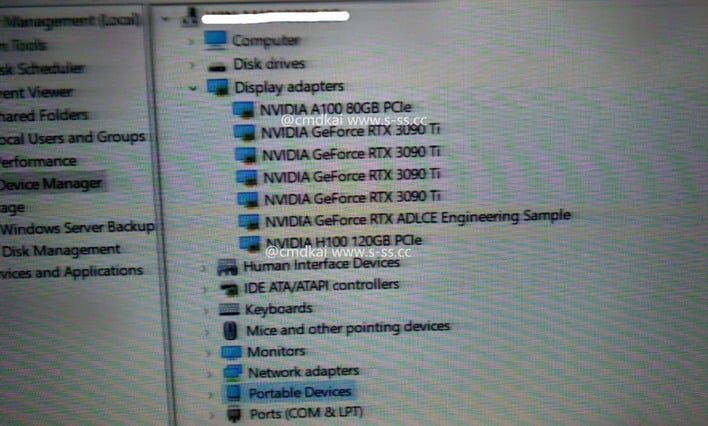

This screenshot was posted to s-ss.cc by the blog's author, Kaixin (@cmdkai on Twitter), who claims to have acquired it from a "mysterious friend." It's quite a shocking image, appearing to show the Windows Device Manager on a system with no fewer than seven NVIDIA GPUs: four GeForce RTX 3090 Ti cards, an 80GB A100 accelerator, an "RTX ADLCE Engineering Sample", and finally, an "NVIDIA H100 120GB PCIe".

The RTX ADLCE Engineering Sample entry is interesting, particularly since the screenshot is supposedly a few weeks old. While one could easily surmise that "ADLCE" denotes an Ada Lovelace sample, Kaixin says it is specifically an RTX 4090 engineering sample limited to 350 watts. According to him, that limit puts its single-precision compute performance at "only" around 70 TFLOPS, which is about what we predicted for that GPU running at 2 GHz.
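As a rough sanity check on that figure, assume the usual convention for NVIDIA peak-rate specs: each CUDA core (FP32 lane) retires one fused multiply-add, counted as two FLOPs, per clock. Under that assumption, 70 TFLOPS at 2 GHz implies roughly 17,500 FP32 lanes. The helper below is a hypothetical back-of-envelope sketch, not anything from NVIDIA or the leak.

```python
# Assumption: peak FP32 rate = 2 FLOPs (one FMA) per CUDA core per clock,
# the convention NVIDIA uses for its published TFLOPS figures.
FLOPS_PER_CORE_PER_CLOCK = 2


def fp32_cores_for(tflops: float, clock_ghz: float) -> float:
    """Hypothetical helper: FP32 cores needed to hit `tflops` at `clock_ghz`."""
    return (tflops * 1e12) / (FLOPS_PER_CORE_PER_CLOCK * clock_ghz * 1e9)


cores = fp32_cores_for(70.0, 2.0)
print(f"~{cores:,.0f} FP32 cores")  # ~17,500 FP32 cores
```

The result is only a ballpark: real clocks under a 350-watt cap will fluctuate, so the implied core count shifts accordingly.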

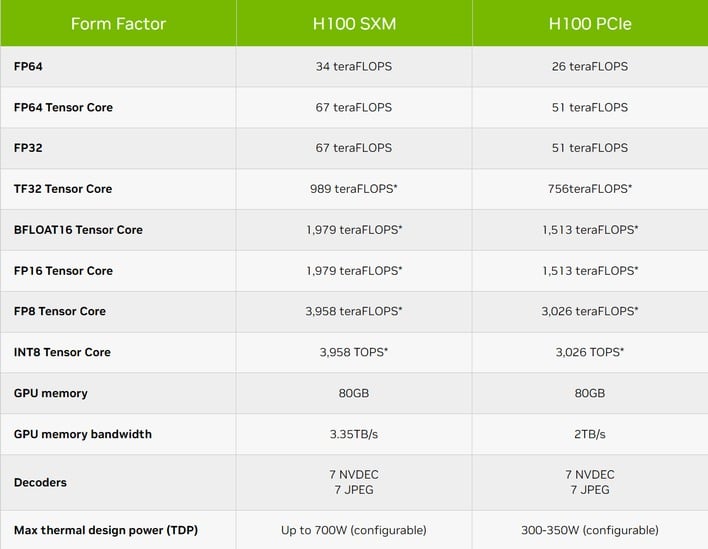

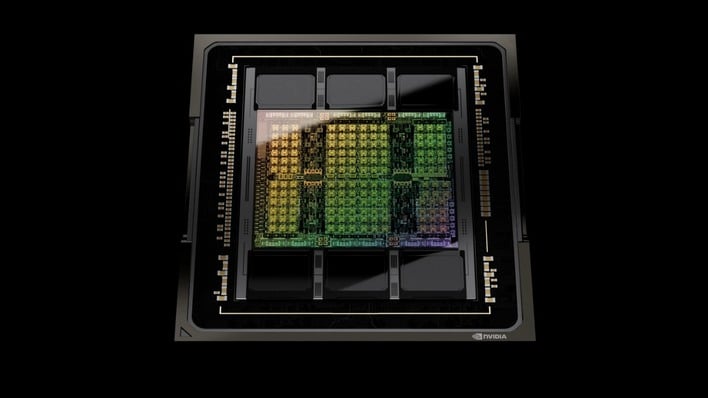

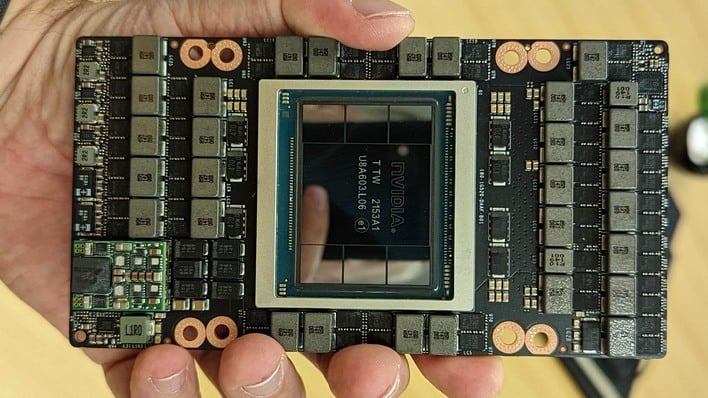

The more interesting part is the "H100 120GB" card. NVIDIA did announce Hopper H100 in a PCI Express add-in card version, but it's significantly slower than the SXM mezzanine version, and neither version has been announced with 120GB of memory onboard; both were announced with 80GB. Hopper comes as a multi-chip module (MCM), with the Hopper GPU surrounded by five stacks of HBM3 memory (plus one dummy stack for physical stability).
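To put the 120GB claim in perspective, here is the per-stack arithmetic, assuming (as a simplification) that capacity is split evenly across the five memory-bearing HBM3 stacks and that the dummy stack holds nothing. Under that assumption, going from 80GB to 120GB means each stack must grow from 16GB to 24GB. The function name is hypothetical and purely illustrative.

```python
# Assumption: five active HBM3 stacks with equal capacity; the dummy
# stack contributes no memory.
ACTIVE_STACKS = 5


def gb_per_stack(total_gb: int, stacks: int = ACTIVE_STACKS) -> float:
    """Hypothetical helper: capacity each stack must provide for `total_gb`."""
    return total_gb / stacks


for total in (80, 120):
    print(f"{total} GB over {ACTIVE_STACKS} stacks -> {gb_per_stack(total):.0f} GB per stack")
# 80 GB over 5 stacks -> 16 GB per stack
# 120 GB over 5 stacks -> 24 GB per stack
```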

Kaixin's "mysterious friend" also claims that this H100 120GB PCIe card uses the same version of the H100 chip found on the SXM mezzanine H100 cards. The friend lists some specifications: 16,896 CUDA cores and 3TB/s of memory bandwidth, both matching the specs for the faster SXM H100.

That SXM part, however, is designed for enterprise enclosures with extreme amounts of airflow, and as a result it carries an extremely high 700-watt TDP. We doubt this PCIe card runs at the same power, so its performance still wouldn't match. It does supposedly hit about 60 TFLOPS in single precision, though. That's partway between NVIDIA's specs for the H100 SXM and the H100 PCIe, and makes sense if it's a slightly wider GPU running at the same clocks as the standard PCIe card.
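Working backward from the leaked numbers shows what clock that claim implies. Assuming the same two-FLOPs-per-core-per-clock convention used for NVIDIA's peak FP32 specs, 16,896 CUDA cores reaching about 60 TFLOPS corresponds to roughly 1.78 GHz. This is an estimate derived only from the claimed figures; the helper name is hypothetical, and it says nothing about the card's actual clocks.

```python
# Assumption: peak FP32 rate = 2 FLOPs (one FMA) per CUDA core per clock.
FLOPS_PER_CORE_PER_CLOCK = 2


def implied_clock_ghz(tflops: float, cuda_cores: int) -> float:
    """Hypothetical helper: clock (GHz) at which `cuda_cores` reach `tflops`."""
    hz = (tflops * 1e12) / (FLOPS_PER_CORE_PER_CLOCK * cuda_cores)
    return hz / 1e9


clock = implied_clock_ghz(60.0, 16896)  # leaked core count and FP32 rate
print(f"{clock:.2f} GHz")  # 1.78 GHz
```

Comparing that implied clock against the published boost clock of the standard H100 PCIe card would be a quick way to test the "same clocks, wider GPU" reading.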

Of course, we've no way to verify the leak's veracity; this could simply be an attention-seeking photoshop. The claim is plausible, though: NVIDIA's enterprise customers always want more memory capacity, and a 120GB H100 would surely be just what they're looking for. NVIDIA's partners are supposed to start selling systems with H100s any time now, so we'll see whether any of them start shipping 120GB GPUs soon.