NVIDIA Launches HGX-2 AI Cloud Server Platform For High Performance Computing

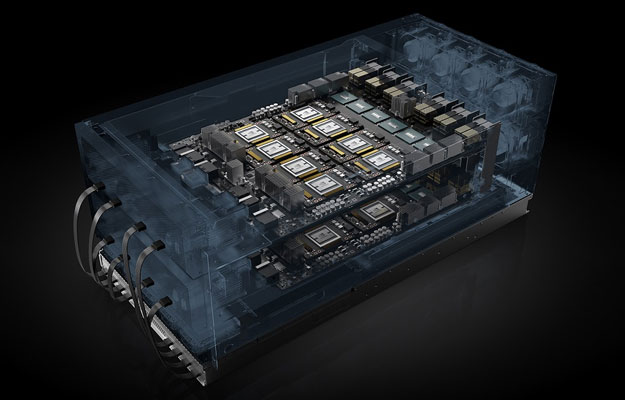

Forget about running two graphics cards in SLI. Imagine having 16 GPUs working in tandem to crunch through intensive workloads. That would be pretty awesome, right? It's also attainable, at least for certain audiences. NVIDIA today unveiled its HGX-2, a cloud server platform outfitted with 16 Tesla V100 Tensor Core GPUs working as a single, giant GPU to deliver a staggering two petaflops of performance geared towards artificial intelligence.

Yes folks, this thing could run Crysis, if that's what it was designed for. It's not, of course, nor is it for playing games in general. NVIDIA is pitching the HGX-2 as the first unified computing platform for both AI and high performance computing (HPC). To that end, it allows high-precision calculations using FP64 and FP32 for scientific computing and simulations, while also enabling FP16 and Int8 for AI training and inference.

"The world of computing has changed," said Jensen Huang, founder and chief executive officer of NVIDIA, speaking at the GPU Technology Conference Taiwan, which kicked off today. "CPU scaling has slowed at a time when computing demand is skyrocketing. NVIDIA’s HGX-2 with Tensor Core GPUs gives the industry a powerful, versatile computing platform that fuses HPC and AI to solve the world’s grand challenges."

NVIDIA's HGX-2 set a record in AI training speed by processing 15,500 images per second on the ResNet-50 training benchmark. According to NVIDIA, it can replace up to 300 CPU-only servers.

It also features NVSwitch interconnect fabric technology to seamlessly link all those GPUs together as a single workhorse. The HGX-2 is essentially a stacked system with two baseboards, each one containing eight Tesla V100 32GB Tensor Core GPUs and half a dozen NVSwitches. Each NVSwitch is a fully non-blocking NVLink switch with 18 ports, so any port can communicate with any other port at full NVLink speed. There are 48 NVLink ports between the two baseboards.
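For readers who want to see how those port counts fit together, here is a rough back-of-the-envelope sketch in Python. The GPU, switch, and port counts come from the figures above. The six NVLink links per GPU and the even split of links across switches are assumptions for illustration, not something NVIDIA states in this announcement.

```python
# Figures quoted in the article.
GPUS_PER_BASEBOARD = 8
SWITCHES_PER_BASEBOARD = 6
PORTS_PER_SWITCH = 18
INTER_BASEBOARD_PORTS = 48

# Assumption (not stated in the article): each V100 exposes six NVLink
# links, and links are spread evenly across the switches on a baseboard.
LINKS_PER_GPU = 6


def port_budget():
    """Return a per-switch breakdown of how the 18 ports could be used."""
    gpu_facing_total = GPUS_PER_BASEBOARD * LINKS_PER_GPU
    gpu_facing_per_switch = gpu_facing_total // SWITCHES_PER_BASEBOARD
    cross_board_per_switch = INTER_BASEBOARD_PORTS // SWITCHES_PER_BASEBOARD
    spare_per_switch = (
        PORTS_PER_SWITCH - gpu_facing_per_switch - cross_board_per_switch
    )
    return {
        "total_ports_per_baseboard": SWITCHES_PER_BASEBOARD * PORTS_PER_SWITCH,
        "gpu_facing_per_switch": gpu_facing_per_switch,
        "cross_board_per_switch": cross_board_per_switch,
        "spare_per_switch": spare_per_switch,
    }


if __name__ == "__main__":
    for name, value in port_budget().items():
        print(f"{name}: {value}")
```

Under those assumptions, each switch would spend eight ports on GPUs and eight on the other baseboard, leaving two of its 18 ports unused.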

"Using the GPU baseboard approach allows us to focus on delivering the most performance optimized GPU sub-system, while our server partners focus on system-level design, such as mechanicals, power, and cooling. Partners can tailor the platform to meet the specific cloud data center requirements. This reduces our partners’ resource usage; together, we can bring the latest solution to the market much faster," NVIDIA explains.

Added up, the HGX-2 boasts 512GB of GPU memory and 2,400GB/s of bisection bandwidth via 48 NVLinks, versus 256GB and 300GB/s on the HGX-1. Importantly, each GPU in the HGX-2 can access the entire 512GB GPU memory allotment at the full 300GB/s NVLink speed.
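The headline totals can be sanity-checked with a few lines of Python. This is only a sketch: the GPU count, memory per GPU, 300GB/s per-GPU NVLink speed, and 48 links are taken from the article, while the six links per GPU (and therefore 50GB/s per link) is an assumption inferred from those numbers.

```python
# Figures quoted in the article.
GPU_COUNT = 16
MEMORY_PER_GPU_GB = 32
NVLINK_PER_GPU_GB_S = 300
INTER_BASEBOARD_LINKS = 48

# Assumption (inferred, not stated in the article): six NVLink links per GPU.
LINKS_PER_GPU = 6


def hgx2_totals():
    """Recompute total GPU memory and bisection bandwidth."""
    per_link_gb_s = NVLINK_PER_GPU_GB_S // LINKS_PER_GPU
    return {
        "memory_gb": GPU_COUNT * MEMORY_PER_GPU_GB,
        "per_link_gb_s": per_link_gb_s,
        "bisection_gb_s": INTER_BASEBOARD_LINKS * per_link_gb_s,
    }


if __name__ == "__main__":
    totals = hgx2_totals()
    print(totals)  # {'memory_gb': 512, 'per_link_gb_s': 50, 'bisection_gb_s': 2400}
```

The results line up with the 512GB and 2,400GB/s figures NVIDIA quotes.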

Customers who need this kind of GPU computing power will soon be able to have it—Lenovo, QCT, Supermicro, and Wiwynn have all announced plans to bring their own HGX-2 systems to market later this year. In addition, four of the world's top original design manufacturers (ODMs) are expected to build out HGX-2 systems for use in some of the world's largest cloud datacenters, also later this year.