NVIDIA Jetson AGX Orin: The Next-Gen Platform That Will Power Our AI Robot Overlords Unveiled

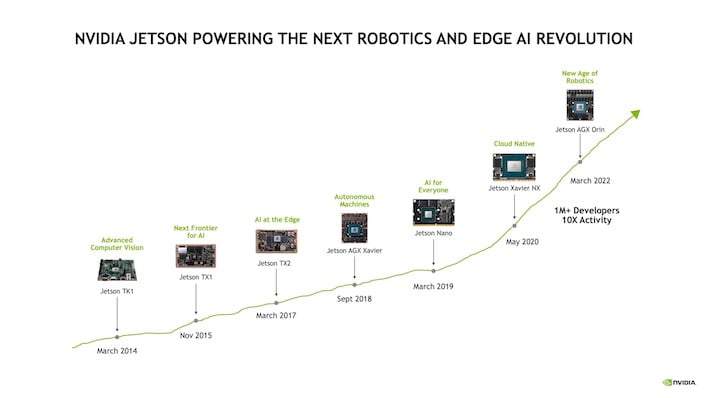

At the heart of every autonomous machine, modern industrial robot, and automated manufacturing apparatus is some form of artificial intelligence. These things are amazingly complex, too: not only are there models to train, but there are sensors to interpret and reactions to formulate. NVIDIA has been at the forefront of the recent robotics revolution with its Jetson family of single-board computers, going all the way back to the original Jetson TK1 in 2014. Eight years later, the latest member of the lineup has arrived: Jetson AGX Orin. The Orin just piles onto what NVIDIA claims to be a huge lead in AI; by its own estimation, 2020's Jetson AGX Xavier is still the fastest AI application solution on the market, yet progress marches on.

This was originally meant to be a review. We were due to receive a development kit, but COVID-19 related delays overseas and the general pain of the supply chain that everybody has experienced recently have gotten in the way. That review and overview of the development kit are still coming, but for today we have to settle for a drool-worthy AI-accelerating hardware sneak peek.

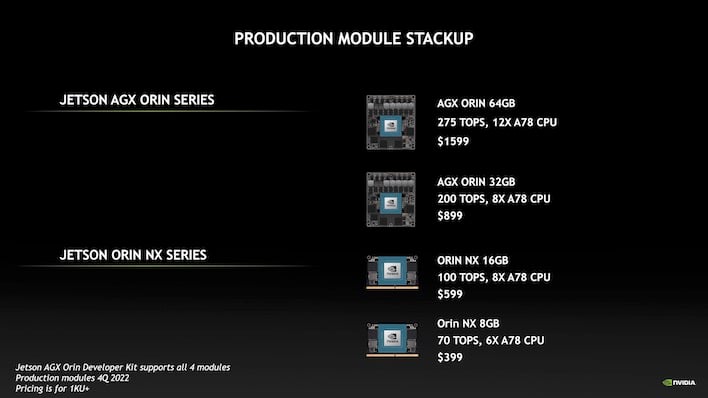

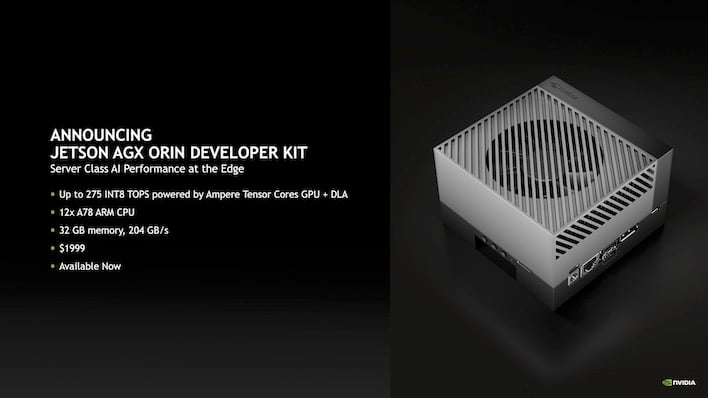

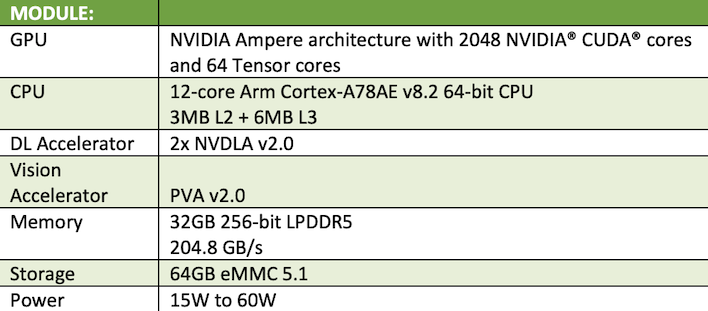

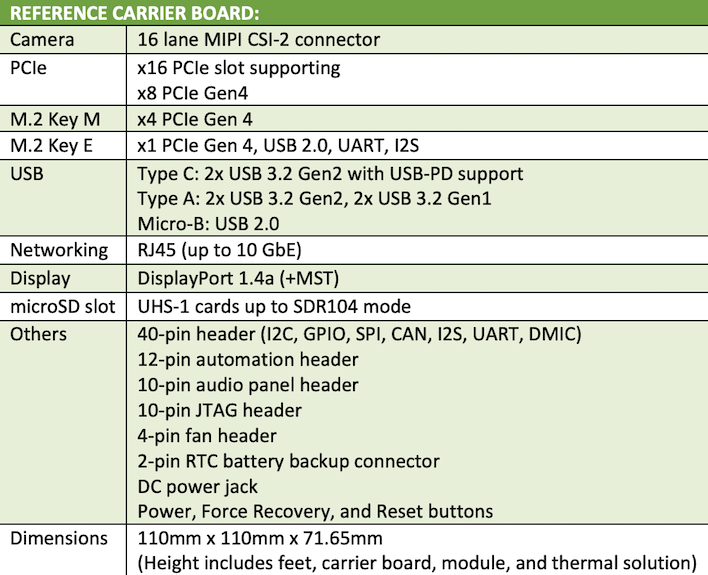

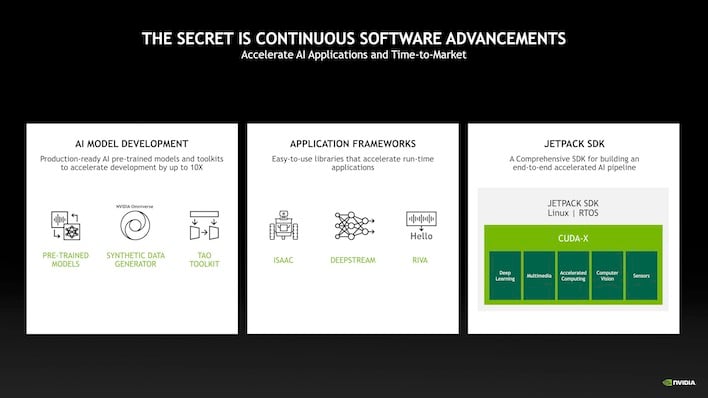

Meet Jetson AGX Orin

The Jetson AGX Orin straps NVIDIA's current Ampere architecture to just enough CPU power to keep it fed, and then opens the floodgates with tons of memory, both in terms of the size of the pool and the available bandwidth. Normally, this is where we'd write "the {{ insert CPU name here }} sits at the heart," but this time, the really heavy lifting comes from the GPU. NVIDIA has given the Jetson AGX Orin a pretty impressive amount of hardware resources: 2,048 CUDA cores, 64 Tensor cores, and a pair of NVIDIA's Deep Learning Accelerator engines (DLAs) based on the NVDLA specification.

The 64 third-generation Tensor cores, the same generation found in the A100, are one of the main reasons one might invest in NVIDIA's AI architecture. Their main purpose is to drive AI training using NVIDIA's mixed-precision Tensor Float 32 (TF32) format. Remember that AI, in both the training and inference stages, doesn't need the precision that traditional graphics rendering requires, and so it can jam multiple values into a single register and perform operations on them, offering a huge speed-up in calculations. The DLAs, on the other hand, are what you might think of as the neural network. They can be implemented in FPGAs or any number of CPU architectures and, in NVIDIA's case, it's actually a RISC-V design. They keep the Tensor cores fed with data and accelerate training operations.
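To make the reduced-precision point a little more concrete, here is a minimal Python sketch of what TF32-style precision does to a number. It assumes TF32's published layout (float32's 8-bit exponent paired with a 10-bit mantissa) and emulates it by simply truncating a float32 bit pattern. Real Tensor cores handle rounding in hardware, so `to_tf32` and `TF32_MASK` are hypothetical names for illustration, not NVIDIA's implementation.

```python
import struct

# Hypothetical helper for illustration only: emulate TF32-like precision by
# truncation. Assumes TF32 keeps float32's sign bit and 8 exponent bits but
# only the top 10 of its 23 mantissa bits.
TF32_MASK = 0xFFFFE000  # clears the low 13 mantissa bits of a float32


def to_tf32(x: float) -> float:
    """Return x truncated to a 10-bit mantissa, via its float32 bit pattern."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits & TF32_MASK))[0]


if __name__ == "__main__":
    # Values whose detail fits in 10 mantissa bits survive unchanged;
    # finer detail is dropped.
    for value in (1.0, 3.14159265, 1.0 + 2 ** -11):
        print(f"{value!r} -> {to_tf32(value)!r}")
```

The relative error introduced by this truncation stays below 2^-10, which is the kind of loss neural networks generally tolerate in exchange for much higher throughput.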

What's with all the CUDA cores, you might ask? To put it simply, NVIDIA believes every robot needs a face. It's also likely that it needs to display text, static graphics, animations, or 3D scenery. Whatever the robot calls for, there's enough raster graphics horsepower under the hood to draw it. It might seem like overkill, but a friendly UI or virtual "person" can go a long way towards making robotics more human-accessible. If you're wondering, the CUDA and Tensor core counts are the same allocations found in the mobile version of the GeForce RTX 3050, which befits a slim thermal and power budget. The mobile RTX 3050's 16 ray tracing cores are absent, however.

Once we get outside of that, the Jetson AGX Orin module has up to 64GB of LPDDR5 memory with a bandwidth of 204.8GB per second. That's actually a bit faster than the majority of the notebook GeForce RTX 3050's several configurations, and for good reason: the memory is shared between the CPU and GPU components. Speaking of CPU, there's a multi-core Arm Cortex-A78 block with 3MB of L2 and 6MB of L3 cache. NVIDIA assured us that these cores offer sufficient resources to do development directly on the development kit.
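For the curious, the 204.8GB per second figure falls out of simple arithmetic. The sketch below assumes a 256-bit memory bus and LPDDR5 running at 6,400 mega-transfers per second; neither number is stated above, so treat both as assumptions, and `peak_bandwidth_gb_s` as a hypothetical helper.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, transfers_per_second: float) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 1e9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfers_per_second / 1e9


# Assumed figures, not taken from the article: 256-bit bus, 6400 MT/s LPDDR5.
ASSUMED_BUS_WIDTH_BITS = 256
ASSUMED_TRANSFERS_PER_SECOND = 6400e6

if __name__ == "__main__":
    bandwidth = peak_bandwidth_gb_s(ASSUMED_BUS_WIDTH_BITS, ASSUMED_TRANSFERS_PER_SECOND)
    print(f"Theoretical peak: {bandwidth:.1f} GB/s")
```

This is a theoretical ceiling; because the CPU and GPU share the same pool, real workloads split that bandwidth between them.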

The Jetson AGX Orin will come in two flavors, with either 32GB or 64GB of memory, where the larger pool of memory is accompanied by a higher power budget and better overall performance. The AGX Orin 32GB has an 8-core Cortex-A78 CPU block, the 32GB of LPDDR5 indicated by its name, and can pull off 200 TOPS of AI compute power for $899. The higher-end AGX Orin 64GB has a 12-core A78 CPU, 64GB of LPDDR5, and checks in with 275 TOPS of performance for $1,599. Power scales anywhere from 15 to 60 Watts. There are also some much smaller modules in lower price brackets under the Orin NX series that pare the performance, power draw, and available memory back down to earth in either 8GB or 16GB configurations.
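As a rough way to compare the two modules, the short Python sketch below works out AI throughput per dollar from the figures quoted above. The `OrinModule` class and its field names are hypothetical and exist only for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OrinModule:
    """Spec sheet entry; values come from the figures quoted in the article."""
    name: str
    cpu_cores: int
    memory_gb: int
    tops: int
    price_usd: int

    @property
    def tops_per_dollar(self) -> float:
        return self.tops / self.price_usd


MODULES = (
    OrinModule("AGX Orin 32GB", cpu_cores=8, memory_gb=32, tops=200, price_usd=899),
    OrinModule("AGX Orin 64GB", cpu_cores=12, memory_gb=64, tops=275, price_usd=1599),
)

if __name__ == "__main__":
    for module in MODULES:
        print(f"{module.name}: {module.tops_per_dollar:.3f} TOPS per dollar")
```

By this measure the 32GB module delivers more TOPS per dollar, while the 64GB module buys extra memory, CPU cores, and peak throughput.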

The performance implications of Jetson AGX Orin are enormous compared to Jetson AGX Xavier, let alone the diminutive Jetson Nano. The company says that today, Orin is more than three times as fast as Xavier at vision and conversational AI algorithms. Furthermore, with future software enhancements in the pipeline, NVIDIA says that advantage will grow to nearly five times. New robots need all this to keep up with the increased number of sensors that go into their housing. It used to be that a couple of cameras and a microphone were about it, but modern robots have LiDAR, radar, ultrasonic sensors, and much more, and all that extra information has to be processed just as quickly as the input from a few sensors was in the past. Extra input adds up really quickly, requiring hardware to scale with it.

NVIDIA's Advanced Developer Tools

The Jetson AGX Orin launch includes specialized development hardware. This impressive-looking little system fits in something close to a mini ITX format, but customized to fit its own enclosure. The full kit includes the AGX Orin developer board, which is a middle ground of the two production boards. It gets 32GB of memory, but has the 12-core CPU and 275 TOPS performance spec of the higher-end configuration. That should definitely be enough to start coding proofs of concept and even production implementations without having to go off-board.

The dev board has quite a bit of extra IO, befitting a development kit. There's the expected JTAG and 40-pin GPIO header, additional headers for sensors, and enough USB to connect not only cameras and microphones, but keyboards and mice as well. But on top of that, there's 10-Gigabit Ethernet and even a pair of PCI Express slots. We're quite eager to dig into this little kit and see what it can do when it arrives, and you can be sure there will be follow-up coverage on that. This kit is available for order today for $1,999 as a mini PC for developers who are ready to tackle the world of robotics. It's all summed up in these tables below.

Hardware is nice to have, but great hardware can only go as far as developers can carry it. There need to be new tools, and NVIDIA is releasing new versions of all of its various APIs and a new JetPack SDK. Version 5.0 is going to be a very big release, but all the same NVIDIA says it's worked hard to maintain backwards compatibility so that developers working in the previous 4.6 release won't have to make many changes to code to migrate to the new platform. There's a newer OS underneath, too: the Orin family's software is built on Ubuntu 20.04 LTS, whereas previous releases were back on Ubuntu 18.04.
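For developers with code that has to run on both the older and newer software stacks, one simple guard is to check which Ubuntu release is underneath. The Python sketch below parses the standard `/etc/os-release` file. The function names are hypothetical, and it only identifies the base Ubuntu version, not the JetPack release itself.

```python
from typing import Dict


def parse_os_release(text: str) -> Dict[str, str]:
    """Parse KEY=value lines in the os-release format into a dictionary."""
    fields: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        fields[key] = value.strip().strip('"')
    return fields


def is_ubuntu_at_least(fields: Dict[str, str], major: int, minor: int) -> bool:
    """True if the fields describe Ubuntu at version major.minor or newer."""
    if fields.get("ID") != "ubuntu":
        return False
    parts = fields.get("VERSION_ID", "0.0").split(".")
    found = (int(parts[0]), int(parts[1]) if len(parts) > 1 else 0)
    return found >= (major, minor)


if __name__ == "__main__":
    try:
        with open("/etc/os-release", encoding="utf-8") as handle:
            info = parse_os_release(handle.read())
    except OSError:
        info = {}
    print("Ubuntu 20.04 or newer:", is_ubuntu_at_least(info, 20, 4))
```

A check like this lets a build or launch script pick the right dependencies without hard-coding which board it is running on.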

NVIDIA has also prepared a couple of demos that show off the new platform's capabilities and aid developers looking to get started. The TAO demo showcases NVIDIA's pre-trained libraries that are part of the TAO toolkit. TAO can simulate data for inference, effectively using an AI model to train another AI model, or use data provided by developers to speed along the training process. Meanwhile, the RIVA demo shows off NVIDIA's Riva Automatic Speech Recognition (ASR) and Text-to-Speech (TTS) models for natural language processing. There's a load of new models and algorithms