NVIDIA Is Using Machine Learning To Transform 2D Images Into 3D Models

Researchers at NVIDIA have come up with a clever machine learning technique for taking 2D images and fleshing them out into 3D models. Normally this happens in reverse—these days, it's not all that difficult to take a 3D model and flatten it into a 2D image. But to create a 3D model without feeding a system 3D data is far more challenging.

"In traditional computer graphics, a pipeline renders a 3D model to a 2D screen. But there’s information to be gained from doing the opposite—a model that could infer a 3D object from a 2D image would be able to perform better object tracking, for example," NVIDIA explains.

What the researchers came up with is a rendering framework called DIB-R, which stands for differentiable interpolation-based renderer. The goal was to design a framework that could accomplish this task while integrating seamlessly with machine learning techniques.
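
The "interpolation-based" part of the name can be illustrated with the barycentric interpolation that rasterizers commonly use to color a pixel from a triangle's three vertices. The sketch below is a generic illustration under that assumption, not NVIDIA's code; the function names, the triangle, and the colors are all hypothetical. Because the output is a weighted sum of the vertex colors, its gradient with respect to each vertex color is simply that vertex's weight, which is the kind of property a differentiable renderer relies on.

```python
def barycentric_weights(p, a, b, c):
    """Barycentric weights of 2D point p relative to triangle (a, b, c)."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    # Twice the signed area of the full triangle.
    area = (bx - ax) * (cy - ay) - (cx - ax) * (by - ay)
    if area == 0:
        raise ValueError("degenerate triangle")
    # Each weight is the sub-triangle area opposite a vertex, over the full area.
    w_a = ((bx - px) * (cy - py) - (cx - px) * (by - py)) / area
    w_b = ((cx - px) * (ay - py) - (ax - px) * (cy - py)) / area
    w_c = 1.0 - w_a - w_b
    return w_a, w_b, w_c


def interpolate_pixel(p, triangle, vertex_colors):
    """Blend the three vertex colors at pixel p; return None outside the triangle."""
    weights = barycentric_weights(p, *triangle)
    if min(weights) < 0:
        return None  # p lies outside the triangle
    return tuple(
        sum(w * color[k] for w, color in zip(weights, vertex_colors))
        for k in range(3)
    )


# Hypothetical example data: a right triangle with red, green, and blue corners.
TRIANGLE = ((0.0, 0.0), (4.0, 0.0), (0.0, 4.0))
COLORS = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))

print(interpolate_pixel((1.0, 1.0), TRIANGLE, COLORS))  # (0.5, 0.25, 0.25)
print(interpolate_pixel((5.0, 5.0), TRIANGLE, COLORS))  # None
```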

"The result, DIB-R, produces high-fidelity rendering by using an encoder-decoder architecture, a type of neural network that transforms input into a feature map or vector that is used to predict specific information such as shape, color, texture and lighting of an image," NVIDIA says.
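
To make the encoder-decoder idea concrete, here is a toy sketch of the data flow only: an encoder compresses an image into a small feature vector, and separate decoder heads turn that vector into shape, color, texture, and lighting predictions. Everything in it is an assumption made for illustration (the class name, the layer sizes, the single linear layers, the random untrained weights); it is not the DIB-R architecture, which the article does not describe in detail.

```python
import random


def linear(weights, vector):
    """Multiply a weight matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in weights]


def random_matrix(rows, cols, rng):
    """Small random weights; a real model would learn these during training."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


class ToyEncoderDecoder:
    """Hypothetical, untrained model showing the encoder-decoder data flow."""

    def __init__(self, num_pixels, latent_size=8, num_vertices=4, seed=0):
        rng = random.Random(seed)
        self.encoder = random_matrix(latent_size, num_pixels, rng)
        # One decoder head per predicted property (sizes are arbitrary).
        self.heads = {
            "shape": random_matrix(num_vertices * 3, latent_size, rng),  # xyz per vertex
            "color": random_matrix(num_vertices * 3, latent_size, rng),  # rgb per vertex
            "texture": random_matrix(2 * 2 * 3, latent_size, rng),       # 2x2 rgb map
            "lighting": random_matrix(3, latent_size, rng),              # light direction
        }

    def encode(self, pixels):
        """Map flattened pixels to a feature vector, with a ReLU nonlinearity."""
        return [max(0.0, v) for v in linear(self.encoder, pixels)]

    def decode(self, latent):
        """Predict each property from the shared feature vector."""
        return {name: linear(w, latent) for name, w in self.heads.items()}

    def predict(self, pixels):
        return self.decode(self.encode(pixels))


# Hypothetical 4x4 grayscale image, flattened to 16 values.
image = [i / 15 for i in range(16)]
model = ToyEncoderDecoder(num_pixels=len(image))
prediction = model.predict(image)
print({name: len(values) for name, values in prediction.items()})
```

In a real system the predicted properties would be passed to a differentiable renderer, and the difference between the rendered image and the input image would drive training.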

According to NVIDIA, this capability could be especially helpful in robotics. An autonomous robot that can turn 2D images into 3D models would be better placed to interact with its environment safely and efficiently, as it could "sense and understand its surroundings" using this technology.

NVIDIA says it takes two days to train this kind of model on a single Tesla V100 Tensor Core GPU, versus several weeks to train it without its GPUs. Once trained, the model can produce a 3D object from a 2D image in less than 100 milliseconds.

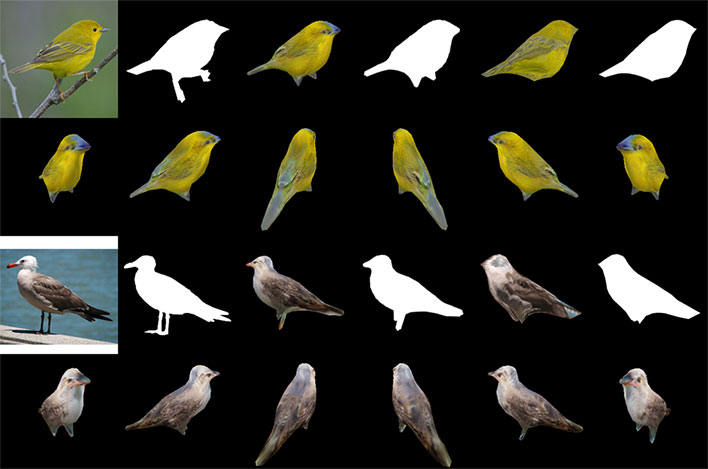

Researchers at NVIDIA trained their model on several datasets, including a collection of bird images. Once trained, a system could look at a 2D image of a bird and produce a 3D model with the right shape and texture.

"This is essentially the first time ever that you can take just about any 2D image and predict relevant 3D properties," says Jun Gao, one of a team of researchers who collaborated on DIB-R.

Beyond robotics, NVIDIA also sees this as handy for transforming 2D images of dinosaurs and other extinct animals into lifelike 3D images in under a second.