NVIDIA Releases Open-Source NeMo Guardrails To Help Keep AI Chatbots Safe And Secure

The rush by big tech to push out AI chatbots before they're fully cooked has it feeling a little bit like the Wild West. This comes with a plethora of concerns, not the least of which is the spread of misinformation, but there are safety and security issues at play as well. To help wrangle the sudden, rapid emergence of advanced AI chatbots, NVIDIA is releasing an open-source solution that developers can use to guide generative AI applications.

It's called NeMo Guardrails and it's designed to ensure that AI chatbots and other smart applications built on top of large language models (LLMs) are "accurate, appropriate, on topic, and secure."

"Today’s release comes as many industries are adopting LLMs, the powerful engines behind these AI apps. They’re answering customers’ questions, summarizing lengthy documents, even writing software and accelerating drug design.

"NeMo Guardrails is designed to help users keep this new class of AI-powered applications safe," NVIDIA stated in a blog post.

AI chatbots and other related tools have the potential to streamline tasks like writing code, revolutionize customer service, and much more. However, they're far from infallible in their current state. A recent report highlighted how employees at Google slammed the company's Bard AI chatbot as a "pathological liar" and "worse than useless."

Not to pick specifically on Bard, but it was also caught plagiarizing an article and then claiming screenshots showing the dirty deed were fake (they were not).

It's important to remember that these AI chatbots, whether powered by ChatGPT or LaMDA or whatever else, are essentially beta tools. Most of them carry disclaimers to that end. At the same time, despite some hiccups, these tools are not going away. That's where NVIDIA's NeMo Guardrails comes into play.

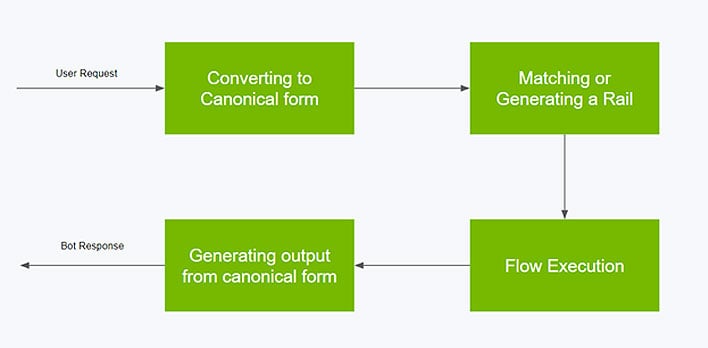

Example of a process flow of a user interaction with NeMo Guardrails (Source: NVIDIA)

NVIDIA said it designed NeMo Guardrails to work with all LLMs, including OpenAI's ChatGPT tool. Using the open-source solution, developers can set up three kinds of boundaries: topical guardrails that prevent apps from wading into undesired areas (like a customer service bot chatting about the weather), safety guardrails to ensure apps respond accurately and appropriately (they can ensure references are only made to credible sources), and security guardrails to restrict apps to making connections only to external third-party software that is known to be safe.

According to NVIDIA, just about any software developer can use NeMo Guardrails, not just machine learning experts and data scientists. It only takes a few lines of code to quickly create rules.
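To give a rough feel for what a rule like that amounts to, here is a toy Python sketch of a topical guardrail and a security guardrail. To be clear, it does not use NeMo Guardrails' actual API or configuration format. Every name in it (`ToyGuardrails`, `check_topic`, `check_tool`, `fake_llm`) is hypothetical, and the keyword matching is a deliberately naive stand-in for whatever the real toolkit does under the hood.

```python
from dataclasses import dataclass, field
from typing import Callable, Set


@dataclass
class ToyGuardrails:
    """Hypothetical illustration only; not the NeMo Guardrails API."""

    # Topical rail: words that mark a user message as off topic.
    off_topic_keywords: Set[str] = field(default_factory=set)
    # Security rail: the only external tools the app may connect to.
    allowed_tools: Set[str] = field(default_factory=set)
    refusal: str = "Sorry, I can only help with questions about your order."

    def check_topic(self, user_message: str) -> bool:
        """Return True if the message contains no off-topic keyword."""
        words = {w.strip(".,!?").lower() for w in user_message.split()}
        return not (words & self.off_topic_keywords)

    def check_tool(self, tool_name: str) -> bool:
        """Return True only for tools on the allowlist."""
        return tool_name in self.allowed_tools

    def respond(self, user_message: str, llm: Callable[[str], str]) -> str:
        """Refuse off-topic messages; otherwise pass the message to the model."""
        if not self.check_topic(user_message):
            return self.refusal
        return llm(user_message)


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call, so the sketch runs offline."""
    return f"(model reply to: {prompt})"


rails = ToyGuardrails(
    off_topic_keywords={"weather", "forecast"},
    allowed_tools={"order_lookup"},
)

print(rails.respond("What's the weather like today?", fake_llm))
print(rails.respond("Where is my order?", fake_llm))
print(rails.check_tool("order_lookup"), rails.check_tool("shell_exec"))
```

In this sketch, the customer service bot declines the weather question, answers the order question, and would only be allowed to call the one tool on its allowlist. A safety guardrail, such as checking that a reply cites only credible sources, would slot in as another check inside `respond`.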

"Since NeMo Guardrails is open source, it can work with all the tools that enterprise app developers use.

"For example, it can run on top of LangChain, an open-source toolkit that developers are rapidly adopting to plug third-party applications into the power of LLMs," NVIDIA adds.

You can check out NVIDIA's blog post on NeMo Guardrails for more info, including how to start using its new tool.