NVIDIA's H100 Hopper GPU Sweeps MLPerf AI Inference Tests With Huge Performance Uplift

The 2023 GTC Developer conference included 650 talks from leaders in AI development. One of the most prominent of those was from NVIDIA's own Jensen Huang. Huang's keynote speech covered how the company and its partners are offering everything from training to deployment for cutting-edge services. Included in all that is NVIDIA's new H100 NVL with dual-GPU NVLink. The new GPU is based on NVIDIA's Hopper architecture and features a Transformer Engine design.

"The next level of Generative AI requires new AI infrastructure to train large language models with great energy efficiency. Customers are ramping Hopper at scale, building AI infrastructure with tens of thousands of Hopper GPUs connected by NVIDIA NVLink and InfiniBand," Huang remarked in a recent NVIDIA blog post.

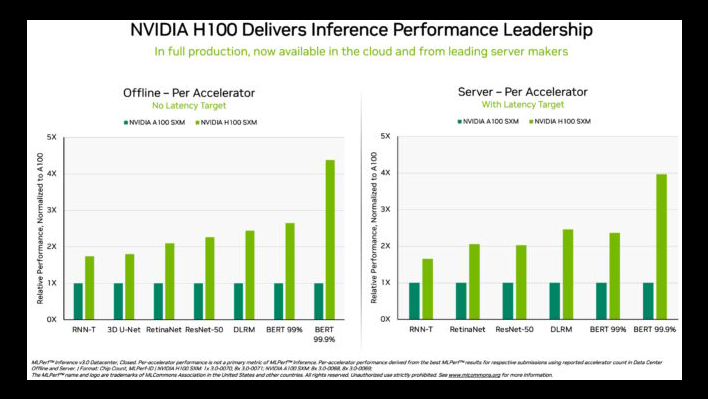

The performance uplift shows up in the MLPerf results, where, according to NVIDIA, "NVIDIA H100 Tensor Core GPUs running in DGX H100 systems delivered the highest performance in every test of AI inference, the job of running neural networks in production." This is in large part due to software optimizations, which pushed the GPUs to deliver up to 54% more performance than at their debut in September of last year. Healthcare workloads also benefited, with a 31% performance increase.

L4 Tensor Core GPUs are also seeing a 3x performance increase over last-generation hardware. NVIDIA pointed out that the L4 was particularly impressive on the performance-hungry BERT model, thanks to its support for the key FP8 format.

Ten companies submitted results on the NVIDIA platform during this round of MLPerf testing. Those included the Microsoft Azure cloud service, along with system makers such as ASUS, Dell Technologies, Gigabyte, H3C, Lenovo, Nettrix, Supermicro and xFusion.

Anyone wanting to take a deeper dive into the MLPerf results can do so by visiting NVIDIA's developer website.