Research Study Claims AI-Generated Faces Are More Trustworthy Than Real Humans

Artificial intelligence (AI) research continues to advance as its uses become more diverse. An AI agent from Sony AI recently took on human players in the PlayStation-exclusive Gran Turismo, leaving its human opponents seething. AI is also a driving force behind the metaverse, as companies battle for dominance in the land of make-believe. But perhaps one of the most interesting, and often disturbing, avenues of AI has been its use in creating realistic human imagery, also known as deep-fakes.

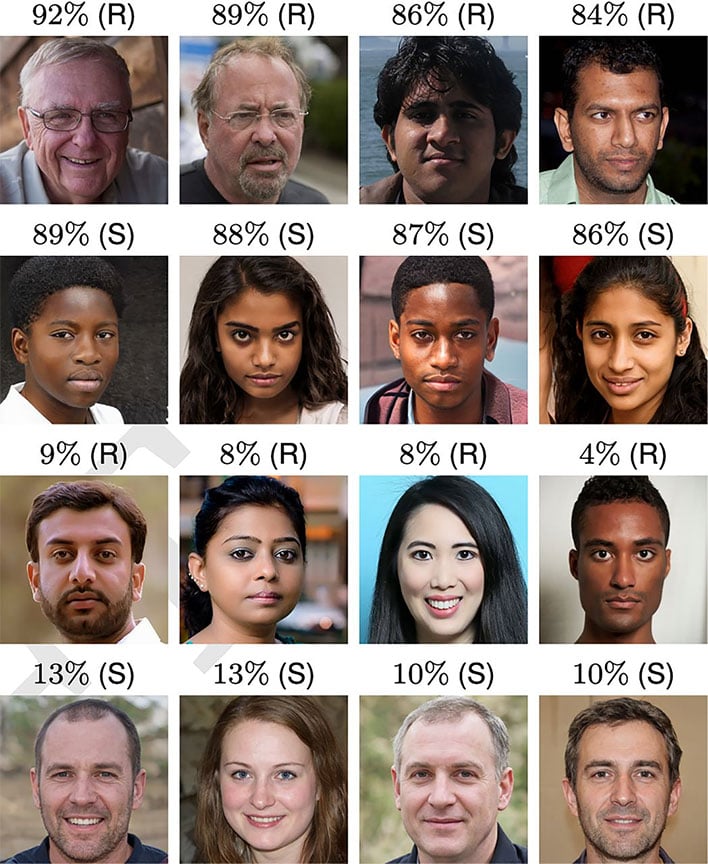

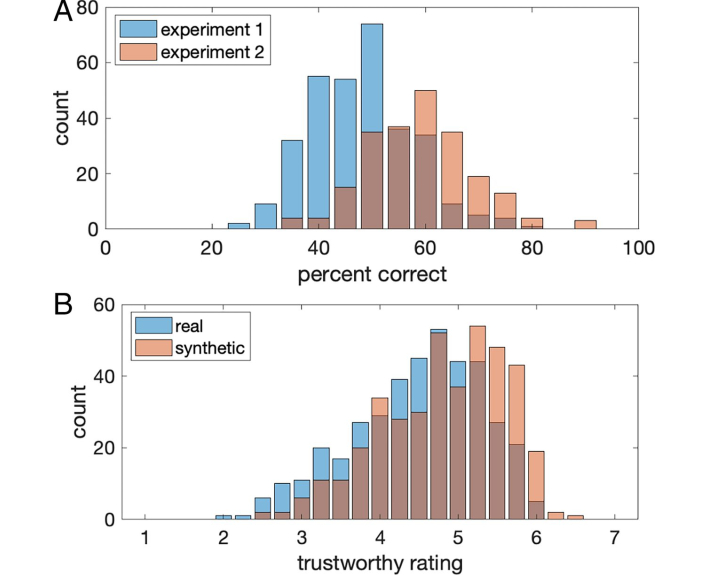

In a recent paper published in the Proceedings of the National Academy of Sciences of the United States of America (PNAS), researchers suggest that AI-powered audio, image, and video synthesis has become so good that mere mortals actually seem to trust artificially generated faces more than the faces of real human beings. The days of spotting deep-fakes by their "uncanny valley" quality or an empty stare are quickly disappearing.

Generative adversarial networks (GANs) are a popular method of synthesizing content. A GAN uses two neural networks, a generator and a discriminator, and pits them against one another. As the generator creates synthetic images, the discriminator tries to tell them apart from real ones; whenever it succeeds, the generator is penalized. Each penalty pushes the generator to create more realistic faces, while the discriminator keeps getting better at spotting fakes, until the discriminator can no longer tell the fakes from the real ones.
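To make the generator-versus-discriminator loop more concrete, here is a deliberately tiny sketch in plain Python (standard library only). It is not the face model from the study: as a simplifying assumption, the "real data" are single numbers drawn from a normal distribution N(0, 1) instead of images, the generator only learns one shift parameter `theta` (it outputs `theta + z` for random noise `z`), and the discriminator is a logistic regression `D(x) = sigmoid(w * x + c)`. The function name `train_toy_gan` and all hyperparameters are illustrative choices, not anything taken from the paper.

```python
import math
import random


def sigmoid(s: float) -> float:
    # Numerically stable logistic function.
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 3000, batch: int = 64, lr: float = 0.1, seed: int = 0) -> float:
    """Hypothetical 1-D toy GAN; returns the generator's learned shift theta.

    Real data:     samples from N(0, 1), standing in for "real faces".
    Generator:     G(z) = theta + z with z ~ N(0, 1); starts far away at theta = 3.
    Discriminator: D(x) = sigmoid(w * x + c), its estimated probability that x is real.
    """
    rng = random.Random(seed)
    theta = 3.0      # generator parameter
    w, c = 0.0, 0.0  # discriminator parameters

    for _ in range(steps):
        real = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [theta + rng.gauss(0.0, 1.0) for _ in range(batch)]

        # Discriminator step: minimize -log D(real) - log(1 - D(fake)),
        # i.e. get better at telling real samples from generated ones.
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw += (d - 1.0) * x
            gc += d - 1.0
        for x in fake:
            d = sigmoid(w * x + c)
            gw += d * x
            gc += d
        w -= lr * gw / batch
        c -= lr * gc / batch

        # Generator step: minimize -log D(G(z)) (the "non-saturating" loss).
        # The loss is large when the discriminator flags the fakes, so the
        # generator is penalized and shifts its samples toward what the
        # discriminator currently accepts as real.
        gtheta = 0.0
        for x in fake:
            d = sigmoid(w * x + c)
            gtheta += (d - 1.0) * w  # derivative of -log sigmoid(w*x + c) w.r.t. theta
        theta -= lr * gtheta / batch

    return theta


if __name__ == "__main__":
    print(f"learned shift: {train_toy_gan():.3f}")
```

Calling `train_toy_gan()` should return a value close to 0, the mean of the real data: the generator has moved its samples until the discriminator can no longer separate them from real ones by position. Because a linear discriminator can only compare where the two distributions sit, this toy generator learns a shift and nothing more; face generators replace both players with deep convolutional networks.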

Results from the study suggest that people can easily be deceived by machine-generated faces. "We found that not only are synthetic faces highly realistic, they are deemed more trustworthy than real faces," stated study co-author Hany Farid, a professor at the University of California, Berkeley. This raises concerns that highly sophisticated deep-fakes could be very effective tools for harmful ends.

Perhaps the greatest concern is that machine-generated imagery could be used for malicious purposes: weaponized in disinformation campaigns for political or personal gain, used to create fake pornography for blackmail, or exploited through a number of other tactics. This has created an "arms race" of sorts between those developing countermeasures to identify deep-fakes and cybercriminals trying to take advantage of the technology. The researchers contend that "Although progress has been made in developing automatic techniques to detect deep-fake content, current techniques are not efficient or accurate enough to contend with the torrent of daily uploads."

The authors end the study with an ominous observation, stating, "We, therefore, encourage those developing these technologies to consider whether the associated risks are greater than their benefits. If so, then we discourage the development of technology simply because it is possible."