Facebook, Michigan State University Researchers Develop Tech To Pinpoint Origin Of Deepfakes

While there may be funny and impressive deepfakes out there, the technology poses a risk to trustworthy media and public figures. Companies are working to counter that risk by developing robust deepfake detection tools. Facebook, working with Michigan State University researchers, is the latest to join the effort, with a method that reverse engineers the generative model behind a single deepfake.

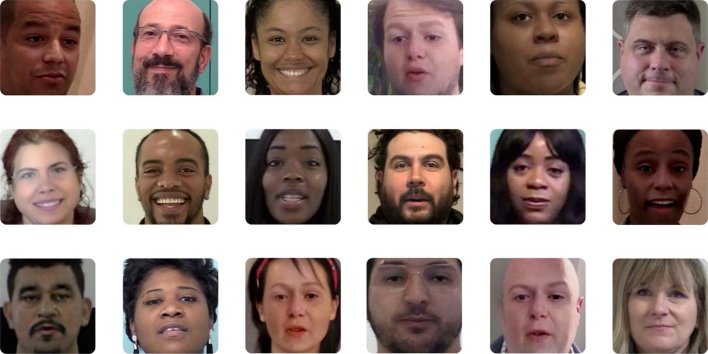

Figuring out whether an image is real or fake has become increasingly difficult as new deepfake generative models are created. For example, if the U.S. President were deepfaked into a video where he says something defamatory about another country, and the fake went unidentified, it could spark hostilities or even war. To combat this and go a step further than current deepfake detection techniques, Facebook is implementing a “reverse engineering” method that uses the unique patterns, or fingerprints of sorts, left in images to attribute them to a specific AI model.

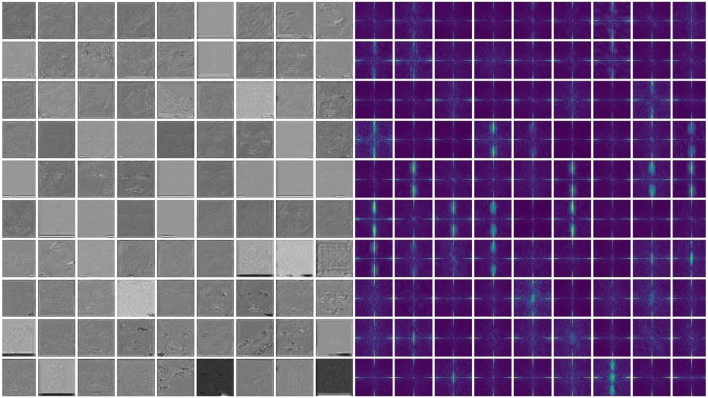

Estimated fingerprint on the left and a corresponding frequency spectrum on the right.
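
To make the fingerprint idea a little more concrete, here is a minimal Python sketch. It is not the researchers' actual method: it simply assumes that a fingerprint can be roughly approximated by the high-frequency residual left after subtracting a blurred copy of an image, and then computes a frequency spectrum of that residual. The function names (`box_blur`, `residual_fingerprint`, `log_spectrum`, `demo_image`) and the synthetic test image are illustrative assumptions, not anything taken from Facebook's work.

```python
import numpy as np


def box_blur(img: np.ndarray, k: int = 3) -> np.ndarray:
    """Mean filter over a k x k window, using edge padding at the borders."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    out = np.zeros(img.shape, dtype=np.float64)
    # Sum the k*k shifted copies of the image, then divide to get the mean.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)


def residual_fingerprint(img: np.ndarray) -> np.ndarray:
    """Assumed stand-in for a fingerprint: the image minus a smoothed copy."""
    img = img.astype(np.float64)
    return img - box_blur(img)


def log_spectrum(fingerprint: np.ndarray) -> np.ndarray:
    """Centered log-magnitude 2D frequency spectrum of the fingerprint."""
    spectrum = np.fft.fftshift(np.fft.fft2(fingerprint))
    return np.log1p(np.abs(spectrum))


def demo_image(size: int = 64, period: int = 4, amplitude: float = 5.0) -> np.ndarray:
    """Hypothetical 'generated' image: smooth content plus a faint periodic trace."""
    y, x = np.mgrid[0:size, 0:size]
    smooth_content = (x + y).astype(np.float64)
    periodic_trace = amplitude * np.cos(2 * np.pi * x / period)
    return smooth_content + periodic_trace


if __name__ == "__main__":
    fingerprint = residual_fingerprint(demo_image())
    spectrum = log_spectrum(fingerprint)
    peak = np.unravel_index(np.argmax(spectrum), spectrum.shape)
    print("strongest frequency component at (row, col):", peak)
```

In this toy image, the periodic trace survives the blur subtraction and shows up as a pair of bright, symmetric peaks away from the center of the spectrum, which is the kind of regular pattern a fingerprint-based approach could try to match to a particular generator.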

While this may seem like a lot to take in, the short version is that this research “pushes the boundaries of understanding in deepfake detection, introducing the concept of model parsing that is more suited to real-world deployment.” Hopefully, it will also help keep deepfakes silly and fun, rather than letting them become a threat to democracy, journalism, and communications for everyone.