This Clever Trick Turns A $99 AMD Ryzen APU Into A 16GB AI Accelerator

AI image generation with models like Stable Diffusion wants two things: lots of memory and as much parallel compute throughput as you can throw at the problem. GPUs are an obvious fit for this kind of task because, these days, they are little more than massive parallel compute arrays. However, if you're running the model on your GPU, it needs to fit into the GPU's memory, which means cards with less than 8GB of video RAM need special accommodations that can really stifle performance.

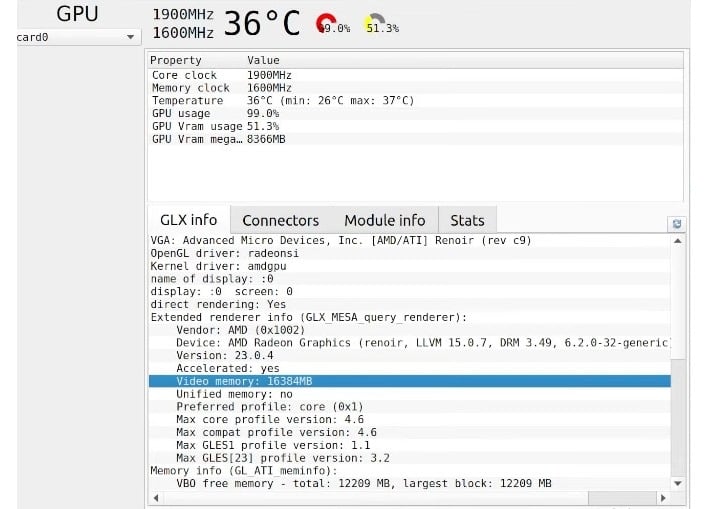

Systems with integrated graphics are typically configured so that a certain amount of system memory is walled off and dedicated to the graphics processor. The system can then allocate extra memory to the GPU if it is needed. However, this typically tops out at around 4GB. By fiddling with firmware settings (or kernel options in Linux), though, you can dedicate as much as 16GB of system RAM to the onboard graphics.
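On Linux, one way to confirm how much memory actually ended up assigned to the integrated GPU is to read the counters the amdgpu driver exposes in sysfs. The snippet below is a minimal sketch, not the Redditor's procedure: it assumes the APU is driven by amdgpu and appears as `card0` (on multi-GPU systems it may be `card1` or higher), and the helper name `read_amdgpu_memory` is our own invention. `mem_info_vram_total` reflects the dedicated carve-out, while `mem_info_gtt_total` is the pool of system memory the GPU can map on demand.

```python
from pathlib import Path

# Sysfs files exposed by the Linux amdgpu driver; each holds a size in bytes.
# The default "card0" path below is an assumption about the system layout.
AMDGPU_MEMORY_FILES = ("mem_info_vram_total", "mem_info_gtt_total")


def read_amdgpu_memory(device_dir: str = "/sys/class/drm/card0/device") -> dict[str, float]:
    """Return the amdgpu VRAM and GTT pool sizes in GiB, skipping any missing files."""
    sizes: dict[str, float] = {}
    for name in AMDGPU_MEMORY_FILES:
        path = Path(device_dir) / name
        if path.is_file():
            # Values are plain integers in bytes; convert to GiB for readability.
            sizes[name] = int(path.read_text().strip()) / 2**30
    return sizes


if __name__ == "__main__":
    for name, gib in read_amdgpu_memory().items():
        print(f"{name}: {gib:.1f} GiB")
```

After raising the allocation in the firmware setup and rebooting, `mem_info_vram_total` should report the new, larger figure if the change took effect.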

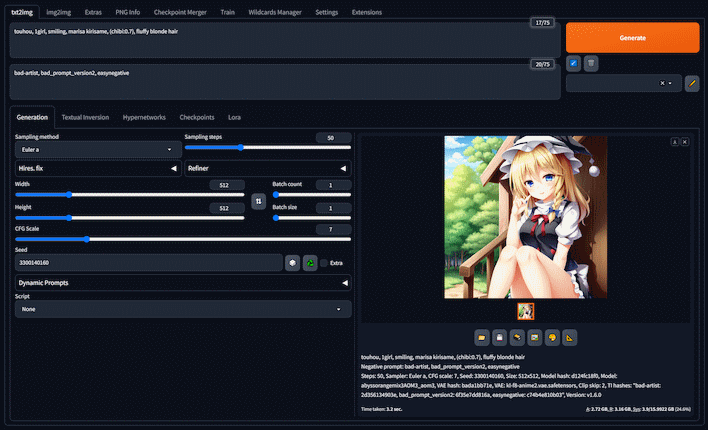

As it turns out, memory bandwidth matters less than memory capacity for this task, so an integrated GPU with gobs of RAM assigned to it can actually perform okay in Stable Diffusion. We haven't tested it ourselves, but Reddit user /u/chain-77 says he can generate a fifty-step 512x512 image in a little under two minutes. That's quite slow compared to any recent discrete GPU, but it's more than four times as fast as CPU-based generation on our eight-core Ryzen 7 5800X.
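To put those figures in per-step terms, here's some quick arithmetic. Treating "a little under two minutes" as 115 seconds is our assumption, and "more than four times as fast" is used only as a lower bound, so the CPU figure is a floor rather than a measurement.

```python
# Back-of-the-envelope numbers from the reported result.
STEPS = 50                      # fifty sampling steps per 512x512 image
IGPU_SECONDS_PER_IMAGE = 115.0  # assumption: "a little under two minutes"
CPU_SLOWDOWN_FLOOR = 4.0        # "more than four times as fast", used as a lower bound


def seconds_per_step(seconds_per_image: float, steps: int) -> float:
    """Average time spent on each denoising step."""
    return seconds_per_image / steps


igpu_step = seconds_per_step(IGPU_SECONDS_PER_IMAGE, STEPS)
# If the iGPU is at least 4x faster, the CPU needs at least 4x as long per image.
cpu_image_min = IGPU_SECONDS_PER_IMAGE * CPU_SLOWDOWN_FLOOR

print(f"iGPU: {igpu_step:.2f} s/step ({1 / igpu_step:.2f} it/s)")  # 2.30 s/step (0.43 it/s)
print(f"CPU: at least {cpu_image_min / 60:.1f} minutes per image")  # at least 7.7 minutes
```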

The Redditor, who also goes by "Tech-Practice" on X (formerly Twitter) and YouTube, achieved this performance under Linux on a Ryzen 5 4600G. That's a six-core Zen 2 processor whose Vega-based integrated GPU has 7 compute units, for a total of 448 shaders. The GPU clocks up to 1.9GHz, giving this integrated part some 1.7 TFLOPS of FP32 compute. That's not bad for an integrated GPU, and although even a lowly Radeon RX 6400 is more than twice as fast, the RX 6400 will struggle with higher-resolution image generation because it has only 4GB of local memory.
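The 1.7 TFLOPS figure follows from the usual peak-throughput formula: shaders times two FLOPs per clock (one fused multiply-add) times clock speed. A minimal sketch, where the function name `peak_fp32_tflops` is just an illustrative helper and the 64-shaders-per-CU figure is the standard Vega layout that yields the 448 shaders quoted above:

```python
def peak_fp32_tflops(shaders: int, clock_ghz: float) -> float:
    """Theoretical FP32 throughput: each shader retires one fused
    multiply-add (counted as 2 FLOPs) per clock cycle."""
    gflops = shaders * 2 * clock_ghz
    return gflops / 1000


# Ryzen 5 4600G integrated Vega: 7 CUs x 64 shaders per CU = 448 shaders at 1.9 GHz.
vega7 = peak_fp32_tflops(7 * 64, 1.9)
print(f"Vega 7 iGPU: {vega7:.2f} TFLOPS")  # 1.70
```

Keep in mind this is a theoretical ceiling; real Stable Diffusion throughput also depends on memory bandwidth, drivers, and how well the software stack supports the chip.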

It's an interesting experiment, for sure. The poster says the large pool of video memory also lets the integrated Vega GPU run a wide variety of other AI applications that would fail on much faster discrete GPUs with less onboard memory. As we said above, if your model doesn't fit in the GPU's memory, it either won't run at all or needs workarounds that slow it down. Many AI models are too big for the 8GB of video RAM commonly found on discrete GPUs, but doubling that capacity can let you run them on an integrated GPU, albeit at a glacial pace.
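A rough way to reason about "does it fit?" is to start from the size of the model's weights: parameter count times bytes per parameter. The sketch below is illustrative only. The 4-billion-parameter model is hypothetical, and the fixed 2 GiB `overhead_gib` allowance for activations and runtime buffers is our assumption; real overhead varies with resolution, batch size, and software stack.

```python
def weights_gib(params: float, bytes_per_param: int) -> float:
    """Memory needed just to hold the model weights, in GiB."""
    return params * bytes_per_param / 2**30


def fits(params: float, bytes_per_param: int, vram_gib: float,
         overhead_gib: float = 2.0) -> bool:
    """Rough check: weights plus a fixed (assumed) allowance for activations
    and runtime buffers must fit within the GPU's memory."""
    return weights_gib(params, bytes_per_param) + overhead_gib <= vram_gib


model_params = 4e9  # hypothetical 4-billion-parameter model
for vram in (8, 16):  # VRAM capacities treated as GiB
    verdict = "fits" if fits(model_params, 2, vram) else "does not fit"
    print(f"{weights_gib(model_params, 2):.1f} GiB of FP16 weights, {vram}GB GPU: {verdict}")
```

With these assumptions, the hypothetical model's roughly 7.5 GiB of FP16 weights overflow an 8GB card once overhead is counted, but sit comfortably inside a 16GB allocation.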

If we're honest, at least for AI image generation specifically, it's difficult to recommend this path to anyone who wants to use it as a tool rather than as a toy. A lowly GeForce RTX 3060 12GB can be had for under $300, and it will cut generation times on this same task from just under two minutes on the Vega IGP all the way down to about 10 seconds per image. Its 12GB of video RAM is plenty for batch generations of four, six, or even eight images at once, depending on the image size. Of course, that's an extra $300 on top of the price of the rest of the system.
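For anyone generating in volume, throughput per hour is the more telling number. The quick comparison below uses the approximate single-image timings above; treating "just under two minutes" as 115 seconds is our assumption, and batching on the RTX 3060 could widen the gap further.

```python
def images_per_hour(seconds_per_image: float) -> float:
    """Sequential single-image throughput."""
    return 3600 / seconds_per_image


IGPU_SECONDS = 115.0    # assumption: "just under two minutes" on the Vega IGP
RTX3060_SECONDS = 10.0  # "about 10 seconds per image"

print(f"Vega IGP: {images_per_hour(IGPU_SECONDS):.0f} images/hour")       # 31
print(f"RTX 3060 12GB: {images_per_hour(RTX3060_SECONDS):.0f} images/hour")  # 360
print(f"Speedup: {IGPU_SECONDS / RTX3060_SECONDS:.1f}x")                  # 11.5x
```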

Still, we have to admire the ingenuity of /u/chain-77. It takes some moxie to even attempt this kind of workload on an integrated GPU, and the results are genuinely worthwhile. The Ryzen 5 4600G's six Zen 2 cores won't be as fast as our eight-core Zen 3 processor, so the iGPU's speedup over that chip's own CPU cores should be even greater than 4x. Folks looking to fool around with AI may want to look into the memory settings available in their system's UEFI setup.