Samsung Unveils GDDR6W Graphics Memory Enabling 2X Capacity And A Huge Performance Lift

When we're talking about a memory interface, there are a bunch of salient specifications, but the two most important are generally its width and its transfer rate. Usually, when we hear about new memory, the main marketing merit is its sky-high transfer rate, but Samsung's taking a different tack with GDDR6W.

Specifically for PC GPUs, memory interface width tends to vary based on product segment. The widest non-HBM memory interfaces have been as big as 512 bits, although current cards top out at 384 bits. Making a massively-parallel memory interface can open the door to mega-sized bandwidth, but there are all kinds of limitations implied with that practice.

For starters, you need enough memory chips to fill the interface. Typical GDDR memory chips have used 32-bit interfaces, which means you need fully 16 chips to fill up a 512-bit interface. That's a plethora of packages to pile onto a PCB, and a whole lot of traces you need to route through said board, too. This can lead to practices like placing parts on the back of the board, such as NVIDIA did with its high-end GeForce RTX 3000 cards. That's a sub-optimal solution, because those DRAM packages aren't being cooled effectively.
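To make the chip-count arithmetic concrete, here is a minimal Python sketch. The function name `chips_needed` is our own illustration, and it assumes every chip on the bus has the same interface width.

```python
def chips_needed(bus_width_bits: int, chip_width_bits: int) -> int:
    """Number of identical memory chips required to fill a memory bus."""
    if bus_width_bits % chip_width_bits != 0:
        raise ValueError("bus width must be a multiple of the chip width")
    return bus_width_bits // chip_width_bits


# Typical GDDR chips expose a 32-bit interface.
for bus_width in (256, 384, 512):
    print(f"{bus_width}-bit bus: {chips_needed(bus_width, 32)} chips")
```

Every one of those chips needs its own board area and its own set of routed traces, which is why very wide buses get awkward quickly.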

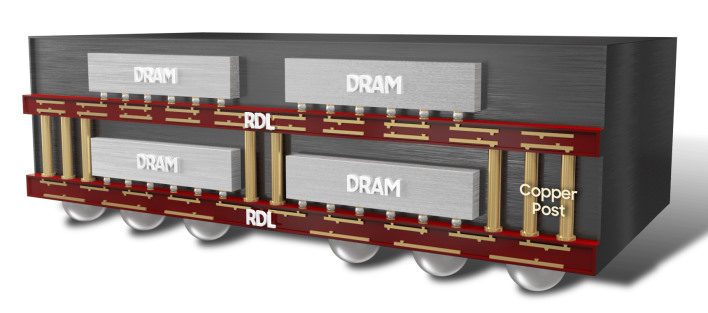

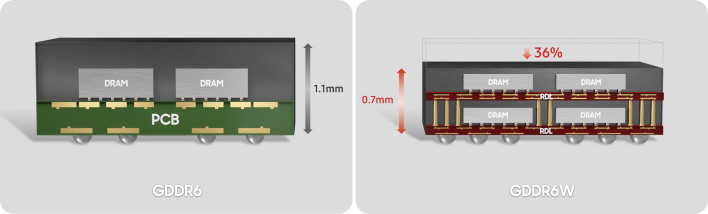

So what is GDDR6W? Basically, it's GDDR6, vertically stacked, although the reality is a little more involved than that. Essentially, Samsung has figured out how to stack silicon on silicon with no PCB involved at all. This lets them offer GDDR6 packages that are twice as dense as their previous packages while offering double the interface width (x64) and also decreasing overall package height by 36%.

To restate all that, a GDDR6W package can have twice the memory capacity of a GDDR6 package, and connects to two 32-bit interfaces, all while being just 0.7mm tall, significantly shorter than the 1.1mm of one of the company's previous GDDR6 packages. Notably, there are no sacrifices in terms of transfer rate required to achieve these gains, which means that GPUs using the new RAM could have the same capacity and bandwidth in half the space.
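As a quick sanity check on those figures, the sketch below works out the height reduction and the package count for a given bus width. The helper names and the 384-bit example bus are our own choices for illustration; only the 0.7mm, 1.1mm, x32, and x64 values come from the text.

```python
def height_reduction_percent(old_mm: float, new_mm: float) -> float:
    """Percentage by which the package height shrinks."""
    return (old_mm - new_mm) / old_mm * 100


def packages_for_bus(bus_width_bits: int, package_width_bits: int) -> int:
    """Packages needed to fill a bus, assuming identical packages."""
    return bus_width_bits // package_width_bits


reduction = height_reduction_percent(old_mm=1.1, new_mm=0.7)
print(f"Height reduction: {reduction:.0f}%")

bus = 384  # example bus width, chosen for illustration
print(f"x32 packages on a {bus}-bit bus: {packages_for_bus(bus, 32)}")
print(f"x64 packages on a {bus}-bit bus: {packages_for_bus(bus, 64)}")
```

The height figure lines up with the 36% Samsung quotes, and the package count for a fixed bus width is cut in half.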

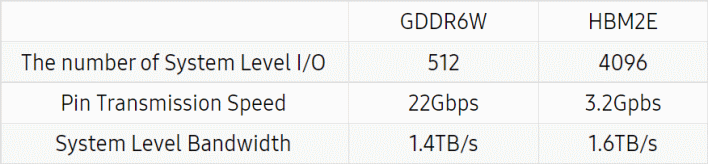

Samsung's buzzword-laden PR makes comparisons against HBM2E, which requires drastically more connections between the processor and the memory package than traditional GDDR DRAM. Despite that, thanks to its extremely high transfer rate, Samsung's GDDR6W can come within striking distance of the performance of HBM2E.

The company points out that it can achieve this using standard BGA packaging; that is, without using an interposer layer, unlike HBM. On the other hand, we'd point out that HBM2E scales beyond the mentioned 3.2 Gbps transfer rate, and also that extant HBM2E GPUs are already using more than the four stacks represented in the comparison. Then again, the cost of HBM in general is comparatively very high, with chip and wire packaging versus standard commercial DRAM BGA packages.
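For a rough sense of how such a comparison works out, peak bandwidth is simply the number of data pins multiplied by the per-pin transfer rate. The sketch below is ours, not Samsung's math. The four HBM2E stacks and 3.2 Gbps rate come from the comparison discussed above, and 1,024 bits per stack is the standard HBM interface width. The 22 Gbps per-pin rate and the 512-bit system-level interface on the GDDR6W side are assumptions that are not stated in the text above.

```python
def peak_bandwidth_gb_s(data_pins: int, gbps_per_pin: float) -> float:
    """Theoretical peak bandwidth in GB/s (8 bits per byte)."""
    return data_pins * gbps_per_pin / 8


# HBM2E: four stacks, each with a 1,024-bit interface, at 3.2 Gbps per pin.
hbm2e = peak_bandwidth_gb_s(data_pins=4 * 1024, gbps_per_pin=3.2)

# GDDR6W: ASSUMED 512 data pins at an ASSUMED 22 Gbps per pin.
gddr6w = peak_bandwidth_gb_s(data_pins=512, gbps_per_pin=22.0)

print(f"HBM2E : {hbm2e:.1f} GB/s")
print(f"GDDR6W: {gddr6w:.1f} GB/s")
print(f"GDDR6W / HBM2E: {gddr6w / hbm2e:.0%}")
```

Under those assumptions, the GDDR setup reaches a large fraction of the HBM2E figure with one-eighth as many data pins, which is the gist of the "striking distance" claim.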

Still, GDDR6W is certainly a step forward in terms of memory density and bandwidth. It will be fascinating to see if AMD, NVIDIA, or Intel deploy the technology in conjunction with future GPUs and graphics cards.
