Tesla's In-House Supercomputer Taps NVIDIA A100 GPUs For 1.8 ExaFLOPs Of Performance

Have you ever had a friend or family member casually drop big news on you, as if the subject they are talking about is an everyday occurrence? For example, "Yeah, the weather was great this weekend, I got a lot of yard work done. Next month I'm going to the moon with Elon Musk. Oh, I bought a new hedge trimmer at Walmart, it works great!" Well, speaking of Musk, Tesla is that friend, after having casually announced it owns one of the most powerful supercomputers ever built.

How powerful, exactly? Oh, just 1.8 exaFLOPs, which is equivalent to 1,800 petaFLOPs, or 1.8 quintillion floating point operations per second. And this is not even Dojo, which Musk teased last year as being under development, calling it a "beast!" and able to "process truly vast amounts of video data" to train its autopilot technology.

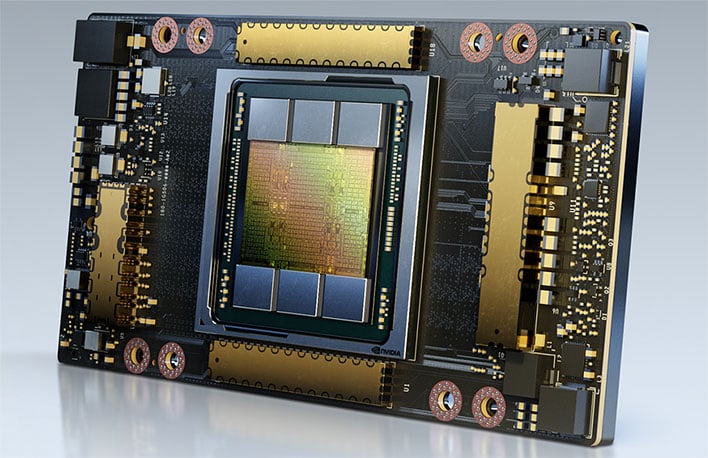

Dojo will come sometime later. In the meantime, Tesla senior director of artificial intelligence, Andrej Karpathy, revealed at the Conference on Computer Vision and Pattern Recognition (CVPR 2021 for short, because that's a mouthful) that Tesla already leverages a supercomputing cluster powered by hundreds of nodes and thousands of A100 machine learning GPUs based on Ampere.

Here are the highlights of Tesla's in-house supercomputer...

- 720 nodes of 8x A100 80GB (5,760 GPUs total)

- 1.8 exaFLOPS (720 nodes * 8 GPUs/node * 312 TFLOPS of FP16 per A100)

- 10 petabytes of "hot tier" NVMe storage @ 1.6 terabytes per second

- 640 terabits per second of total switching capacity
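For anyone who wants to check the math, the headline figure can be reproduced from the list above. The short sketch below is only a back-of-the-envelope calculation: it takes the 312 TFLOPS per-GPU number as a theoretical FP16 peak rather than a measured result, and the "full scan" time is our own illustrative derivation (assuming decimal units), not something Tesla stated.

```python
# Back-of-the-envelope check of the figures listed above.
# These are theoretical peaks, not benchmark results.
NODES = 720
GPUS_PER_NODE = 8
FP16_TFLOPS_PER_GPU = 312  # per-A100 peak FP16 figure quoted in the list

total_gpus = NODES * GPUS_PER_NODE
peak_tflops = total_gpus * FP16_TFLOPS_PER_GPU
peak_exaflops = peak_tflops / 1_000_000  # 1 exaFLOPS = 1,000,000 teraFLOPS

# Illustrative only: how long one pass over the whole hot tier would take
# at the quoted bandwidth, assuming 1 PB = 1,000 TB.
HOT_TIER_PB = 10
HOT_TIER_TB_PER_SEC = 1.6
full_scan_seconds = HOT_TIER_PB * 1_000 / HOT_TIER_TB_PER_SEC

print(f"GPUs: {total_gpus}")
print(f"Peak FP16: {peak_exaflops:.3f} exaFLOPS")
print(f"Full hot-tier scan: about {full_scan_seconds / 60:.0f} minutes")
```

The result lands at roughly 1.797 exaFLOPS, which is where the rounded 1.8 figure comes from.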

Karpathy called it an "insane" supercomputer that is actively being used by Tesla to handle the kind of massive datasets needed to continue developing and fine-tuning the company's autonomous technologies. Tesla has not actually benchmarked the system, but Karpathy believes it would land near the front of the pack.

Why is such immense power needed? Tesla's vehicles record video from eight different cameras, each running at 36 frames per second. The resulting training dataset runs to around 1 million 10-second video clips, totaling a staggering 1.5 petabytes of data. All that data is used to train Tesla's deep neural networks for its autopilot and self-driving technologies, and that is where a supercomputer of this magnitude comes into play.
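To get a feel for the scale of that dataset, here is a rough sketch of the arithmetic. It rests on two assumptions of ours that the talk did not spell out: that each 10-second clip covers all eight cameras, and that the full 1.5 petabytes is video (in practice some of it is likely labels and metadata). Treat the per-frame figure as an order-of-magnitude estimate only.

```python
# Rough dataset arithmetic from the figures quoted above.
# ASSUMPTION: every clip contains footage from all eight cameras.
# ASSUMPTION: the 1.5 PB total is entirely video (decimal units).
CAMERAS = 8
FRAMES_PER_SECOND = 36
CLIP_SECONDS = 10
CLIPS = 1_000_000
DATASET_BYTES = 15 * 10**14  # 1.5 petabytes

frames_per_clip = CAMERAS * FRAMES_PER_SECOND * CLIP_SECONDS
total_frames = frames_per_clip * CLIPS
bytes_per_clip = DATASET_BYTES // CLIPS
bytes_per_frame = bytes_per_clip / frames_per_clip

print(f"Frames per clip: {frames_per_clip:,}")
print(f"Total frames: {total_frames:,}")
print(f"Average clip size: {bytes_per_clip / 1e9:.1f} GB")
print(f"Average per frame: about {bytes_per_frame / 1e6:.2f} MB")
```

Under those assumptions the dataset holds nearly 2.9 billion frames, which helps explain why thousands of GPUs are needed to train on it in a reasonable amount of time.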

This also lets Tesla's engineers experiment with and develop new solutions.

"Computer vision is the bread and butter of what we do and enables Autopilot. For that to work, you need to train a massive neural network and experiment a lot," Karpathy added. "That’s why we’ve invested a lot into the compute."

The investment is ongoing. Karpathy said Tesla is still working on Dojo, but is not yet ready to provide additional details. We suspect Dojo is an extension of the existing supercomputer rather than a brand new one.