Intel Xeon Scalable Debuts: Dual Xeon Platinum 8176 With 112 Threads Tested

Xeon Scalable Processor Platform Details And AMD Naples Comparison

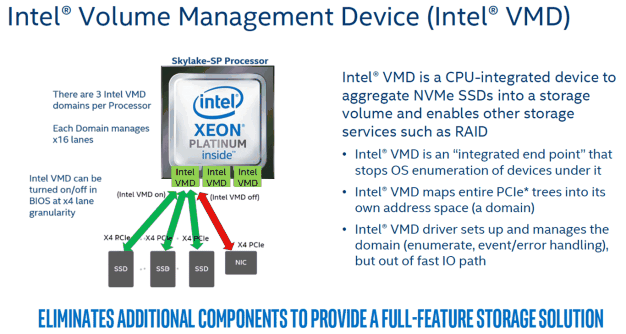

The new Intel Xeon Scalable series processors based on Skylake-SP also feature a new, built-in Volume Management Device (VMD). This feature will seem similar to VROC (Virtual RAID On CPU), which debuted with the Core X series and X299 chipset, but VMD is what actually enables VROC.

With Intel VMD, up to 24 drives can be configured in a single RAID volume that is directly attached to the processor. There are three VMD domains within each CPU, each of which can manage 16 PCI Express lanes. RAID can be configured within a single domain, across domains, or even across CPUs. Arrays are bootable only if they remain within one VMD domain; RAID across multiple domains will not be bootable. Intel VMD is also compatible with other vendors' drives, provided the NVMe driver integrates support for VMD.
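To make the domain rules above a bit more concrete, here is a minimal Python sketch of them. The domain count and lane count come from the description above; everything else is our own illustration. The `Drive` class, the socket and domain numbering, and the x4-link-per-drive figure are assumptions, not anything from Intel's VMD or VROC software.

```python
from dataclasses import dataclass
from typing import Iterable

DOMAINS_PER_CPU = 3    # from the article: three VMD domains per CPU
LANES_PER_DOMAIN = 16  # from the article: 16 PCIe lanes per domain
LANES_PER_DRIVE = 4    # assumption: each NVMe drive uses a x4 link


@dataclass(frozen=True)
class Drive:
    """Hypothetical description of where an NVMe drive is attached."""
    cpu: int     # socket index (illustrative numbering)
    domain: int  # VMD domain index within that socket, 0..2


def drives_per_domain(lanes_per_drive: int = LANES_PER_DRIVE) -> int:
    """Directly attached drives that fit in one domain (no PCIe switches)."""
    return LANES_PER_DOMAIN // lanes_per_drive


def is_bootable(volume: Iterable[Drive]) -> bool:
    """A RAID volume is bootable only if every drive sits in one VMD domain."""
    domains = {(d.cpu, d.domain) for d in volume}
    return len(domains) == 1


same_domain = [Drive(cpu=0, domain=0), Drive(cpu=0, domain=0)]
cross_domain = [Drive(cpu=0, domain=0), Drive(cpu=0, domain=1)]
cross_cpu = [Drive(cpu=0, domain=0), Drive(cpu=1, domain=0)]
```

In this model, `is_bootable(same_domain)` is true, while the cross-domain and cross-CPU volumes are valid RAID sets that simply cannot be booted from.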

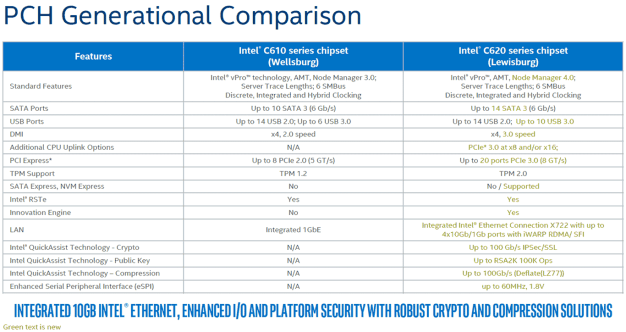

Along with the new Xeon Scalable series processors comes a new PCH (platform controller hub). The new Intel C620 (Lewisburg) series chipset is beefed up in a number of ways versus the previous-gen C610. There are more SATA and USB ports available, additional PCI Express lanes (up to 20), and support for NVMe and TPM 2.0. The C620 also offers support for integrated Intel X722 networking, with up to four 10GbE ports. The platform also supports Intel's Innovation Engine, which allows for differentiated firmware, as well as Intel QuickAssist Technology.

There will inevitably be comparisons made to AMD's EPYC enterprise processors, codenamed Naples. To quickly summarize AMD's alternative, the company has essentially linked together four 8-core dies on a single package to build a 32-core / 64-thread processor that also features a ton of PCI Express connectivity -- 128 lanes to be exact. A pair of EPYC processors can be used in a single server for a grand total of 64 cores / 128 threads of compute. EPYC also supports 8-channel memory configurations -- two channels per die.
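The EPYC numbers above all fall out of simple per-die multiplication, which the short Python sketch below spells out. The `EpycPackage` class and `server_totals` helper are hypothetical names of our own. The two-threads-per-core figure is inferred from the 32-core / 64-thread count rather than stated separately.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class EpycPackage:
    """Illustrative model of one Naples package, using the article's figures."""
    dies: int = 4
    cores_per_die: int = 8
    threads_per_core: int = 2      # inferred from 32 cores / 64 threads
    mem_channels_per_die: int = 2

    @property
    def cores(self) -> int:
        return self.dies * self.cores_per_die

    @property
    def threads(self) -> int:
        return self.cores * self.threads_per_core

    @property
    def memory_channels(self) -> int:
        return self.dies * self.mem_channels_per_die


def server_totals(pkg: EpycPackage, sockets: int = 2) -> Tuple[int, int]:
    """Return (cores, threads) for a server with the given socket count."""
    return pkg.cores * sockets, pkg.threads * sockets


naples = EpycPackage()
dual_socket = server_totals(naples)  # (64, 128) for a two-socket server
```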

AMD contends that its Infinity Fabric and its Zen micro-architecture afford greater flexibility, with minimal performance impact. Intel's newest Xeons, however, use a monolithic die with every processor core integrated on a single piece of silicon. Between the monolithic die, its new mesh interconnect, larger L2, and Skylake's architecture improvements, Intel boasts better integration with processors that are purpose-built for data centers.

We have yet to perform any independent tests on AMD's EPYC, though we did find some performance data available in SiSoft's database, which appears in the multi-core efficiency tests a couple of pages ahead. Ultimately, though, we'd expect to see mixed results in a Xeon Scalable vs. AMD EPYC battle at this juncture. Intel's architecture will pull ahead with some workloads, while AMD's additional cores and I/O will allow it to pull ahead in others.

AMD does have a big disadvantage with virtualization, however. It remains to be seen how virtual machines will behave when data has to jump not only across cores / CCXs, but across dies as well. A 10-core virtual server, for example, would have to leverage 8 cores from one die and 2 cores from another. The additional latency that will inevitably come from having to send data from one die to another will likely affect performance negatively with some workloads.
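The 10-core example is easy to generalize. The Python sketch below shows how many dies a virtual machine of a given size would touch, assuming a naive fill-one-die-first placement. That placement is our simplification for illustration only. Real hypervisors and NUMA policies decide actual core placement, and the `split_across_dies` and `dies_spanned` helpers are hypothetical.

```python
from typing import List

CORES_PER_DIE = 8  # from the article: each Naples die has 8 cores


def split_across_dies(vcpus: int, cores_per_die: int = CORES_PER_DIE) -> List[int]:
    """Greedily fill whole dies first; return the cores used on each die."""
    if vcpus < 0:
        raise ValueError("vcpus must be non-negative")
    full_dies, remainder = divmod(vcpus, cores_per_die)
    return [cores_per_die] * full_dies + ([remainder] if remainder else [])


def dies_spanned(vcpus: int, cores_per_die: int = CORES_PER_DIE) -> int:
    """Number of dies the VM touches under the greedy placement above."""
    return len(split_across_dies(vcpus, cores_per_die))


ten_core_vm = split_across_dies(10)  # [8, 2]: 8 cores on one die, 2 on another
```

Under this model, any VM with more than 8 cores crosses at least one die boundary, which is where the extra latency would come into play.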

Intel was also quick to point out that there is currently no virtual machine interoperability between Xeons and EPYC. If AMD had a major presence in the data center, that would be less of an issue, but as of today, Intel has virtually all of the market cornered, so IT admins managing many virtual machines on Intel hardware would have to re-create them from the ground up on EPYC.
