Exploring NVIDIA Jetson Orin Nano: AI And Robotics In The Palm Of Your Hand

Experimenting with NVIDIA's JetPack 5.1 SDK and Isaac, Plus Key Takeaways

Our testing time with the Jetson Orin Nano Developer Kit was done with a preview version of JetPack 5.1.1 that includes support for the new Nano architecture. It came pre-flashed on a 64GB SD card, but no other setup work had been done, so when we fired up the system we needed to create a user account and walk through the normal Ubuntu Desktop startup procedure. That means we had a fresh out-of-the-box experience exactly like what buyers of this kit would have.

Benchmarking An AI Edge Device

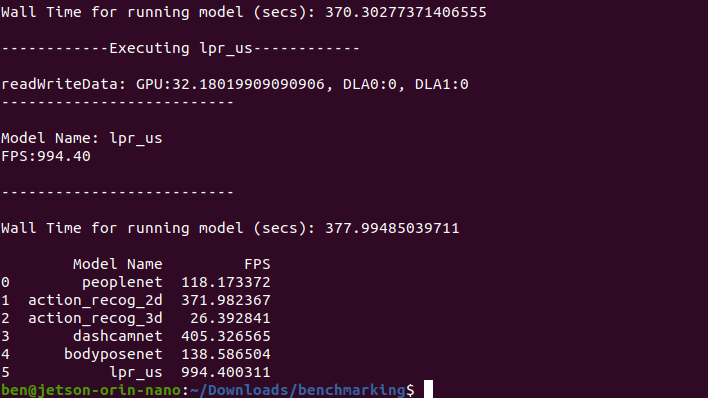

Just like we did with the Jetson AGX Orin Developer Kit, we can test the AI capabilities of the Jetson Orin Nano in a scripted benchmark provided by the company. The scripts do involve running AI models provided by NVIDIA on NVIDIA hardware, and since they're built for Tensor cores in its GPUs, they're not really applicable across multiple platforms. Unfortunately, that means there are no direct comparisons to other AI platforms like AMD Xilinx's Kria KR260 or KV260, for example, but that's fine.

Each test represents anywhere from seven to ten minutes of running a model against pre-recorded video and measuring performance. Metrics are presented above in frames of video per second, and as you can see, most of them run faster than real-time, and in a few cases much faster. The one exception is action recognition on 3D video, which uses stereo video from a pair of cameras so the AI model can infer depth by overlaying the two, much like our own eyes do.

If we compare these results against those obtained from the Jetson AGX Orin Developer Kit, which was running the older JetPack 5.0, we can see that even with the newly enhanced JetPack 5.1 SDK, there's still a huge performance delta. However, the quoted INT8 TOPS spec is more than 6x in favor of the AGX Orin, yet the faster Orin was only about 4x faster in these benchmarks. That in and of itself demonstrates how far JetPack has come in the last 12 months, as performance here would probably be much lower without those improvements.
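To put that gap in numbers, here is a rough back-of-the-envelope sketch. It uses only the approximate ratios quoted above (roughly 6x on paper, roughly 4x measured); the function name is our own, and the inputs are round figures rather than exact measurements.

```python
def scaling_efficiency(spec_ratio: float, measured_ratio: float) -> float:
    """Return the fraction of an on-paper advantage that shows up in a benchmark."""
    if spec_ratio <= 0:
        raise ValueError("spec_ratio must be positive")
    return measured_ratio / spec_ratio


# Approximate figures taken from the text, not exact measurements.
tops_ratio = 6.0  # AGX Orin vs. Orin Nano, quoted INT8 TOPS ("more than 6x")
speedup = 4.0     # AGX Orin vs. Orin Nano, observed in these benchmarks ("about 4x")

efficiency = scaling_efficiency(tops_ratio, speedup)
print(f"AGX Orin shows roughly {efficiency:.0%} of its on-paper lead")
```

Because the spec gap is described as "more than 6x", the real fraction would be somewhat lower than this estimate. Put another way, the Orin Nano performs better than its TOPS rating alone would suggest.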

Isaac And The Learning Machine

We also had the benefit of full access to NVIDIA's cloud platform via VMware Horizon. This let us use Isaac's AI modeling in an end-to-end workflow. NVIDIA provides all developers with several pre-trained AI models for a variety of tasks, and developers have the option of fine-tuning a model's training by generating additional materials for the AI to consume and train on in the cloud.

We used the People Segmentation model from NVIDIA's enterprise cloud services, NGC. That model had already been trained on a dataset with various camera heights, crowd densities, and fields of view. But it's just a starter model, and even NVIDIA itself says that using the model on a near-to-ground robot can result in poor accuracy because the original dataset didn't include a suitable camera angle.

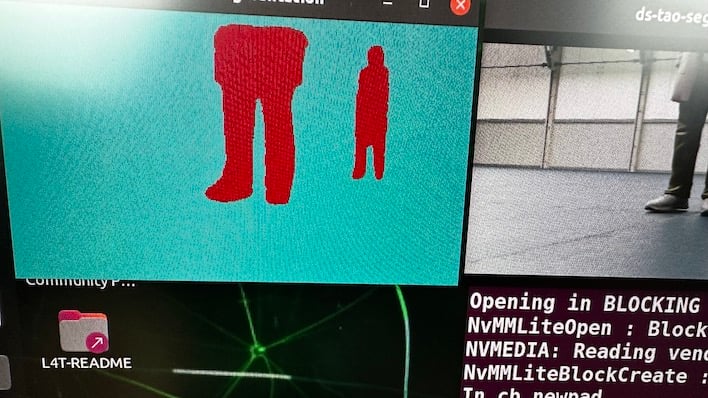

Red indicates an obstacle that the AI sees. In motion, this flickers and a robot may not see you.

Instead, we could use NVIDIA's AI procedural tools to generate video with the camera angles that our low-to-the-ground robot would need to see where it's going. We got to use Omniverse Replicator in Isaac Sim to create scenes, objects, and people, and added randomization to generate additional variations in the data. The synthetic data created by Omniverse Replicator is already annotated, so the AI training portion knows exactly what it's looking at.
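The randomization idea can be illustrated in a few lines of plain Python. This is only a conceptual sketch and does not use the Omniverse Replicator API; the `SceneParams` fields, the `sample_scene` helper, and every numeric range below are hypothetical values we picked for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class SceneParams:
    """Hypothetical knobs for one synthetic scene (not Replicator's own schema)."""
    camera_height_m: float   # low values mimic a near-to-ground robot
    camera_pitch_deg: float  # tilt of the camera up or down
    num_people: int          # how many people to place in the scene
    light_intensity: float   # relative brightness multiplier


def sample_scene(rng: random.Random) -> SceneParams:
    """Draw one randomized scene description; all ranges are assumptions."""
    return SceneParams(
        camera_height_m=rng.uniform(0.1, 0.5),
        camera_pitch_deg=rng.uniform(-10.0, 20.0),
        num_people=rng.randint(1, 8),
        light_intensity=rng.uniform(0.5, 1.5),
    )


# A fixed seed makes the batch reproducible from run to run.
rng = random.Random(42)
scenes = [sample_scene(rng) for _ in range(5)]
for scene in scenes:
    print(scene)
```

In a real pipeline, each sampled description would drive a renderer that also emits the matching annotations, which is the part Omniverse Replicator handles for us.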

We started out by downloading the pre-trained model and running it in an application hosted in a Docker container. Once we're done training the AI, we can run it again and see the results of our efforts. For now, however, the important thing to see is that this model just doesn't see shoes very well. In the photo above, the image on the left is what the robot detected, and the image on the right is what it actually saw in the simulation.

The result is that a robot trained on just this model might not see your feet as it strolls across a warehouse, and just might smash your toes. We don't want that, so let's get to optimizing.

Our optimization demo was fairly straightforward: Omniverse Replicator generated a batch of data on which to train the AI, and then we downloaded an updated model. We did this through a Jupyter Notebook, which is one part educational tool and one part scripting interface, so we could track our progress as we went.

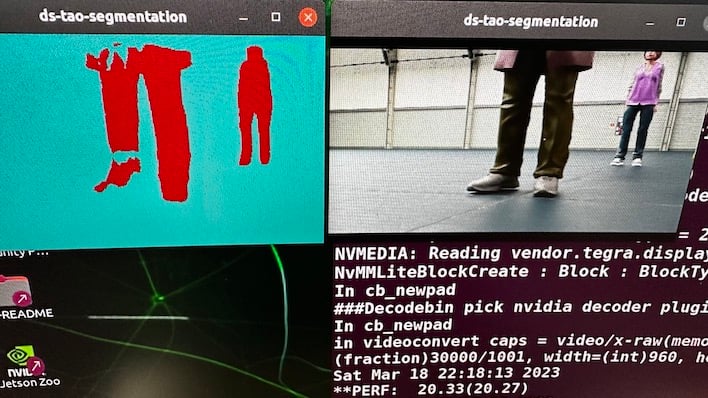

Once the training process was complete, we could then download the optimized model and run it again against the same test video, simulating what a robot low to the ground would see. In this next photo, we can see obstacles much more clearly, and nobody on the warehouse floor is likely to get their toes mashed by a passing robot.

In this use case, before the additional training, the AI model was only around 65% confident in what it saw: it couldn't see everything, and the objects it did see kept rapidly appearing, disappearing, or changing shape. Once the training optimization process was complete with data from Omniverse Replicator, however, the model was much more confident that it was seeing things clearly, and estimated that it was about 95% accurate. The only way to be sure would be to deploy it to a robot and turn it loose with a bunch of dummies standing in a room, but that's not exactly equipment that we had on hand.

Given that this was a guided exercise, it was pretty easy to complete. However, this was a way for folks like us who aren't specialized AI engineers to go through the process and better understand what's going on. At the very least, the tools seem to be relatively simple to use, and it's just a matter of knowing what you need to generate, telling Omniverse Replicator to create it, training the model, and then testing it out. We're sure there's a lot of trial and error involved, but iterations don't seem all that difficult to complete, as NVIDIA has iterated on this process for a while now.

Using PeopleNet Transformer

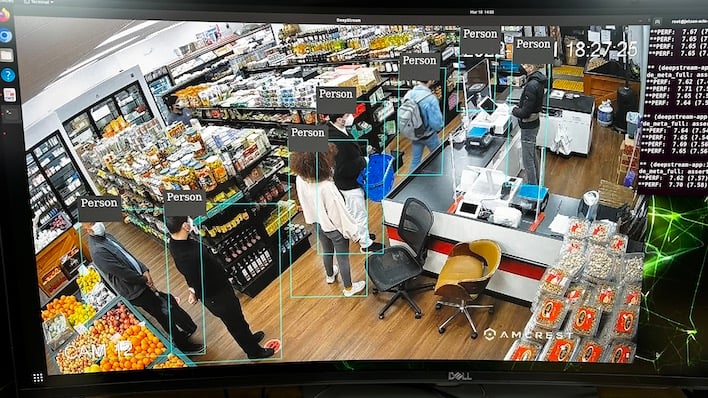

Another use case that we were able to test was a security camera in a convenience store or supermarket. AI needs to identify people and track them as they progress through the store, and possibly across multiple cameras at different angles. It might not be something that needs to be run all the time, but in the event of a robbery, it would make sense to set the AI loose on recorded footage. The PeopleNet transformer model does this sort of thing exactly, and NVIDIA provided access to an application using this model to identify folks.

This application draws a box around each distinct person that it sees, and tracks them as they wait in line or stroll the aisles of this convenience store. The one downside to running this on Jetson Orin Nano is that it only runs at around 7.5 frames per second, which is way slower than real-time on video that generally runs at 30 fps, or even 60 fps. A faster module, for instance the 32GB Jetson AGX Orin in the bigger Developer Kit, should be able to tear through this in something approaching real-time, at least for 30 fps video. However, for demonstration purposes, we can see that the AI is accurate and quick to identify people in each frame, even if it couldn't process all the frames exactly as they happen.
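To see what 7.5 fps means in practice, here is a small sketch of the arithmetic. The helper names are our own, and the frame-skipping idea is an assumption about how one might keep pace with a live feed, not something the demo application is known to do.

```python
import math


def processing_hours(footage_hours: float, video_fps: float, inference_fps: float) -> float:
    """Hours needed to run every frame of recorded footage through the model."""
    return footage_hours * video_fps / inference_fps


def frame_stride(video_fps: float, inference_fps: float) -> int:
    """Process only every Nth frame so inference keeps pace with a live feed."""
    return math.ceil(video_fps / inference_fps)


INFERENCE_FPS = 7.5  # observed PeopleNet Transformer rate on the Orin Nano

for fps in (30, 60):
    hours = processing_hours(1.0, fps, INFERENCE_FPS)
    stride = frame_stride(fps, INFERENCE_FPS)
    print(f"{fps} fps video: {hours:.1f} h per hour of footage, or every {stride}th frame live")
```

At 7.5 fps, one hour of 30 fps footage takes about four hours to analyze in full, and 60 fps footage takes about eight. That fits the after-the-fact review scenario described above better than live monitoring.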

Jetson Orin Nano is small. Really small.

Jetson Orin Nano Developer Kit Key Takeaways

NVIDIA had already created something impressive in the Jetson AGX Orin a year ago, but now it's taken those vast capabilities and scaled them down into a much more affordable and power-efficient package. The Jetson Orin Nano Developer Kit is more likely to be the sort of thing we'll see in AI edge devices like warehouse robotics or computer vision applications. The module's affordable price, strong AI inferencing abilities, and low power requirements make it a great candidate to deploy into a large fleet.

Perhaps even more impressive, however, is the software stack that forms the backbone of the developer experience. Tools to generate AI training materials or quickly and easily deploy updates over the air make the whole process from design to implementation much easier to manage. These are capabilities we just haven't seen in other robotics and computer vision platforms that we've tested, and that could make a big difference in accelerating the process of taking an early concept from an initial thought to an actual working machine. NVIDIA is committed to more than just edge devices too; the company has a complete robotics and vision AI software stack that includes really handy features like generated training content and a cloud architecture that allows for speedy model training. We just haven't seen anything quite like it in competing platforms so far, and it's applicable to all of NVIDIA's AI offerings.

If the Jetson AGX Orin Developer Kit was a fully-featured but somewhat cost-prohibitive platform, the scaled-down version found in the Jetson Orin Nano Developer Kit may be just the ticket a robotics creator needs. It doesn't have the focus on TSN or legacy IO like we've recently seen on other platforms, but there are a host of applications that just don't need that IO. Add in the speed at which one of these units can work and it's easy to see that the Jetson Orin Nano is an affordable, flexible, and powerful way to dive into the world of computer vision, robotics, and AI all in one package.