AMD-Xilinx Kria KV260 Starter Kit: Exploring Machine Vision AI

Xilinx Kria KV260 Vision AI Starter Kit: Let's Start Coding, Conclusions

Getting Started with NLP Smartvision

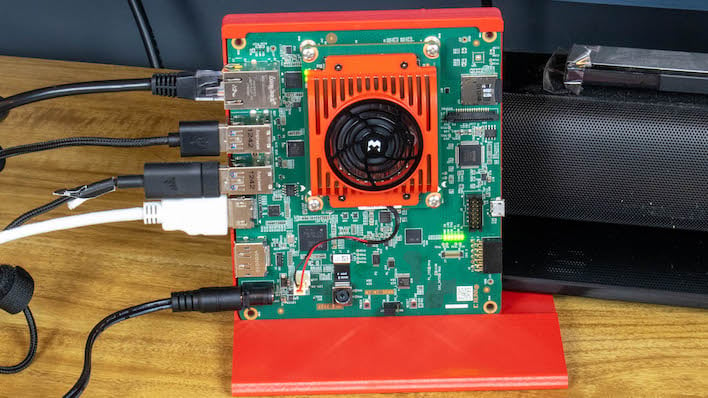

The Kria KV260 has an FPGA at its heart, so in Xilinx's parlance, we have to load an accelerator onto the FPGA. Basically, an accelerator is a compiled app built using Xilinx's Vitis platform, an integrated development environment (IDE) used to create applications in C++ or Python. Using a series of simple xlnx-config commands, we can download an accelerator directly from Xilinx, install it on our machine, and then load it into and unload it from the FPGA. Once the accelerator is loaded, it's off to the races.

Xilinx recommends that new users first load up the NLP Smartvision accelerator and take it for a spin before diving in. This builds familiarity with Xilinx's command line utilities and steps through the process of running an accelerator on the FPGA. It can all be accomplished in one fell swoop with a single command line outlined on the Xilinx wiki, or by installing manually (documented a couple of paragraphs lower on the same page). Whichever method you choose, once the accelerator is loaded, it's time to try it out. It works with either the 13-megapixel MIPI camera included in the accessory kit or a standard USB webcam, depending on the argument passed on the command line.

Remember that all of this is performed with on-device processing. The KV260 has an Ethernet connection, but that's just for grabbing software and getting online more generally. We're not sending the video stream to the cloud or processing it in a data center. While the KV260 Vision AI Starter Kit is a very affordable platform, it's still doing all of the work itself. It's also sipping power: while the accessory kit includes a 36-watt AC adapter, the board pulled only about 15 to 16 watts from the wall while running the app.

For more specific power consumption figures for just the FPGA, we ran the app and then fired up a second terminal. Using the Xilinx command line tools, we could see that the FPGA by itself was consuming about 6,500 mW, or 6.5 watts. Compared to the Jetson AGX Orin, which, again, uses GPU resources and has a higher thermal budget, this is almost nothing. In fact, just under seven watts is about half of the Orin's most conservative power setting, and the Orin has to turn off over half of its resources to hit that target. There's no configurable thermal budget here, so this figure represents something of a cap. Still, Xilinx's tools reported that the app could track at a rock-steady 30 frames per second with latency under 30 milliseconds. It's fast and fluid while sipping power.
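The figures above lend themselves to some quick arithmetic. The sketch below converts them into a per-frame time budget and per-frame energy. It uses only the numbers quoted in this article, except for `ORIN_MIN_MODE_W`, which assumes the Orin's most conservative preset is 15 W; treat the output as a back-of-the-envelope estimate rather than a measurement.

```python
# Worked arithmetic for the measurements quoted above.
FPS = 30.0                   # frame rate reported by Xilinx's tools
LATENCY_MS = 30.0            # reported upper bound on latency, in ms
FPGA_POWER_W = 6.5           # FPGA-only draw seen in the second terminal
WALL_POWER_W = (15.0, 16.0)  # whole-board draw measured at the wall
ORIN_MIN_MODE_W = 15.0       # ASSUMPTION: Orin's most conservative power preset


def frame_budget_ms(fps: float) -> float:
    """Milliseconds available per frame at a given frame rate."""
    return 1000.0 / fps


def energy_per_frame_mj(power_w: float, fps: float) -> float:
    """Energy per frame in millijoules (a watt is one joule per second)."""
    return power_w / fps * 1000.0


if __name__ == "__main__":
    print(f"Frame budget: {frame_budget_ms(FPS):.1f} ms (reported latency < {LATENCY_MS:.0f} ms)")
    print(f"FPGA only: {energy_per_frame_mj(FPGA_POWER_W, FPS):.0f} mJ per frame")
    for watts in WALL_POWER_W:
        print(f"Whole board at {watts:.0f} W: {energy_per_frame_mj(watts, FPS):.0f} mJ per frame")
    print(f"FPGA draw vs. Orin's lowest preset: {FPGA_POWER_W / ORIN_MIN_MODE_W:.0%}")
```

Run as a script, it shows that sub-30 ms latency fits inside the roughly 33.3 ms budget that 30 fps allows, that the FPGA spends about 217 mJ per frame, and that the whole board at the wall spends roughly 500 to 533 mJ per frame.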

NLP Smartvision has three different modes: Face Detect, Plate Detect, and Object Detect. Face Detect and Object Detect are pretty straightforward based on their names, but what about Plate Detect? Remember earlier when we talked about traffic enforcement? That's right, it's license plate detection. The AI needs to be able to read a license plate number in order to send a ticket when a car runs a red light or speeds in a construction zone. NLP Smartvision includes an implementation to do just that.

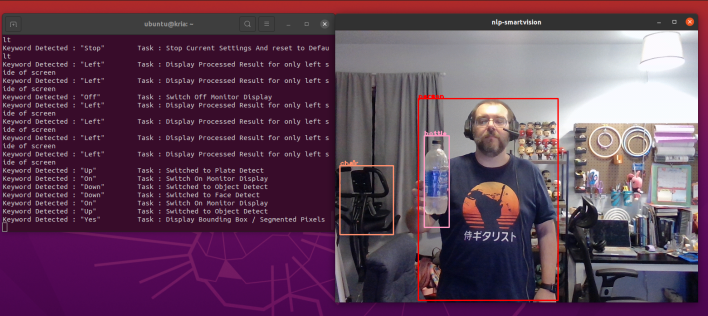

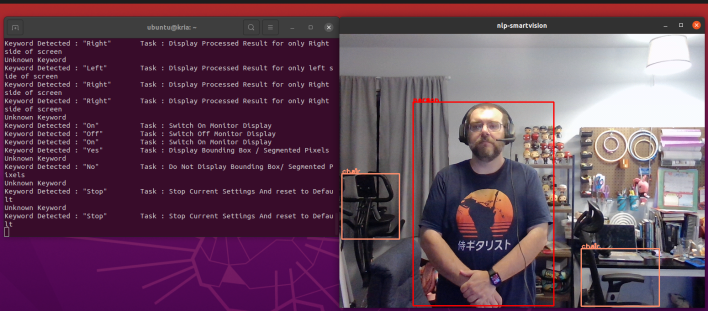

The interface for NLP Smartvision is pretty minimal, and considering that NLP stands for Natural Language Processing, it's also intuitive. There's rudimentary voice recognition built in, which listens for certain keywords that tell the app to cycle through the different modes and modify the settings. Say a keyword, and the app takes the corresponding action and logs it to the console in the terminal window. To be clear, voice recognition is not the main purpose of this app, and it showed: it occasionally had trouble with keywords in my Midwestern accent, and it often reported an unknown keyword based on sounds in the environment when I hadn't said anything at all. At any rate, it was functional.
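The general pattern described above, where a recognized keyword maps to an action and anything else is logged as unknown, can be sketched as a small dispatcher. This is not NLP Smartvision's code: the keywords `next`, `back`, and `boxes`, the `VoiceController` class, and the box-toggle setting are all hypothetical, and the app's real keyword list may differ.

```python
from typing import Callable, Dict, List

# Mode order as listed in this article; the real app's order may differ.
MODES: List[str] = ["Face Detect", "Plate Detect", "Object Detect"]


class VoiceController:
    """Hypothetical keyword-to-action dispatcher; keywords are illustrative only."""

    def __init__(self) -> None:
        self.mode_index = 0
        self.show_boxes = True
        self.log: List[str] = []
        # Each recognized keyword maps to one action.
        self.actions: Dict[str, Callable[[], None]] = {
            "next": self._next_mode,
            "back": self._previous_mode,
            "boxes": self._toggle_boxes,
        }

    @property
    def mode(self) -> str:
        return MODES[self.mode_index]

    def _next_mode(self) -> None:
        self.mode_index = (self.mode_index + 1) % len(MODES)

    def _previous_mode(self) -> None:
        self.mode_index = (self.mode_index - 1) % len(MODES)

    def _toggle_boxes(self) -> None:
        self.show_boxes = not self.show_boxes

    def handle(self, keyword: str) -> None:
        """Run the action for a keyword, or log it as unknown (e.g. background noise)."""
        action = self.actions.get(keyword.lower())
        if action is None:
            self.log.append(f"Unknown keyword: {keyword!r}")
            return
        action()
        self.log.append(f"{keyword} -> mode={self.mode}, boxes={self.show_boxes}")


if __name__ == "__main__":
    ctl = VoiceController()
    for word in ["next", "cough", "next", "back", "boxes"]:
        ctl.handle(word)
    print(*ctl.log, sep="\n")
```

A dictionary of bound methods keeps the keyword table in one place, so adding a command means adding one entry rather than another branch.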

Meanwhile, the camera's video feed is displayed on the screen, and NLP Smartvision's detection mechanisms get to work drawing boxes around items. Face detection works well, drawing a box around each face in the room. The game room in my house has a couple of shelves full of Funko Pop vinyl figures, and I deliberately put them in the shot to see if they could fool NLP Smartvision. They couldn't: the app only tracked actual faces in the frame. This is good stuff.

The Object Detect model looks for objects it can recognize in the room, and I could fool it from time to time. My stationary bike was identified as a chair, and a cup of water was labeled as a bottle. However, it also correctly labeled a bottle of water as a bottle, and it could identify an office chair from just its arm. It's not perfect, but it's certainly a proof of concept that works well enough for developers to get started.
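Drawing one clean box per object usually depends on a post-processing step after the network runs. The sketch below shows one common approach, a confidence threshold followed by per-class greedy non-maximum suppression (NMS). It is a generic illustration, not code taken from NLP Smartvision or Vitis AI; the `Detection` type, the pixel coordinates, and the 0.5 and 0.45 thresholds are assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    label: str
    score: float  # model confidence in [0, 1]
    x1: float
    y1: float
    x2: float
    y2: float


def iou(a: Detection, b: Detection) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a.x1, b.x1), max(a.y1, b.y1)
    ix2, iy2 = min(a.x2, b.x2), min(a.y2, b.y2)
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a.x2 - a.x1) * (a.y2 - a.y1)
    area_b = (b.x2 - b.x1) * (b.y2 - b.y1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def postprocess(dets: List[Detection], min_score: float = 0.5,
                max_iou: float = 0.45) -> List[Detection]:
    """Drop low-confidence boxes, then apply per-class greedy NMS."""
    candidates = sorted((d for d in dets if d.score >= min_score),
                        key=lambda d: d.score, reverse=True)
    kept: List[Detection] = []
    for d in candidates:
        # Keep a box unless a higher-scoring box of the same class overlaps it heavily.
        if all(k.label != d.label or iou(k, d) <= max_iou for k in kept):
            kept.append(d)
    return kept


if __name__ == "__main__":
    raw = [
        Detection("bottle", 0.91, 10, 10, 50, 120),
        Detection("bottle", 0.80, 12, 14, 52, 118),  # near-duplicate of the first box
        Detection("chair", 0.62, 200, 40, 320, 260),
        Detection("cup", 0.30, 400, 80, 440, 130),   # below the score threshold
    ]
    for d in postprocess(raw):
        print(d.label, d.score)
```

Here the duplicate bottle box overlaps the first by an IoU of about 0.86 and is suppressed, while the low-confidence cup never makes it past the threshold, leaving one bottle and one chair.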

Lastly, we didn't have any old license plates lying around, so we printed some faux license plates onto paper. NLP Smartvision had absolutely no trouble identifying large strings of text as license plates and highlighting the values in boxes. This is exactly the sort of thing an automated ticketing system needs, much to the chagrin of red light runners and speeders across the globe.

Xilinx Code Samples

Developers interested in figuring out how NLP Smartvision -- or any of the company's K26 apps, for that matter -- works can visit the Xilinx repos on GitHub. The app itself is actually fairly minimal. The C++ code on GitHub references the actual AI models in the Vitis-AI repository, and this is where developers can see the sausage get made. There happen to be a whole lot of AI libraries already in Xilinx's back pocket, including the face, object, and license plate detectors, but also applications for medical screening, retina scans for biometric authentication, vehicle classification, and much more. We could spend days digging through the Xilinx AI libraries repository, and so can anybody with an eye towards machine learning on FPGAs.

What's great about Xilinx's Vitis platform is that it doesn't require any FPGA programming experience. Those who want to get into it can learn all about HDL design with Vivado, but anybody can start with the company's preconfigured AI accelerators and tune them with programs written in C++ or Python using industry-standard frameworks like TensorFlow or PyTorch. The company provides documentation on, for example, how to migrate from CUDA to Vitis, and has been courting developers since before the KV260's release in 2021.

As you can see, NLP Smartvision is far from the only app available for testing on the Kria KV260. In fact, Xilinx has gone so far as to set up an app store full of the company's own demo apps as well as vision AI tools sold by third-party developers. We could browse the App Store for Kria SoMs using the Firefox browser included in the Ubuntu image and download the software directly. Accelerators provided by third parties for evaluation purposes are time-limited. At the end of the evaluation period, users can subscribe to or buy perpetual licenses for the accelerators they wish to keep directly through Xilinx. This effectively turns Xilinx into the Apple App Store or Google Play of the AI world, providing both the hardware and a means to acquire software for it. AI Tools as a Service (ATaaS, you read it here first at HotHardware) is a new and largely unexplored land of opportunity, and Xilinx seems to have the concept worked out.

Exploring the Xilinx App Store for Kria

There are plenty of other apps in the App Store for Kria if your setup suits them. AI Box with ReID (short for Re-Identification), for example, really calls for a network of cameras in different rooms feeding into a single Kria device. AI Box with ReID can track people as they move through a building, or a campus full of buildings, and outline each person in a box of their own specific color. Following people from camera to camera is something a security team would otherwise have to do manually by monitoring different feeds, but now it can be done via AI on the device itself.

Xilinx's other public Vision AI app is defect detection, which inspects images of objects for even the tiniest flaw. The first obvious target for defect detection is manufacturing. A MIPI camera connected to the board can take images of, for example, a PCB and look for cold solder joints or other defects. The company's own demo, however, goes into agriculture and looks for defects on pieces of fruit, where it can detect spots on an apple with 90% accuracy. Xilinx says that kind of accuracy makes it good enough for a wide array of automated manufacturing applications, where it can help reduce the number of defective items that make it out of the factory and into customers' hands.
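Re-identification across cameras is commonly done by comparing appearance embeddings (feature vectors produced by a neural network) against a gallery of people already seen. The sketch below shows that matching step with cosine similarity and a stable per-identity box color, mirroring how AI Box with ReID outlines each person in their own color. It is an assumption-laden illustration, not AI Box's implementation: the `ReIdGallery` class, the 0.8 threshold, the palette, and the toy 3-D embeddings are all hypothetical, and real embeddings are far longer.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
# Hypothetical RGB palette; identities wrap around once it is exhausted.
PALETTE: List[Tuple[int, int, int]] = [(255, 0, 0), (0, 200, 0), (0, 0, 255), (255, 165, 0)]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two embeddings."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ReIdGallery:
    """Hypothetical cross-camera identity store keyed by appearance embeddings."""

    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold
        self.embeddings: Dict[int, Vector] = {}

    def identify(self, embedding: Vector) -> int:
        """Return the best-matching known identity, or register a new one."""
        best_id, best_sim = -1, -1.0
        for pid, ref in self.embeddings.items():
            sim = cosine(embedding, ref)
            if sim > best_sim:
                best_id, best_sim = pid, sim
        if best_sim >= self.threshold:
            return best_id
        new_id = len(self.embeddings)
        self.embeddings[new_id] = embedding
        return new_id

    @staticmethod
    def color(pid: int) -> Tuple[int, int, int]:
        """The same identity gets the same box color on every camera."""
        return PALETTE[pid % len(PALETTE)]


if __name__ == "__main__":
    gallery = ReIdGallery()
    lobby = gallery.identify([0.9, 0.1, 0.0])       # person seen by the lobby camera
    other = gallery.identify([0.0, 0.2, 0.95])      # a different person
    hallway = gallery.identify([0.85, 0.15, 0.05])  # same person, hallway camera
    print(lobby, other, hallway, gallery.color(hallway))
```

The threshold is the key design knob: set it too low and different people merge into one identity, too high and the same person gets a new box color every time they change cameras.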

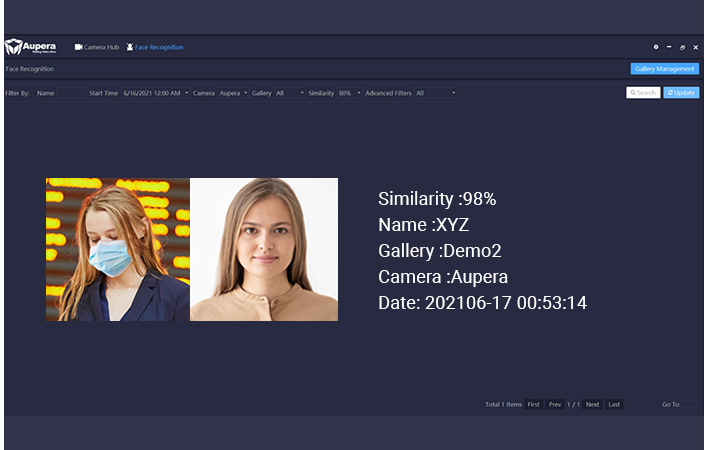

One of the more interesting and potentially controversial apps available for evaluation is Aupera's Face Recognition for Kria tool. The application can recognize people it's seen before, which is handy for tracking movements through multiple cameras. It can also detect when someone is wearing a mask and even recognize previously seen people while they're masked, something that would no doubt have been very handy when we were all still masking up during the early stages of COVID-19. This one needs a library of photos to compare against and shows the results when they match. Of course, facial recognition has had its ups and downs from an ethical standpoint, but companies are continuing to work towards eliminating false positives and improving their methods.

In the case of Xilinx's apps, all the code is available online in the company's GitHub repositories right alongside the AI libraries and the source for the Kria xlnx-config application. The company's commitment to open source is a big boon for developers, although it's something that just about every player in the space does. NVIDIA posts code for its benchmarks and test apps, and so do other board makers. The goal is to make these platforms as friendly towards developers and customers as possible, so that they can easily deploy apps and buy plenty of edge devices like the K26 SoM. To that end, Xilinx has done an admirable job.

Xilinx Kria KV260 Vision AI Starter Kit: Conclusions

It probably goes without saying, but we'll say it anyway: the Kria KV260 Vision AI Starter Kit from AMD-Xilinx is not meant to compete with ultra high-end solutions like the Jetson AGX Orin Developer Kit we looked at earlier this year. Not only does the Jetson cost about seven times as much to buy, but the KV260 also consumes far less power and performs its AI acceleration tasks in a completely different way, using an FPGA rather than GPU resources. The Xilinx KV260 makes it easy to dip your toes in the water or dive right into making edge devices at an affordable price point of just $199, using accessories you probably have lying around the office.

Saving money is nice, but having sample code that walks through the process of collecting data, pushing it to the AI accelerator, and pulling out results is even better. Just like any other manufacturer that wants to be taken seriously, Xilinx has open-sourced a whole lot of code. Even better, its tools are also open source, so anybody who wants to contribute to the company's controllers and its modified Linux kernel can do so as well.

Our only caveat, which will no doubt be resolved pretty quickly, was getting started. We ran into a few issues getting our KV260 off the ground with Ubuntu 22.04. That release is still in beta, but it has the full support of Canonical itself along with Xilinx, and it should be easier to get running when it exits beta in the third quarter of this year. For now, Ubuntu 20.04.3 is stable, robust, and really easy to get going. And those who prefer the USB COM route can use the slimmer PetaLinux distribution to work in a tethered fashion. Even this caveat comes with plenty of alternative ways to get started. In our experience, the platform is solid today as long as you don't venture too far from release-level software. Betas are betas for a reason, after all.

In total, the Xilinx Kria KV260 Vision AI Starter Kit is a speedy, affordable, and (for an experienced developer) easy-to-grasp way to get into machine learning accelerated on an FPGA. There's no power-hungry GPU, and no resources go to waste, yet there's still enough horsepower here to get vision AI tasks done. Not only do you get over 250,000 programmable logic cells for developing your own AI accelerators, there's also plenty of connectivity via Gigabit Ethernet, MIPI camera ports, USB, and more. With a healthy early lineup of apps on the Kria App Store, Xilinx has also built an easy mechanism for developers to monetize their creations. Amateur AI fans and seasoned machine learning vets alike are bound to find something of value in the KV260 Vision AI -- and its sibling KR260 Robotics AI -- Starter Kits.