AMD Unveils EPYC With 3D V-Cache, Beastly Dual-Die Instinct MI200 GPU For Massive HPC Workloads

For chip companies, bigger profit margins can be found in the data center market, and that's often where we see new innovations manifest first before trickling into the consumer space. To wit, we know AMD is prepping a Zen 3 refresh with stacked 3D V-cache. While we patiently wait, AMD today introduced 3rd Gen EPYC processors with 3D V-cache. Combined with its equally new Instinct MI200 GPU accelerators, AMD claims to be arming data centers and high performance computing (HPC) customers with the arsenal they need for the exascale era.

That's the theme of today's unveiling—the need for exascale-capable hardware is now upon us, and AMD aims to fill that need with these new big iron products. Let's start the discussion with AMD's upgraded EPYC server processors, which remain compatible with the SP3 socket (so it's a drop-in upgrade, essentially).

AMD's 3rd Gen EPYC lineup based on Zen 3, now with 3D V-cache on board, is still being offered with up to 64 cores and 128 threads of big iron muscle. Only now they're beefed up with three times the L3 cache, equating to 804MB of total cache per socket, for what AMD claims will be a massive 50 percent average uplift across targeted workloads. Up to now, the figure that's been associated with 3D V-cache is 15 percent, which is how much AMD said gaming applications stand to gain from its eventual Zen 3+ lineup.
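For the curious, here is one plausible way the 804MB total could break down. This is only a sketch: the 64-core count, the tripled L3, and the 804MB total come from AMD's announcement, while the 256MB baseline L3 and the per-core L1 and L2 sizes are our assumptions about a 64-core Zen 3 part and are not stated above.

```python
# Rough cache tally for a 64-core 3rd Gen EPYC with 3D V-cache.
# Only CORES, the "three times the L3" multiplier, and the 804MB total
# come from the article; every per-level size below is an assumption.

CORES = 64                # from the article
BASELINE_L3_MB = 256      # assumption: L3 on a 64-core part without 3D V-cache
L3_MULTIPLIER = 3         # from the article: "three times the L3 cache"
L2_PER_CORE_MB = 0.5      # assumption: 512KB of L2 per core
L1_PER_CORE_MB = 0.0625   # assumption: 32KB instruction + 32KB data per core

l3_mb = BASELINE_L3_MB * L3_MULTIPLIER
l2_mb = CORES * L2_PER_CORE_MB
l1_mb = CORES * L1_PER_CORE_MB
total_cache_mb = l3_mb + l2_mb + l1_mb

print(f"L3: {l3_mb:.0f}MB, L2: {l2_mb:.0f}MB, L1: {l1_mb:.0f}MB")
print(f"Total cache per socket: {total_cache_mb:.0f}MB")
```

If those assumptions hold, the stacked L3 accounts for the overwhelming majority of the total, which is why AMD leads with the cache figure.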

It's not just about comparing with its own existing product stack, though. AMD is also claiming a bigger performance advantage over Intel's Xeon processors. For example, AMD says a 2P EPYC 75F3 system with 32 cores outpaces a 2P Xeon 8362 system (also with 32 cores) by up to 33 percent in Ansys Mechanical (Finite Element Analysis), up to 34 percent in Altair Radioss (Structural Analysis), and up to 40 percent in Ansys CFX (Fluid Dynamics).

AMD's message is that its updated EPYC processors are tailor-built for technical computing workloads. The addition of 3D V-cache allows them to crunch through things like crash simulations, chemical engineering, design verification, and the like at a much faster pace.

AMD Unveils Instinct MI200 Accelerators With Up To 128GB Of HBM2e

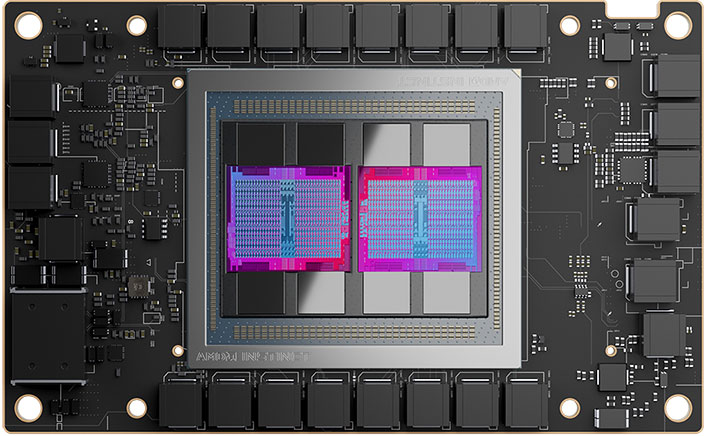

Upgraded EPYC processors are only part of the equation. AMD also announced new Instinct MI200 series accelerators that are the first to feature a multi-die GPU, and also the first to support a heaping 128GB of HBM2e memory.

The new series is built on AMD's CDNA 2 architecture, and culminates in the Instinct MI250X. According to AMD, the flagship accelerator delivers up to 4.9X better performance than "competitive accelerators" for double-precision (FP64) HPC applications, and tops 380 TFLOPs of peak theoretical half-precision (FP16) for AI workloads.

"AMD Instinct MI200 accelerators deliver leadership HPC and AI performance, helping scientists make generational leaps in research that can dramatically shorten the time between initial hypothesis and discovery," said AMD's Forrest Norrod. "With key innovations in architecture, packaging, and system design, the AMD Instinct MI200 series accelerators are the most advanced data center GPUs ever, providing exceptional performance for supercomputers and data centers to solve the world's most complex problems."

The primary competition here is NVIDIA and its Ampere architecture. According to AMD, the Instinct MI250X offers a "quantum leap in HPC and AI performance" over its rival's A100 product.

As for the specs, the Instinct MI250X wields 220 compute units and 14,080 stream processors. It also features the full 128GB HBM2e memory allotment, clocked at 1.6GHz and tied to an 8,192-bit bus, for up to 3.2TB/s of memory bandwidth. There's also a non-X variant (Instinct MI250) with 208 compute units, 13,312 stream processors, and the same memory configuration.
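Those numbers hang together if you run the math. The quick sketch below checks the stream processors per compute unit and the peak memory bandwidth from the figures above. The one thing we're assuming (it's not in AMD's spec list as quoted here) is that the HBM2e moves data twice per clock, as double data rate memory does. The function names are ours, purely for illustration.

```python
# Sanity check of the Instinct MI250X / MI250 specs quoted in the article.

# Assumption (not stated in the article): two data transfers per memory clock.
TRANSFERS_PER_CLOCK = 2


def stream_processors_per_cu(stream_processors: int, compute_units: int) -> float:
    """Stream processors per compute unit."""
    return stream_processors / compute_units


def peak_bandwidth_tb_s(bus_width_bits: int, clock_ghz: float,
                        transfers_per_clock: int = TRANSFERS_PER_CLOCK) -> float:
    """Peak theoretical memory bandwidth in TB/s (1 TB = 1000 GB)."""
    bytes_per_transfer = bus_width_bits / 8
    gb_per_s = bytes_per_transfer * clock_ghz * transfers_per_clock
    return gb_per_s / 1000


mi250x_ratio = stream_processors_per_cu(14_080, 220)
mi250_ratio = stream_processors_per_cu(13_312, 208)
bandwidth = peak_bandwidth_tb_s(bus_width_bits=8_192, clock_ghz=1.6)

print(f"MI250X: {mi250x_ratio:.0f} stream processors per compute unit")
print(f"MI250:  {mi250_ratio:.0f} stream processors per compute unit")
print(f"Peak memory bandwidth: {bandwidth:.2f} TB/s")
```

Both parts work out to the same number of stream processors per compute unit, and the bandwidth lands just under 3.3TB/s, which squares with the "up to 3.2TB/s" figure AMD quotes.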

The multi-die design with AMD's new 2.5D Elevated Fanout Bridge (EFB) technology enables 1.8X more cores and 2.7X higher memory bandwidth compared to AMD's previous-gen GPUs, the company says. It also benefits from up to eight Infinity Fabric links connecting the Instinct MI200 series to EPYC CPUs in the node, for unified CPU/GPU memory coherency.

AMD's overarching pitch is that data centers can combine its latest accelerators with its upgraded EPYC chips and ROCm 5.0 open software platform to "propel new discoveries for the exascale era." Specifically, AMD points to tackling climate change and vaccine research as two possible examples.

The Instinct MI250X and MI250 are both available in the open-hardware OCP Accelerator Module (OAM) form factor, while the latter will also be offered in a PCIe card form factor in OEM servers. Along with the upgraded EPYC chips, all of this will hit the market in the first quarter of next year.