Plug And Play Brain Prosthesis Leverages Machine Learning To Help Paralyzed Patients Control Computers

Imagine a world where you could control a computer with your mind. Researchers at the University of California, San Francisco (UCSF) Weill Institute for Neurosciences have created a brain-computer interface (BCI) that relies on machine learning. With it, paralyzed individuals were able to control a computer cursor using their brain activity, without the constant retraining that earlier systems required.

Past BCIs “...used ‘pin-cushion’ style arrays of sharp electrodes” that penetrated the brain. These arrays allowed for more “sensitive recordings,” but they were relatively short-lived. Researchers had to reset and recalibrate their systems every day, so participants essentially started from scratch each time they used the system. Relearning movements could take hours, and participants were often forced to give up before they achieved mastery. These systems therefore did not complement the way the brain naturally works, and it was difficult to gauge their full potential.

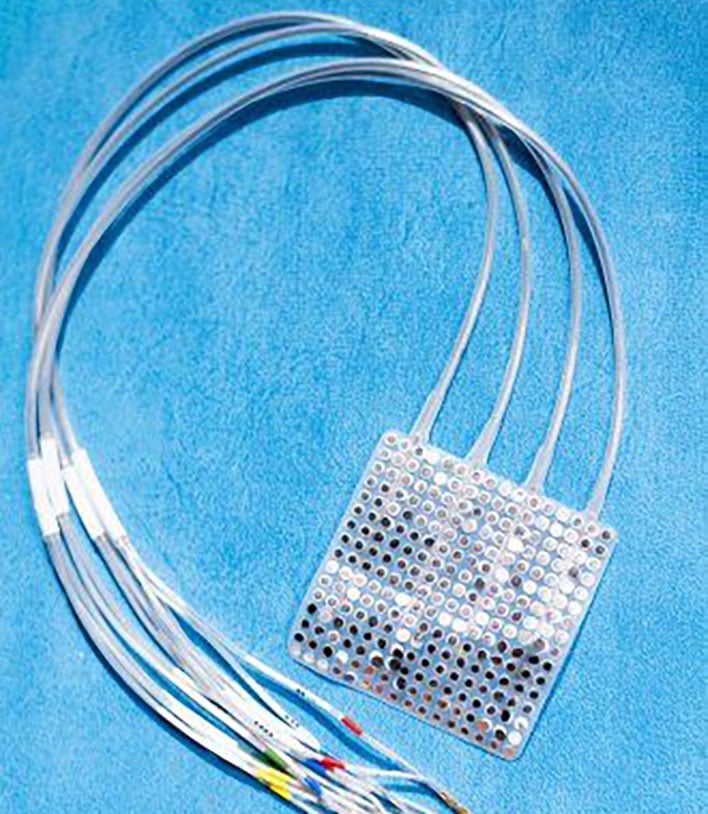

Instead, the researchers created a BCI algorithm that uses “...machine learning to match brain activity recorded by the ECoG electrodes to the user’s desired cursor movements.” An electrocorticography (ECoG) array is a pad of electrodes placed on the surface of the brain. Unlike past “pin-cushion” style arrays, the ECoG array can be used for “long-term” recordings.

Participants in the study would “...begin by imagining specific neck and wrist movements while watching the cursor move across the screen.” The algorithm then gradually learned to match the cursor movement to the brain activity. At first, the researchers reset their new system every day, but they eventually allowed the algorithm to keep updating without a reset. Within only a few days, participants were able to reach “top level performance” almost as soon as each session began, with little time lost.
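The article does not describe the UCSF team’s actual decoder. The contrast between resetting the system daily and letting it keep learning can still be illustrated with a toy model. The sketch below is hypothetical. It assumes a simple linear decoder that maps made-up “neural features” to 2-D cursor velocity and is trained online with the least-mean-squares (LMS) rule on synthetic data. The function `simulate` and its parameters are invented for illustration, and the model ignores the participant’s own learning entirely.

```python
import random


def simulate(days, trials_per_day, reset_daily, n_features=8, lr=0.02, seed=0):
    """Toy online calibration of a linear cursor decoder (hypothetical model).

    Returns the mean squared decoding error for each simulated day.
    """
    rng = random.Random(seed)
    # Hypothetical "true" mapping from neural features to 2-D cursor velocity.
    true_w = [[rng.uniform(-1, 1) for _ in range(n_features)] for _ in range(2)]
    w = [[0.0] * n_features for _ in range(2)]
    daily_error = []
    for _ in range(days):
        if reset_daily:
            # Old workflow: discard yesterday's decoder and start from scratch.
            w = [[0.0] * n_features for _ in range(2)]
        total = 0.0
        for _ in range(trials_per_day):
            # Synthetic feature vector standing in for one moment of brain activity.
            x = [rng.uniform(-1, 1) for _ in range(n_features)]
            # Intended velocity, plus a little noise standing in for recording noise.
            target = [sum(t * xi for t, xi in zip(row, x)) + rng.gauss(0, 0.05)
                      for row in true_w]
            pred = [sum(wi * xi for wi, xi in zip(row, x)) for row in w]
            err = [t - p for t, p in zip(target, pred)]
            total += sum(e * e for e in err)
            # LMS update: nudge each weight in the direction that reduces this error.
            for d in range(2):
                for j in range(n_features):
                    w[d][j] += lr * err[d] * x[j]
        daily_error.append(total / trials_per_day)
    return daily_error


reset = simulate(days=10, trials_per_day=50, reset_daily=True)
persistent = simulate(days=10, trials_per_day=50, reset_daily=False)
print(f"day 10 error, reset daily: {reset[-1]:.3f}")
print(f"day 10 error, never reset: {persistent[-1]:.3f}")
```

Both runs use the same seed, so they see identical synthetic data and are identical on day 1. From then on, the decoder that is reset every day repeats the same early-learning errors. The decoder that keeps its weights carries what it learned into each new session. This is only a cartoon of the idea in the paragraph above, not a result from the study.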

The system eventually began to mimic some of the ways the human brain behaves. For example, participants were able to “amplify patterns of neural activity” that were most useful for controlling the system, while suppressing less useful activity. Participants also developed their own individual patterns of activity for using the system. None of this had been possible while the system was reset every day.

The research was conducted as part of an effort to create “brain-controlled prosthetic limbs.” Combining the BCI algorithm with the ECoG array could be a game-changing development, especially for people who have lost limbs and those living with paralysis.

Scientists at RMIT University in Melbourne, Australia, have also recently developed electronic skin that can replicate the way human skin senses pain. The new technology relies on a combination of pressure, heat, and pain sensors. The scientists believe their creation could be particularly useful in robotics and for helping people with damaged skin.