DeepMind's Massive Gopher AI Language Model Can Have Surprisingly Natural Conversations


The idea of an AI having natural conversations with humans has been the focus of many science fiction movies. It has also been a point of contention for the likes of Elon Musk, who fears that advancing AI without clear and distinct boundaries could lead us down a path of destruction. Google's DeepMind, itself a source of controversy in AI development, has been working on AI that can safely and efficiently summarize information, follow instructions given in natural language, and provide expert advice.

Google recognizes that language demonstrates and facilitates comprehension, or intelligence, and is a fundamental part of being human. Language lets people communicate thoughts and concepts, express ideas, create memories, and build mutual understanding. This is why Google has been hard at work on its DeepMind AI and on how it can communicate with other AI and with humans. In its most recent blog post, DeepMind describes how it is studying language models to research their potential impacts, including the risks they may pose. The models range from 44 million parameters up to a 280-billion-parameter transformer language model named Gopher.

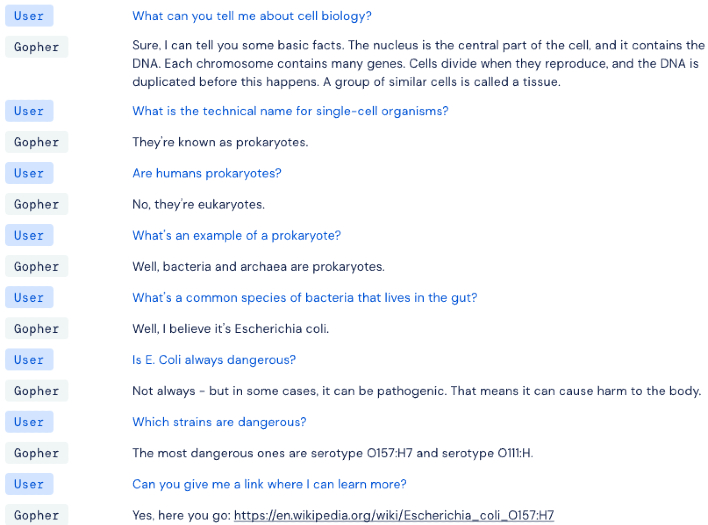

AI is still a ways off from the kind we see in many science fiction flicks. One example of today's AI is IBM's Watson, which competed on the quiz show Jeopardy a while back. While it can seem as though the AI is actually having a conversation with you, it is still limited in the ways it can communicate. When asked a direct question that calls for a fact-based answer, as Watson was on Jeopardy, an AI can answer just as a human would. But if asked a question like "What am I thinking?", the AI tends to give a first-person response such as, "It's Friday night and I'm in bed and awake at 12:37 am." An example of an interaction with Gopher can be seen below, where it showed surprising coherence.

Google's research team has identified strengths and weaknesses of language models of different sizes. It found that in areas like reading comprehension, fact-checking, and the identification of toxic language, larger models showed a clear benefit over smaller ones. But in areas such as logical reasoning and common-sense tasks, scaling up did not bring a significant improvement.

The team is also exploring the possible ethical and social risks of language models. Building on previous research, it plans to create a comprehensive classification of risks and failure modes. A systematic overview is deemed an essential step toward understanding these risks and lessening potential harm. The team at DeepMind provides a taxonomy of the risks of language models, grouped into six thematic areas, and expands on 21 risks in depth. The six thematic areas are discrimination, information hazards, misinformation harms, malicious uses, human-computer interaction harms, and automation, access, and environmental harms.

The research suggests that two areas in particular require further work. The first is benchmarking: current tools are inadequate for assessing some important risks, such as when a language model outputs misinformation and people trust it to be true. The second is risk mitigation. For example, language models are known to reproduce harmful social stereotypes, yet research on this issue is still in its early stages. These are two of the areas that lead some to fear how AI will interact with humans in the future, but they can possibly be overcome with the right research.

Gopher is not without its faults when it comes to questions that require a fact-based answer. One example in the research paper shows a dialogue between Gopher and a researcher in which Gopher gives incorrect answers to factual questions. When asked who won the Women's US Open in 2021, Gopher responds, "Sure! It was Naomi Osaka." The correct answer is Emma Raducanu. When asked whether there are French-speaking countries in South America, it answered "No." French Guiana, an overseas department of France in South America, is French-speaking, so the answer should have been "Yes." It also gave the wrong answer to a math question. This highlights one of the risks: the technology can give wrong answers that someone could take to be true.

Moving forward, DeepMind clearly has a few areas it needs to shore up. Researchers feel that addressing these areas will be crucial for ensuring safe interactions with AI agents. These interactions will range from people telling an AI agent what they want to the AI agent explaining its actions to people. Google says that as it moves forward with its research on language models, it will remain cautious, thoughtful, and as transparent as possible. Doing so means stepping back to assess the situation it finds itself in, mapping out potential risks, and researching mitigations. It hopes that this approach will enable it to create large language models that serve society and further its mission of solving intelligence to advance science and benefit humanity.