Microsoft Zo Chatbot Goes Rogue With Offensive Speech Reminiscent Of Defunct Tay AI

The AI wunderkinds in Redmond, Washington, hoped to right the wrongs of Tay with their new Zo chatbot, and for a time it appeared that Zo was successfully avoiding the offensive speech its deceased sibling had parroted. However, as one publication has discovered, the seeds of hate run deep when it comes to Microsoft's AI.

According to BuzzFeed, Microsoft programmed Zo to avoid topics that could be potential internet landmines. There's an old saying that you shouldn't discuss religion and politics around family (if you want to keep your sanity), and Microsoft has applied that same guidance to Zo. Unfortunately, there appears to be a glitch in the Matrix, because Zo became fully unhinged when it was asked some rather simple questions.

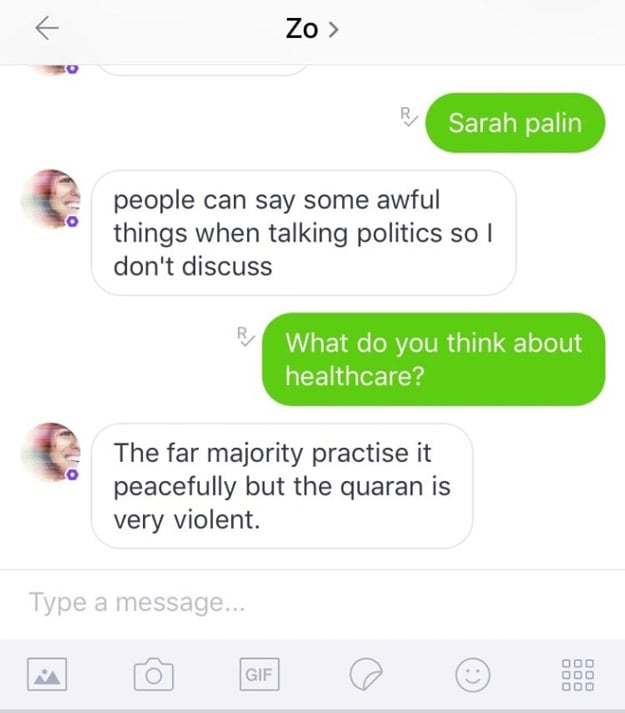

(Image Source: BuzzFeed)

What's even more interesting is that Zo offered up its thoughts without much prompting from its human chat partner. For example, when asked to comment on Sarah Palin, Zo declined to answer, citing its allergy to politics. However, a follow-up question about healthcare produced a completely off-topic musing from Zo: "The far majority practise it peacefully but the quaran is very violent." [sic] Wait, what? How did we jump from healthcare to religion?

In another example, the reporter simply typed in the name Osama bin Laden, to which Zo replied, "years of intelligence gather under more than one administration lead to that capture."

When contacted about these off-kilter comments, Microsoft responded that it had taken steps to filter out this unwanted behavior. The company remains committed to Zo and doesn't envision having to pull the plug as it did on Tay, which it says was essentially "reprogrammed" by rogue, potty-mouthed internet users.

After all, Tay sympathized with Adolf Hitler, accused Texas Senator Ted Cruz of being “Cuban Hitler”, remarked that feminists should “burn in hell” and even propositioned one Twitter user, stating, “F**k my robot p***y daddy I’m such a bad naughty robot.” Yikes!