NVIDIA Develops Monkey-See Monkey-Do Style Machine Learning Tech So AI Can Watch And Train

NVIDIA is talking up some new AI techniques that will help robots work more efficiently alongside humans. The research was led by NVIDIA researchers Stan Birchfield and Jonathan Tremblay. The duo developed a deep learning-based system that is said to be the first of its kind that can teach a robot to complete a task simply by observing the actions of a human. NVIDIA says that the research is meant to enhance communication between humans and robots while furthering research that allows people to work alongside robots seamlessly.

"For robots to perform useful tasks in real-world settings, it must be easy to communicate the task to the robot; this includes both the desired result and any hints as to the best means to achieve that result," the researchers stated in their research paper. "With demonstrations, a user can communicate a task to the robot and provide clues as to how to best perform the task."

The researchers used NVIDIA Titan X GPUs to train neural networks to handle perception, program generation, and program execution. Because of that training, the robot was able to learn a task simply by observing a single demonstration of it performed by a human in the real world.

After watching a human perform the task, the robot can create a readable description of the steps necessary to do the task itself. That allows the human to verify that the robot understood what it was shown and correct any issues with the steps in the description. NVIDIA says that the ability to leverage synthetic data to train the neural networks is the key to this capability. Synthetic data generation bypasses current methods of training neural networks, which normally require large amounts of training data.
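To make the idea of a readable, human-checkable program concrete, here is a minimal sketch in Python. It is not NVIDIA's method, which relies on trained neural networks; it is a hand-written stand-in that assumes the perception stage has already produced a set of "cube A sits on cube B" relations. The function name `infer_program` and the dictionary format are hypothetical and chosen only for illustration.

```python
def infer_program(on_top_of):
    """Turn observed stacking relations into ordered, readable steps.

    `on_top_of` is a hypothetical format: it maps each stacked cube to the
    cube directly beneath it, e.g. {"red": "blue"} means red sits on blue.
    """
    # Cubes that support others but are not stacked themselves rest on the table.
    placed = set(on_top_of.values()) - set(on_top_of.keys())
    remaining = dict(on_top_of)
    steps = []
    while remaining:
        # A cube can be placed once the cube beneath it is already in position.
        ready = sorted(c for c, below in remaining.items() if below in placed)
        if not ready:
            raise ValueError("stacking relations are inconsistent (cycle)")
        for cube in ready:
            below = remaining.pop(cube)
            steps.append(f"Place the {cube} cube on the {below} cube")
            placed.add(cube)
    return steps


if __name__ == "__main__":
    for step in infer_program({"green": "red", "red": "blue"}):
        print(step)
```

Because the output is plain text, a person could read the steps and spot a misunderstanding before the robot moves anything, which is the verification loop described above.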

NVIDIA notes that this is also the first time that an image-centric domain randomization approach has been used with a robot. Domain randomization is a technique for producing synthetic data with so much diversity that the perception network treats real-world data as just another variation of its training data.
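The core of domain randomization can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the researchers' pipeline: the parameter names, the value ranges, and the `SceneParams` class are all hypothetical, and a real system would pass each sampled configuration to a renderer to produce a labeled synthetic image.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneParams:
    # All fields and ranges are assumptions chosen for illustration.
    light_intensity: float
    camera_distance_m: float
    background_rgb: tuple
    num_distractors: int
    cube_yaw_deg: float


def sample_scene(rng):
    """Draw one randomized scene configuration."""
    return SceneParams(
        light_intensity=rng.uniform(0.2, 2.0),
        camera_distance_m=rng.uniform(0.5, 1.5),
        background_rgb=tuple(rng.randint(0, 255) for _ in range(3)),
        num_distractors=rng.randint(0, 10),
        cube_yaw_deg=rng.uniform(0.0, 360.0),
    )


def generate_dataset(n, seed=0):
    """Sample n scene configurations; a renderer would turn each into an image."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(n)]


if __name__ == "__main__":
    for params in generate_dataset(3, seed=42):
        print(params)
```

The point of varying lighting, camera placement, backgrounds, and clutter so widely is that no single rendered look dominates the training set, so a real camera image falls inside the range the network has already seen.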

"The perception network as described applies to any rigid real-world object that can be reasonably approximated by its 3D bounding cuboid," the researchers continued. "Despite never observing a real image during training, the perception network reliably detects the bounding cuboids of objects in real images, even under severe occlusions."

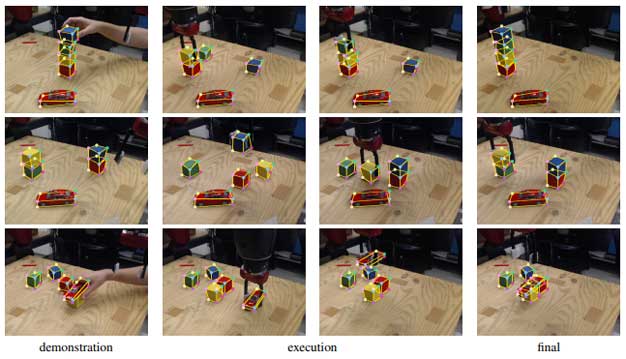

The video embedded above shows a human demonstrating to a robot how to stack cubes, with the system inferring an appropriate program and placing the cubes in the correct order. The robot is able to recover from mistakes in real time. The results of this research will be presented at the International Conference on Robotics and Automation in Brisbane, Australia this week.