NVIDIA Grace Next-Gen Arm CPU Delivers 10x Performance Lift For Giant-Scale AI And HPC Workloads

During its GTC 2021 keynote today, NVIDIA unveiled a new product for high performance computing (HPC) clients: its first-ever data center CPU, called Grace. Grace is based on Arm's architecture, and NVIDIA claims it delivers 10x better performance than the fastest servers currently on the market for complex artificial intelligence and HPC workloads.

To be clear, though this is NVIDIA's first data center CPU, it is not intended to compete head-to-head with Intel's Xeon lineup or AMD's EPYC processors. NVIDIA made a point of saying so, noting that it continues to provide full support for all big iron server CPUs, including x86, Arm, and Power architectures.

Instead, Grace is more of a niche product: it is designed specifically to be "tightly coupled" with NVIDIA's GPUs to open the spigot, so to speak, by removing bottlenecks for complex giant-model AI and HPC applications. It is in such scenarios that the claimed 10x performance jump comes into play, compared with today's high-end NVIDIA DGX-based systems (which run on x86 CPUs).

"Leading-edge AI and data science are pushing today’s computer architecture beyond its limits—processing unthinkable amounts of data," said Jensen Huang, founder and CEO of NVIDIA. "Using licensed Arm IP, NVIDIA has designed Grace as a CPU specifically for giant-scale AI and HPC. Coupled with the GPU and DPU, Grace gives us the third foundational technology for computing, and the ability to re-architect the data center to advance AI. NVIDIA is now a three-chip company."

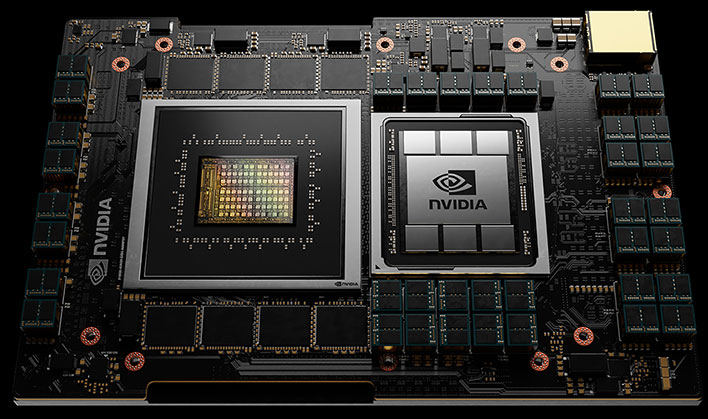

NVIDIA is not ready to talk about core counts, but did say that Grace is built on a 5-nanometer manufacturing process. Shown above is the entire Grace module, which plops the new CPU next to an NVIDIA GPU, with a high-speed interconnect between the two. Using NVLink, Grace serves up over 900GB/s of bandwidth between the CPU and GPU. All told, NVIDIA says the module is capable of training trillion-parameter models and running inference on them in real time.

Interestingly, NVIDIA said it worked with its partners to create a data center version of LPDDR5 memory for Grace. It brings the benefits of error correcting code (ECC) technology while delivering over 500GB/s of memory bandwidth. And because LPDDR5 was designed for the mobile and embedded space, it is energy efficient.

NVIDIA is holding back some fine-grained details about the underlying Arm architecture, but did say it is based on Neoverse.

Grace is slated to arrive in 2023, if all goes to plan. NVIDIA notes that while most data centers will continue to be served by existing CPUs, Grace will slip into niche roles. It has already lined up orders from the Swiss National Computing Centre (CSCS) and the US Department of Energy's Los Alamos National Laboratory, both of which will build supercomputers around Grace.