NVIDIA Unlocks Higher Quality Textures With Neural Compression For 4X VRAM Savings

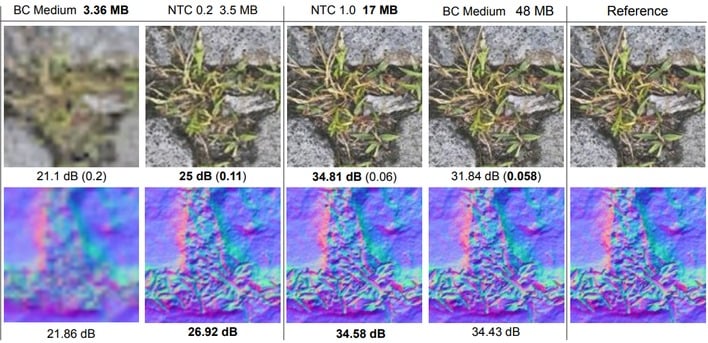

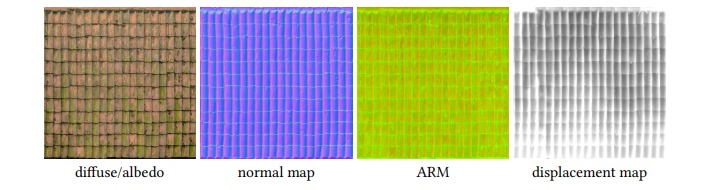

What if we could use a neural network to improve video game textures? That's basically the question that NVIDIA's new whitepaper asks. Titled "Random-Access Neural Compression of Material Textures", the paper describes a novel method of compressing game material textures that can store up to 16x more data in the same space as traditional block-based compression methods.
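To put "16x more data in the same space" in concrete terms, here is a rough back-of-the-envelope sketch. It reads the claim as 16x more texels and uses two common block-compressed formats, BC1 and BC7, as example baselines. Both of those are assumptions made for illustration: the article doesn't name a baseline format, and the paper compresses whole multi-channel materials rather than single textures.

```python
import math

# Fixed-rate block-compressed (BC) formats store every 4x4 texel block in a fixed number of bits.
TEXELS_PER_BLOCK = 4 * 4
BC_BITS_PER_BLOCK = {"BC1": 64, "BC7": 128}  # example baselines, chosen for illustration

def bits_per_texel(bits_per_block: int) -> float:
    """Storage cost of one texel in a fixed-rate block format."""
    return bits_per_block / TEXELS_PER_BLOCK

def budget_at_density(baseline_bpp: float, texel_gain: float) -> float:
    """Bits left per texel if `texel_gain` times as many texels must fit in the same space."""
    return baseline_bpp / texel_gain

def per_axis_scale(texel_gain: float) -> float:
    """Resolution increase along each axis of a square texture."""
    return math.sqrt(texel_gain)

for name, bits in BC_BITS_PER_BLOCK.items():
    bpp = bits_per_texel(bits)
    print(f"{name}: {bpp} bpp today, {budget_at_density(bpp, 16)} bpp at 16x texels")
print(f"16x texels = {per_axis_scale(16)}x per axis, e.g. 1024x1024 -> 4096x4096")
```

Under these assumptions, the neural format would have to spend only 0.25 to 0.5 bits per texel. That budget is far below what fixed-rate block formats can reach, which is why a learned representation is needed at all.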

If you read the above, you're probably expecting me to tell you that NVIDIA is just running a neural upscaler on a low-detail stored texture. To be clear, that's not what's happening; in fact, it's not even close. Instead, NVIDIA's researchers approached texture compression with AI as their scalpel, carving away the cruft of older compression methods so that only the data that is truly necessary gets stored.
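The "random-access" part of the paper's title means that any single texel can be decoded on demand, without decompressing the rest of the texture first. The toy below is a minimal sketch of that idea only. It assumes a compact stored representation (a small grid of latent vectors) and a tiny neural network that turns one latent into output channels. Every name, size, and weight here is hypothetical and randomly generated, and this is not a description of NVIDIA's actual architecture.

```python
import random

# Hypothetical toy decoder: a low-resolution grid of latent vectors plus a tiny MLP.
# All sizes and weights are made up for illustration; they are not NVIDIA's.
GRID_W, GRID_H = 8, 8   # low-resolution latent grid
LATENT_DIM = 4          # features stored per grid cell
HIDDEN = 8              # hidden layer width
OUT_CHANNELS = 3        # e.g. RGB albedo; a full material would have more channels

rng = random.Random(0)  # fixed seed so the toy is reproducible
latents = [[[rng.uniform(-1, 1) for _ in range(LATENT_DIM)] for _ in range(GRID_W)]
           for _ in range(GRID_H)]
W1 = [[rng.uniform(-1, 1) for _ in range(LATENT_DIM)] for _ in range(HIDDEN)]
W2 = [[rng.uniform(-1, 1) for _ in range(HIDDEN)] for _ in range(OUT_CHANNELS)]

def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def decode_texel(u: float, v: float) -> list:
    """Decode one texel at UV (u, v) in [0, 1] without touching the rest of the texture."""
    # Nearest-cell lookup keeps the toy short; a real decoder would likely interpolate.
    x = min(int(u * GRID_W), GRID_W - 1)
    y = min(int(v * GRID_H), GRID_H - 1)
    z = latents[y][x]
    h = [max(0.0, a) for a in matvec(W1, z)]  # hidden layer with ReLU activation
    return matvec(W2, h)                      # linear output layer: one value per channel

print(decode_texel(0.3, 0.7))
```

The key property is that the cost of `decode_texel` depends only on the network size, not on the texture's resolution. That is what lets a shader fetch exactly the texels it needs.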

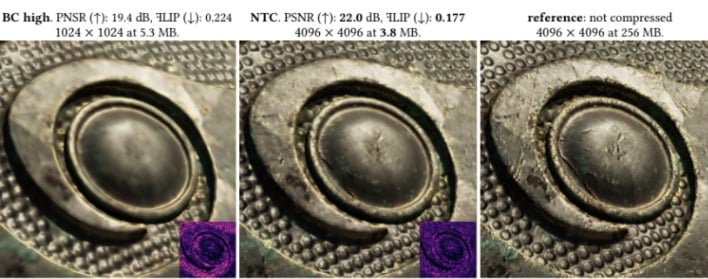

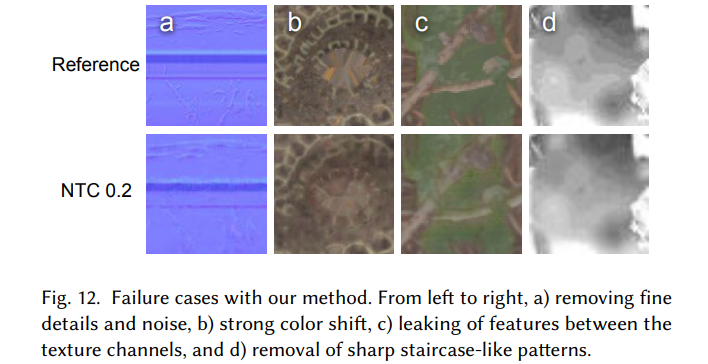

One of the major problems with neural image upscalers is that while their output frequently has high perceptual quality (in other words, it "looks good"), it is often quite far from the original image in terms of objective detail. This "objective distortion" was a major concern for the authors, and minimizing it so that the final output image stays very close to the source was a priority.
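Objective distortion is usually measured by comparing decoded values directly against the source. Peak signal-to-noise ratio (PSNR) is one common metric for this, though the article doesn't say which metrics the authors relied on. The sketch below assumes channel values normalized to [0, 1], and the sample data is invented. It shows how an output that strays further from the source scores lower, however plausible it might look.

```python
import math

def mse(reference, decoded):
    """Mean squared error between two equal-length sequences of channel values."""
    if len(reference) != len(decoded):
        raise ValueError("inputs must have the same length")
    return sum((r - d) ** 2 for r, d in zip(reference, decoded)) / len(reference)

def psnr(reference, decoded, peak=1.0):
    """Peak signal-to-noise ratio in dB; higher means closer to the source."""
    err = mse(reference, decoded)
    if err == 0:
        return math.inf  # identical signals
    return 10 * math.log10(peak * peak / err)

source = [0.2, 0.4, 0.6, 0.8]
faithful = [0.21, 0.39, 0.61, 0.79]  # small objective error on every value
invented = [0.3, 0.3, 0.7, 0.7]      # smooth-looking, but further from the source
print(psnr(source, faithful), psnr(source, invented))  # about 40 dB vs. 20 dB
```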

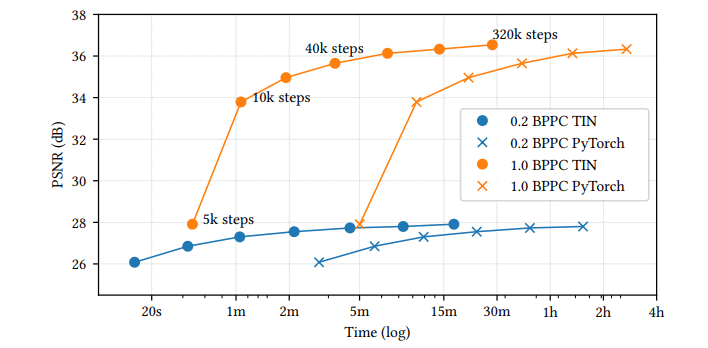

The researchers do admit that the method carries a small performance cost, but they don't go into much detail beyond saying that it's more expensive than traditional texture filtering. They do say it's fast enough for games, at least on GPUs with dedicated matrix-math hardware (such as NVIDIA's Tensor Cores). They also note that it probably won't have much impact on overall game performance, because the neural decoding can run in parallel with other work the GPU is doing.
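Why would matrix hardware matter? The article doesn't spell this out, but one general reason is sketched below. Each layer of a neural decoder is a matrix-vector product per texel. Stacking many texels' inputs side by side turns the same work into a single matrix-matrix product, which is exactly the operation dedicated matrix units accelerate. The layer weights and texel inputs below are made-up values, and the helper names are hypothetical.

```python
def matvec(m, v):
    """Matrix (list of rows) times vector: one texel through one layer."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def matmul(a, b):
    """Matrix-matrix product: a is rows x k, b is k x cols."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

W = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]                          # hypothetical 3x2 layer weights
texel_inputs = [[1.0, 0.0], [0.5, -1.0], [2.0, 2.0], [0.0, 1.0]]  # 4 texels, 2 features each

one_by_one = [matvec(W, x) for x in texel_inputs]        # 4 separate matrix-vector products
batched = transpose(matmul(W, transpose(texel_inputs)))  # 1 matrix-matrix product
print(one_by_one == batched)
```

Both paths compute identical results. The batched form simply gives the hardware one large, regular operation to run instead of many small ones.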

There are some limitations to the format, of course. The biggest is that the texture data must be authored very carefully, because alignment problems that might go unnoticed with traditional compression will quickly degrade the quality of the neural compression. You also can't use traditional texture filtering with this method, and that includes anisotropic filtering.
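The article doesn't explain why traditional filtering is ruled out. One general reason is that filtering and decoding don't commute when the decoder is nonlinear. The sketch below assumes a hypothetical one-channel "decoder" (a ReLU followed by a scale), standing in for a neural network. Blending two stored values before decoding gives a different answer from decoding each texel and then blending. Correct filtering therefore has to decode every filter tap first, which gets expensive with anisotropic filtering's many taps.

```python
def decode(latent: float) -> float:
    """Hypothetical nonlinear decoder: ReLU followed by a scale factor."""
    return 2.0 * max(0.0, latent)

neighbors = [-1.0, 1.0]  # stored values of two adjacent texels (made up)

# Blend the stored values first, then decode (what filtering the raw data would do).
filter_then_decode = decode(sum(neighbors) / len(neighbors))
# Decode each texel, then blend the results (what the image actually needs).
decode_then_filter = sum(decode(z) for z in neighbors) / len(neighbors)

print(filter_then_decode, decode_then_filter)  # 0.0 1.0
```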

It's still early days for Neural Texture Compression, and the authors didn't include any examples of what it looks like inside a complete 3D scene, so unfortunately we don't yet know whether this method is truly practical for games. Still, it has the potential to drastically improve the look of games as video memory demands keep growing.