ARM Details Project Trillium Machine Learning Processor To Drive AI To the Edge

ARM Project Trillium NPU Details (Continued)

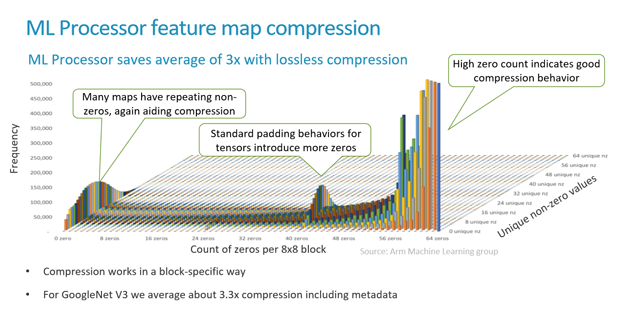

Arm’s architecture losslessly compresses feature maps by exploiting a common property of their data: zeros occur frequently, so the hardware masks out the repeated zeros within each 8x8 block. Arm claims this can yield compression ratios of around 3x. SRAM, which stores partial workloads and intermediate data, consumes a sizeable share of die area and power budget, so any opportunity to reduce the required SRAM capacity is welcome. For reference, Arm is aiming for approximately 1MB of SRAM spread across 16 compute engines.
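To make the zero-masking idea concrete, here is a minimal Python sketch of one way such a scheme could work: each 8x8 block of int8 activations is stored as a 64-bit bitmap marking the nonzero positions, followed by only the nonzero values. The bitmap layout, the int8 assumption, and the function names (`compress_block`, `decompress_block`, `compression_ratio`) are illustrative assumptions; Arm has not published its actual encoding.

```python
from typing import List, Tuple

BLOCK = 8  # block edge length; the article describes 8x8 blocks


def compress_block(block: List[List[int]]) -> Tuple[int, List[int]]:
    """Encode an 8x8 block as a 64-bit zero mask plus its nonzero values.

    Bit i of the mask is set when element i (row-major order) is nonzero.
    """
    flat = [v for row in block for v in row]
    mask = 0
    values: List[int] = []
    for i, v in enumerate(flat):
        if v != 0:
            mask |= 1 << i
            values.append(v)
    return mask, values


def decompress_block(mask: int, values: List[int]) -> List[List[int]]:
    """Rebuild the original block exactly (lossless) from mask and values."""
    it = iter(values)
    flat = [next(it) if (mask >> i) & 1 else 0 for i in range(BLOCK * BLOCK)]
    return [flat[r * BLOCK:(r + 1) * BLOCK] for r in range(BLOCK)]


def compression_ratio(block: List[List[int]]) -> float:
    """Raw int8 size (64 bytes) divided by mask (8 bytes) + nonzero bytes."""
    _, values = compress_block(block)
    return (BLOCK * BLOCK) / (BLOCK * BLOCK // 8 + len(values))


# Example: a block whose first two rows are nonzero (16 of 64 values).
example = [[1] * BLOCK if r < 2 else [0] * BLOCK for r in range(BLOCK)]
mask, vals = compress_block(example)
assert decompress_block(mask, vals) == example  # round trip is lossless
print(round(compression_ratio(example), 2))  # 2.67
```

Note the trade-off in this kind of scheme: a fully dense block would grow from 64 to 72 bytes, so the savings depend entirely on how sparse the feature maps are.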

Arm also compresses and prunes the weights to further reduce bandwidth requirements. Weights are analogous to biological synapses, each connecting two neurons in the neural network. Pruning “weak” synapses lets the hardware skip computations that would not significantly affect the end result. Every bit saved here also reduces the need to reach out to DRAM, further improving latency and power consumption.
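Arm has not detailed its pruning criteria, so the following Python sketch assumes simple magnitude pruning (zeroing any weight whose absolute value falls below a chosen threshold) to show how pruned weights turn into skipped multiply-accumulate (MAC) operations. The threshold value, the example weights, and the function names are hypothetical.

```python
from typing import List, Tuple


def prune_weights(weights: List[float], threshold: float) -> List[float]:
    """Magnitude pruning: zero out 'weak' weights with |w| below threshold."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


def sparse_dot(weights: List[float], activations: List[float]) -> Tuple[float, int]:
    """Dot product that skips the multiply-accumulate for zero weights.

    Returns (result, number of MACs actually performed).
    """
    total = 0.0
    macs = 0
    for w, a in zip(weights, activations):
        if w == 0.0:
            continue  # pruned weight: skip the MAC entirely
        total += w * a
        macs += 1
    return total, macs


weights = [0.8, -0.01, 0.02, -0.6, 0.005, 0.3]
activations = [1.0] * len(weights)
pruned = prune_weights(weights, threshold=0.05)
result, macs = sparse_dot(pruned, activations)
print(f"{macs} of {len(weights)} MACs, result {result:.3f}")  # 3 of 6 MACs, result 0.500
```

Here half the MACs disappear while the output moves only from 0.515 (unpruned) to 0.500, which is the kind of "insignificant impact" trade-off pruning relies on.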

Finally, the Programmable Layer Engine (PLE) keeps the architecture flexible as the field of machine learning evolves. The PLE lets the ML processor support new operators as they emerge, using a minimal instruction set of its own to keep complexity down. It processes results from the MAC engine before the data is written back out to SRAM.
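The dataflow described above (a fixed-function MAC stage whose results pass through a programmable post-processing step before write-back) can be sketched in Python as follows. The operator registry, the ReLU/shift/clamp operators, and every name here are hypothetical illustrations; Arm has not published the PLE's instruction set.

```python
from typing import Callable, Dict, List

# Hypothetical operator table standing in for the PLE's programmability:
# new operators can be registered without changing the MAC stage.
Operator = Callable[[int], int]
PLE_OPS: Dict[str, Operator] = {}


def register_op(name: str, fn: Operator) -> None:
    PLE_OPS[name] = fn


def mac_engine(weights: List[int], activations: List[int]) -> int:
    """Fixed-function stage: integer multiply-accumulate into a wide accumulator."""
    return sum(w * a for w, a in zip(weights, activations))


def ple_postprocess(acc: int, ops: List[str]) -> int:
    """Programmable stage: apply each registered operator in order."""
    for name in ops:
        acc = PLE_OPS[name](acc)
    return acc


def clamp_int8(x: int) -> int:
    return max(-128, min(127, x))


register_op("relu", lambda x: max(0, x))
register_op("shift4", lambda x: x >> 4)  # crude requantization: divide by 2**4
register_op("clamp_int8", clamp_int8)

sram: List[int] = []  # stand-in for on-chip SRAM write-back
acc = mac_engine([3, -2, 5], [40, 10, 20])  # 120 - 20 + 100 = 200
sram.append(ple_postprocess(acc, ["relu", "shift4", "clamp_int8"]))
print(sram)  # [12]
```

Keeping the operator list as data rather than hard-wiring it mirrors the motivation given for the PLE: supporting a new layer type means adding an operator, not redesigning the MAC datapath.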

Altogether, Arm touts its new ML processor as capable of 4.6 TOPS (trillion operations per second) in real-world use cases, with an impressive efficiency of around 3 TOPS/W. Arm has stated it is targeting both 16nm and 7nm fabrication nodes, though it is not yet disclosing expected die area. The ML processor is, however, expected to be quite small relative to Arm's own CPU and GPU designs.
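Taking the two quoted figures at face value, and assuming they describe the same operating point (Arm has not said so explicitly), they imply a power draw of roughly 1.5 W and an energy cost of about a third of a picojoule per operation:

$$
P \approx \frac{4.6\ \text{TOPS}}{3\ \text{TOPS/W}} \approx 1.5\ \text{W},
\qquad
E_{\text{op}} = \frac{1}{3 \times 10^{12}\ \text{ops/J}} \approx 0.33\ \text{pJ}
$$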

Arm also unifies workloads across the NPU, CPUs, and GPUs with a common software library. At its core is “Arm NN”, which sits between popular third-party neural network frameworks and APIs (such as TensorFlow, Caffe, and Android NNAPI) and the different processor types. Code can be written generically and run on whatever processing unit is available. For instance, a budget-oriented device with a traditional CPU and GPU setup can run the same NN code as a high-end device with a dedicated ML processor; it just does so more slowly. Arm also supports integrating third-party IP into the stack as necessary.
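The "write once, run on whatever is available" model amounts to trying a preference-ordered list of backends and falling back when one is absent. The Python sketch below illustrates that pattern only; the backend names, the kernel table, and `run_on_first_available` are hypothetical and do not reflect Arm NN's actual API. Every backend runs the same reference kernel, standing in for the same network executing on different hardware.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# A "kernel" maps an input tensor (here just a flat list) to an output.
Kernel = Callable[[Sequence[float]], List[float]]


def relu_reference(x: Sequence[float]) -> List[float]:
    """Stand-in for a network layer; every backend computes the same result."""
    return [max(0.0, v) for v in x]


def run_on_first_available(
    preferences: List[str],
    available: Dict[str, Kernel],
    inputs: Sequence[float],
) -> Tuple[str, List[float]]:
    """Run on the first preferred backend the device actually has."""
    for backend in preferences:
        if backend in available:
            return backend, available[backend](inputs)
    raise RuntimeError("no supported backend on this device")


PREFERENCES = ["npu", "gpu", "cpu"]  # hypothetical backend names
budget_device = {"gpu": relu_reference, "cpu": relu_reference}
high_end_device = {"npu": relu_reference, **budget_device}

print(run_on_first_available(PREFERENCES, budget_device, [-1.0, 2.0]))
# ('gpu', [0.0, 2.0])
print(run_on_first_available(PREFERENCES, high_end_device, [-1.0, 2.0]))
# ('npu', [0.0, 2.0])
```

Both devices return identical results from identical calling code; only the backend that does the work (and, in practice, the speed) differs.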

This heterogeneous platform also lets developers make efficiency-conscious decisions. A device may have an ML processor on board, but for a brief ML workload (e.g. analyzing a single frame) it may make more sense to run the work on the already-powered CPU rather than power up the NPU.
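That trade-off can be framed as a simple energy comparison: an idle NPU carries a fixed wake-up cost, while an already-powered CPU does not but spends more energy per operation. The Python sketch below uses made-up energy figures (`wake_energy_mj`, `energy_per_gop_mj`) purely for illustration; real values depend on the SoC and are not given in the article.

```python
from dataclasses import dataclass


@dataclass
class Unit:
    name: str
    wake_energy_mj: float  # one-time cost to power up (0 if already on)
    energy_per_gop_mj: float  # energy per billion operations once running


def energy_mj(unit: Unit, gops: float) -> float:
    """Total energy to run a workload of `gops` billion operations."""
    return unit.wake_energy_mj + unit.energy_per_gop_mj * gops


def pick_unit(gops: float, cpu: Unit, npu: Unit) -> str:
    """Choose whichever unit finishes the workload on less energy."""
    return cpu.name if energy_mj(cpu, gops) <= energy_mj(npu, gops) else npu.name


def break_even_gops(cpu: Unit, npu: Unit) -> float:
    """Workload size at which both choices cost the same energy."""
    return (npu.wake_energy_mj - cpu.wake_energy_mj) / (
        cpu.energy_per_gop_mj - npu.energy_per_gop_mj
    )


# Hypothetical figures: the CPU is already awake but less efficient.
cpu = Unit("cpu", wake_energy_mj=0.0, energy_per_gop_mj=5.0)
npu = Unit("npu", wake_energy_mj=20.0, energy_per_gop_mj=0.5)

print(pick_unit(1.0, cpu, npu))    # cpu  (single frame: 5 mJ vs 20.5 mJ)
print(pick_unit(100.0, cpu, npu))  # npu  (sustained: 500 mJ vs 70 mJ)
print(round(break_even_gops(cpu, npu), 2))  # 4.44
```

Below the break-even point the NPU's wake-up cost dominates, which is exactly the "single frame" case described above; for sustained workloads its lower per-operation energy wins.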

Arm NN is available now for developers targeting Android and embedded Linux, with support for Cortex-A CPUs and Mali GPUs, and will support Arm’s ML processor as soon as the processor is released.