AMD Unveils 3rd Gen EPYC Milan-X Data Center CPUs With 3D V-Cache For A Huge Performance Uplift

Following up on its launch of the Ryzen 7 5800X3D in the consumer sector, AMD today unveiled its 3rd Gen EPYC "Milan-X" processors for the data center, which use similar stacked 3D V-Cache and chip packaging technology. Compared to its non-stacked 3rd Gen EPYC processors, AMD is claiming a performance uplift of up to 66 percent across a variety of targeted technical computing workloads, achieved by tripling the L3 cache available on its EPYC lineup.

The keyword there is "targeted." AMD is upfront that Milan-X EPYC CPUs were designed to benefit specialized workloads, so they won't be the best solution for every application. The best fits are workloads that are sensitive to L3 cache size. More specifically, AMD says workloads with high L3 cache capacity misses (data sets that are often too large for L3 cache) and those with high L3 cache conflict misses (data pulled into cache with low associativity) will see the biggest performance gains from all the extra cache.

On the other hand, AMD's non-stacked 3rd Gen EPYC processors are better choices for workloads with L3 cache miss rates near zero, high L3 cache coherency misses (data that is highly shared between cores), and CPU intensive tasks that may only stream data or use it once, rather than operating on it iteratively, AMD notes.

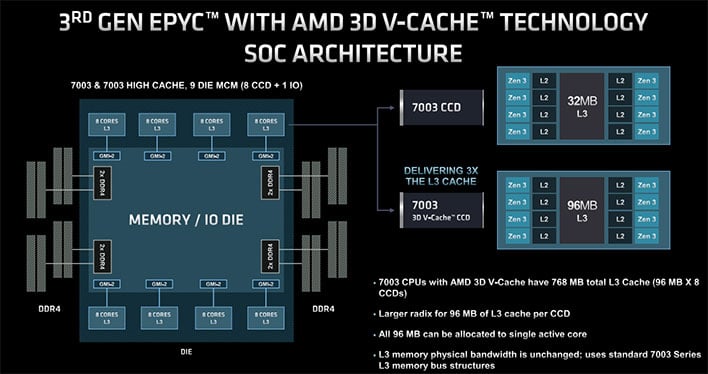

Shown above is a logical overview of the Milan-X SoC topology, with a central I/O die and eight surrounding core chiplet dies (CCDs). To highlight the differences between Milan and Milan-X, there are two expanded views in the upper right. The top one shows a Milan CCD with eight Zen 3 CPU cores, each with its own L2 cache, and a 32MB shared L3 cache module. Underneath, we see a Milan-X CCD with triple the amount of L3 cache.

One thing to note is that, with this shared cache arrangement, any of the cores can tap into however much it needs. So for example all eight cores could divvy it up and use 12MB apiece, or a single core could utilize the entire 96MB.

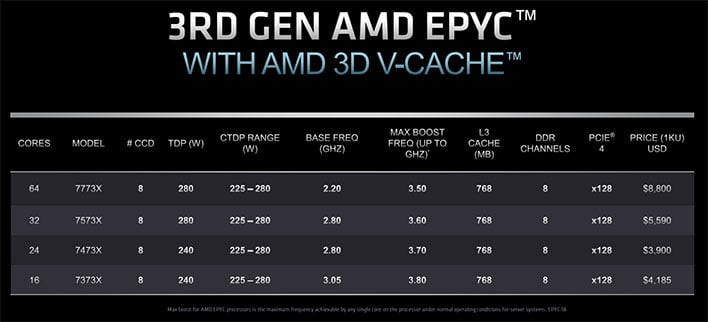

AMD 3rd Gen EPYC Milan-X Processor Models And Specifications

AMD is introducing four new 3rd Gen EPYC processor models with stacked 3D V-Cache. Each one sports eight CCDs containing 96MB of L3 cache apiece, for a total of 768MB. AMD is keeping the lineup easy to parse by simply attaching an "X" to the end of the model designation to denote that it features 3D V-Cache.

At the top of the stack is the EPYC 7773X. This is a 64-core/128-thread CPU with a 2.2GHz base clock and 3.5GHz max boost clock. The other three options include the EPYC 7573X (32-core/64-thread, 2.8GHz to 3.6GHz), EPYC 7473X (24-core/48-thread, 2.8GHz to 3.7GHz), and EPYC 7373X (16-core/32-thread, 3.05GHz to 3.8GHz). All of these support 8-channel memory and 128 PCIe 4.0 lanes. So in keeping things simple, it boils down to a choice of how many cores and threads a customer needs, with mostly nominal clock speed differences across the board (specifically the boost clocks).
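
Because every Milan-X model carries the same 768MB of L3, the nominal amount of cache per core changes a lot from one SKU to the next. The short Python sketch below works that out from the figures quoted above. The even per-core split is only an illustrative assumption (the cache is shared, so real usage depends on the workload), and the names `MILAN_X_CORES` and `l3_per_core_mb` are made up for this example.

```python
# L3 figures come from the article; the even per-core split is an
# illustrative assumption, since the cache is shared within each CCD.
L3_PER_CCD_MB = 96
CCDS_PER_SOCKET = 8
TOTAL_L3_MB = L3_PER_CCD_MB * CCDS_PER_SOCKET  # 768MB per processor

# Core counts for the four Milan-X models listed in the article.
MILAN_X_CORES = {
    "EPYC 7773X": 64,
    "EPYC 7573X": 32,
    "EPYC 7473X": 24,
    "EPYC 7373X": 16,
}


def l3_per_core_mb(cores: int, total_l3_mb: int = TOTAL_L3_MB) -> float:
    """Nominal L3 per core if the total were divided evenly."""
    return total_l3_mb / cores


for model, cores in MILAN_X_CORES.items():
    print(f"{model}: {l3_per_core_mb(cores):.0f}MB of L3 per core")
```

Under that assumption, the 64-core part works out to 12MB per core, while the 16-core part has 48MB per core to play with.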

Furthermore, AMD says these chips are engineered to work within the same power and thermal design specifications as existing Milan high-frequency parts. This means a data center customer has the flexibility to choose a high-frequency Milan CPU, or a Milan-X version with a whole lot of extra L3 cache, within the same platform and with the same number of memory and I/O channels.

As for pricing, customers are looking at a 20 percent premium on average for Milan-X, compared to the equivalent non-X model.

Targeted Workloads And Performance Expectations

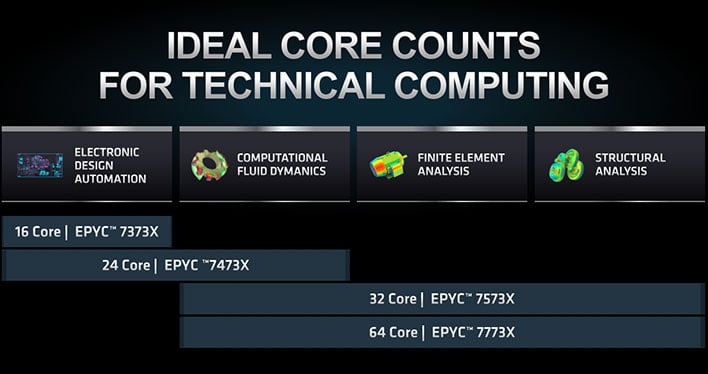

The decision to settle on four Milan-X SKUs was not an arbitrary one. AMD says it designed the lineup with explicit targets for each model, with each having a particular workload affinity. On the 'lower' end (if you want to call it that), it starts with electronic design automation (EDA) workloads, which are typically lighter-threaded. AMD says these types of applications benefit from a large cache per core ratio, and thrive on memory bandwidth. As such, the 16-core EPYC 7373X and 24-core EPYC 7473X are the best candidates, with a caveat.

"While both 16 and 24-core Milan-X parts offer significant benefits to EDA customers, the structure of their software license agreement may be the deciding factor in which model they choose," AMD says.

Meanwhile, the 32-core EPYC 7573X and 64-core EPYC 7773X are particularly suited for computational fluid dynamics (CFD), finite element analysis (FEA), and structural analysis (SA) workloads. These are all highly threaded and scale well when more cores are thrown at the task. Likewise, there are licensing model considerations in these areas that may dictate which SKU is the ideal choice.

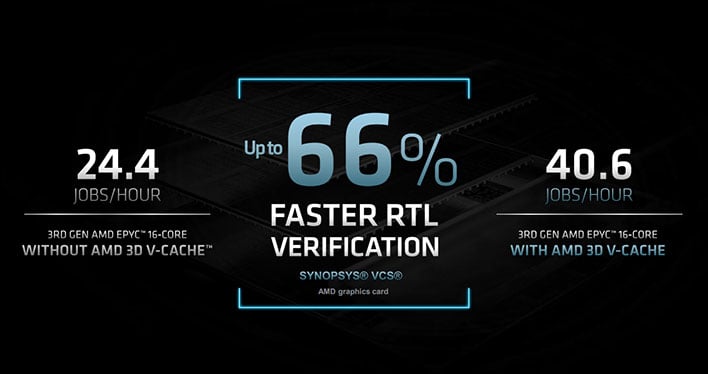

Licensing model considerations aside, AMD is touting huge performance gains in tasks that are suited to take advantage of bigger bundles of L3 cache. From AMD's vantage point, EDA is the core of the semiconductor industry, and RTL simulation accounts for most of the work in digital circuit simulation. And it's precisely here where AMD says a 16-core Milan-X processor can accelerate workloads by 66 percent over the fastest 16-core Milan part.

"To put that into context, the expected performance uplift for EDA tools is 8-12 percent per generation," AMD says.

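One rough way to read that comparison is to ask how many ordinary generations it would take to reach a 66 percent gain. The sketch below assumes generational gains compound multiplicatively, which is our simplification rather than anything AMD has stated, and `equivalent_generations` is a hypothetical helper name.

```python
import math


def equivalent_generations(uplift: float, per_gen_gain: float) -> float:
    """Number of compounding generations needed to reach `uplift`.

    Assumes each generation multiplies performance by (1 + per_gen_gain);
    this compounding model is an assumption made for illustration.
    """
    return math.log(1.0 + uplift) / math.log(1.0 + per_gen_gain)


MILAN_X_UPLIFT = 0.66  # AMD's claimed best-case EDA uplift

for per_gen in (0.08, 0.12):  # AMD's quoted per-generation range
    gens = equivalent_generations(MILAN_X_UPLIFT, per_gen)
    print(f"{per_gen:.0%} per generation: about {gens:.1f} generations")
```

By this rough measure, the claimed uplift is worth somewhere between four and seven typical generations of EDA performance gains.
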
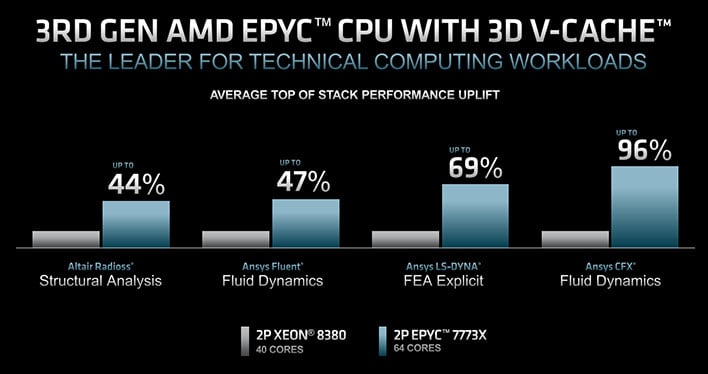

Of course, AMD is not solely interested in comparing Milan-X to Milan. There's also the competition to consider, namely Intel and its Xeon processor family.

Comparing top-of-the-stack solutions, AMD claims a server configured with dual EPYC 7773X processors offers up to a 96 percent performance advantage over a server with dual Xeon 8380 processors. That's in large part because the EPYC server (in this example) has significantly more cores and threads at its disposal than the competing Xeon setup. In other targeted applications, AMD touts a 44 percent to 69 percent performance advantage in this same comparison.

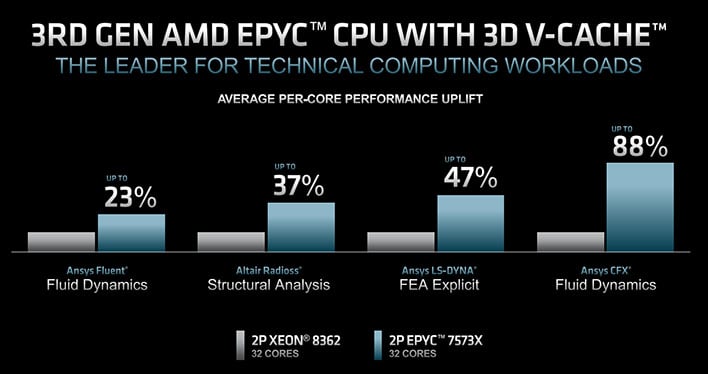

More cores and threads equating to better performance is not exactly shocking, but what happens when evening the playing field? According to AMD's own benchmarks, a 2P EPYC 7573X system (32 cores per chip) versus a 2P Xeon 8362 system (also 32 cores per chip) still results in a significant performance uplift for Milan-X.

In a core-to-core showdown, AMD is claiming customers will see Milan-X outpace the competition by up to 23 percent in FD workloads, 37 percent in SA workloads, 47 percent in FEA workloads, and 88 percent in FD workloads.

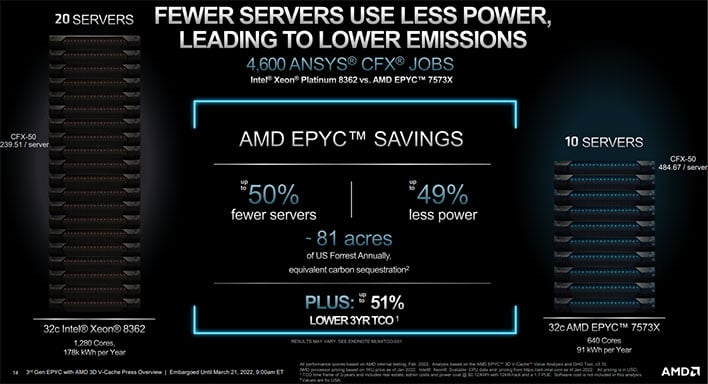

Outside of the raw performance metrics AMD is claiming, the company says organizations can cut costs, power consumption, and emissions by employing fewer Milan-X servers than would be required with Intel Xeon solutions.

The claim here is that, in a best-case scenario, it would take half as many Milan-X servers as Xeon servers to get the same work done. Not only does this translate into a cost advantage in terms of hardware and power, but organizations could also potentially spend a lot less on software licensing, lowering total cost of ownership (TCO).
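
To see how a per-server speedup turns into a smaller fleet, here is a minimal Python sketch. It assumes total throughput scales linearly with server count, and the 20-server baseline is a made-up number for illustration. Tying AMD's "up to 96 percent" figure to the half-the-servers claim is our reading, not a derivation AMD has published.

```python
import math


def servers_needed(baseline_servers: int, relative_throughput: float) -> int:
    """Servers required to match the baseline fleet's total throughput.

    `relative_throughput` is the per-server speedup over the baseline
    (2.0 means each new server does twice the work). Assumes throughput
    scales linearly with server count, which is a simplification.
    """
    if relative_throughput <= 0:
        raise ValueError("relative_throughput must be positive")
    return math.ceil(baseline_servers / relative_throughput)


BASELINE_XEON_SERVERS = 20  # hypothetical fleet size, not an AMD figure

for label, speedup in (("2x per server", 2.0), ("1.96x per server", 1.96)):
    count = servers_needed(BASELINE_XEON_SERVERS, speedup)
    print(f"{label}: {count} servers instead of {BASELINE_XEON_SERVERS}")
```

The rounding up matters: anything short of a full 2x speedup leaves the replacement fleet slightly larger than half the original.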

Naturally, we'll have to wait for independent benchmarks to see if all these claims hold true. It shouldn't take long, however, as Milan-X is already shipping to customers such as Altair, Cisco, Dell, Lenovo, Microsoft (Azure), Supermicro, and others.