Intel 4th Gen Xeon Scalable Sapphire Rapids Performance Review

Intel 4th Gen Xeon Scalable: Sapphire Rapids Tested In A Variety Of Data Center Workloads

Intel 4th Generation Xeon Processors (Sapphire Rapids): Intel’s tiled chiplet approach with specialized accelerators could open the door for some truly custom server recipes for individual customer demands.


Intel officially launched its 4th Gen Xeon Scalable CPUs, codenamed Sapphire Rapids, on Tuesday. These innovative datacenter processors are destined to face off against AMD’s fourth-generation EPYC “Genoa” CPUs. AMD EPYC server processors have been clawing market share from Intel as of late, and this go-round the rivalry has reached something of a fever pitch. Today, we are putting these platforms head-to-head to see where the strengths and weaknesses lie for each.

As we discussed during the Genoa launch, these two chip juggernauts have embraced differing strategies, making comparisons somewhat murkier. AMD has focused on delivering maximum general compute performance to satisfy a wide range of customers and applications. EPYC Genoa processors can supply up to 96 CPU cores per socket and flex 12-channel DDR5 memory and PCIe Gen 5 interfaces, to name a few other spec-sheet-topping features. In exchange, the microarchitecture offers relatively little in the way of “specialized” silicon, though it has brought support for specialized instructions like AVX-512.

In contrast, Intel’s 4th Gen Sapphire Rapids Xeon CPUs sound comparatively scaled back. These processors top out at 60 CPU cores per socket. While these chips also support DDR5 memory and PCIe Gen 5, DDR5 support is limited to 8 channels. The repercussion is lower peak memory bandwidth than a 12-channel design can offer, though we must say 8 channels still provide a healthy amount for most workloads.
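To put the channel-count gap in rough numbers, here is a back-of-the-envelope sketch of theoretical peak memory bandwidth per socket. It assumes DDR5-4800 on both platforms and a 64-bit (8-byte) data path per channel. Neither figure is stated above, and the function name is ours, so treat the result as an illustrative ceiling rather than a measured value.

```python
# Theoretical peak DRAM bandwidth per socket: channels x transfer rate x bytes per transfer.
# Assumptions (not from the article): DDR5-4800 on both platforms, 8 bytes per transfer per channel.
BYTES_PER_TRANSFER = 8  # 64-bit data path per DDR5 channel


def peak_bandwidth_gbs(channels, mega_transfers_per_sec):
    """Return theoretical peak bandwidth in GB/s (decimal gigabytes)."""
    return channels * mega_transfers_per_sec * BYTES_PER_TRANSFER / 1000


if __name__ == "__main__":
    for label, channels in (("Sapphire Rapids (8-channel)", 8), ("Genoa (12-channel)", 12)):
        print(f"{label}: {peak_bandwidth_gbs(channels, 4800):.1f} GB/s")
```

Under those assumptions, the 12-channel configuration has 1.5x the theoretical ceiling of the 8-channel one. Real-world throughput will land below either figure and depends on DIMM population and memory speed.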

Intel is instead bringing a bit of special sauce in the form of workload accelerators. Intel reckons that by including an array of purpose-built silicon, it can not only complete certain tasks more quickly and power efficiently, but also free up CPU cores to handle the remaining general-purpose work. This generation marks something of an inflection point in Intel's design strategy, and we can expect to see the lineup of accelerators expand if the bet pays off.

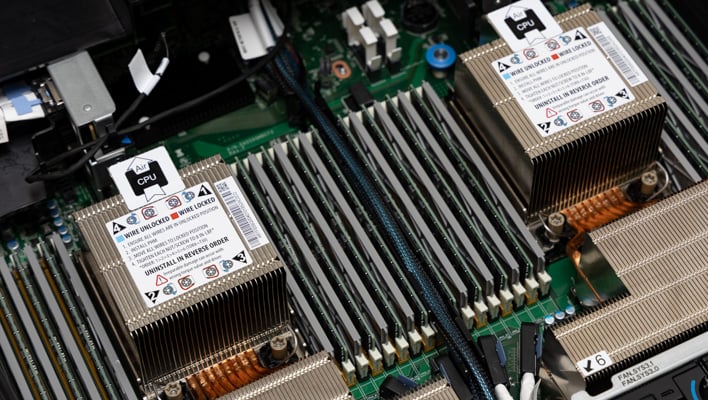

For our in-house testing, Intel sent over a 2U reference server platform, along with three sets of chips. These include a pair of the processors employed in our accelerator workload preview back in October of last year, which have been revealed to be the Xeon Platinum 8490H with a full 60 cores apiece. In addition, we have a pair of the 56-core Xeon Platinum 8480+ chips and a pair of the 32-core Xeon Platinum 8462Y+, for a healthy amount of cores and threads overall.

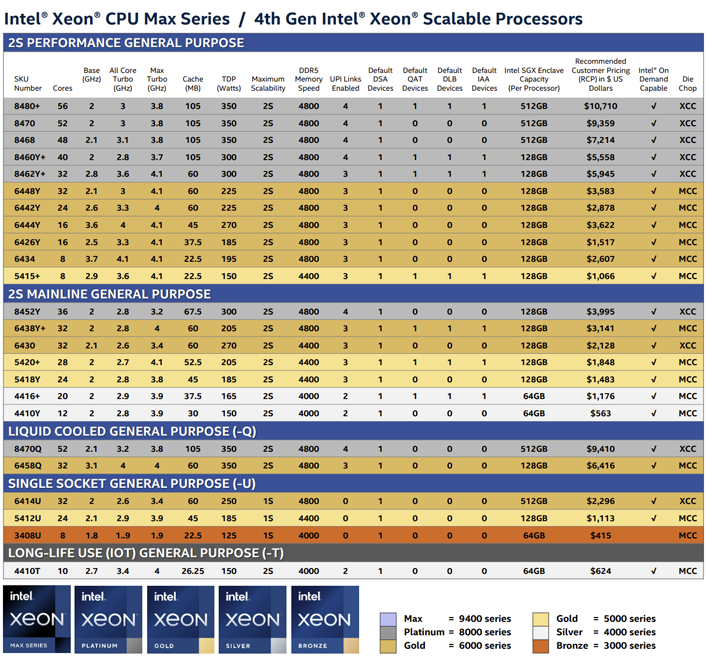

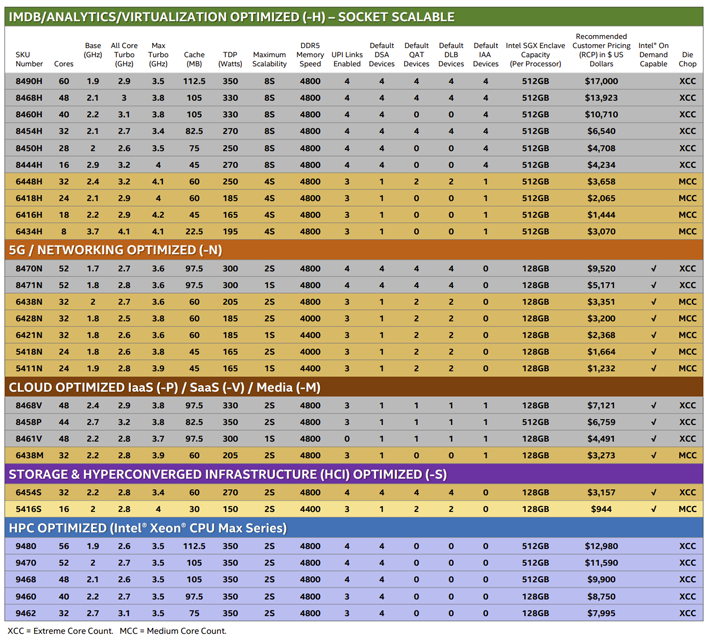

Intel 4th Gen Xeon Scalable Processors: Meet The Family, And It's A Big One

These processors differ by more than just core counts. Clock frequencies, cache, and even accelerator counts vary as well. In that last respect, the 8480+ and 8462Y+ are most similar. The plus (+) in these SKUs indicates that they arrive with a single accelerator of each type enabled. The 8462Y+ has less total cache at 60MB to the 8480+’s 105MB, but the total cache per core is identical. With fewer cores to feed, the 8462Y+ does get to push higher clocks, with a higher base clock (2.8GHz vs 2.0GHz) and a higher turbo clock (4.1GHz vs 3.8GHz). Both of these are classified as “2S Performance General Purpose” processors by Intel.

The 60-core 8490H, by contrast, stands to be more capable. It ships fully enabled with four of each accelerator (one per tile), and expands total cache to 112.5MB, keeping the cache-per-core ratio the same. It is also able to scale up to 8-socket systems, where the other two SKUs are limited to a 2-socket setup. A tradeoff of its power is lower clock speeds, with a base clock of 1.9GHz and max turbo up to 3.5GHz. It also carries the lineup’s highest price at $17,000 a pop, though true pricing of datacenter chips is always a bit hazier than the Recommended Customer Price suggests when these competitors are vying for design wins.
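The cache-per-core claim is easy to sanity check with the core counts and total cache figures quoted above. The following is a minimal sketch; the dictionary layout and function name are our own, not anything from Intel's spec sheets.

```python
# Core counts and total cache (MB) as quoted in the text above.
skus = {
    "Xeon Platinum 8490H": (60, 112.5),
    "Xeon Platinum 8480+": (56, 105.0),
    "Xeon Platinum 8462Y+": (32, 60.0),
}


def cache_per_core(cores, cache_mb):
    """Return total cache divided evenly across cores, in MB per core."""
    return cache_mb / cores


ratios = {name: cache_per_core(cores, mb) for name, (cores, mb) in skus.items()}

if __name__ == "__main__":
    for name, ratio in ratios.items():
        print(f"{name}: {ratio:.3f} MB per core")
```

All three SKUs work out to 1.875MB of cache per core, so the difference in total cache follows directly from core count.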

The real takeaway from this is that Intel’s 4th Gen Xeon lineup offers flexibility with wide-ranging capabilities and features for various types of workloads. Prospective buyers need to carefully consider the intended application and a particular SKU’s performance in that respect. This is particularly true for the specialized Networking, Cloud, and Storage/HCI options which we do not have available for testing here.

Enough with the overview, though. Let’s fire up these systems on the test bench and see how they fare in practice.