God Of War PC Gameplay And Performance Review: Nailed It

God of War: Game Performance And Graphics Benchmarks

HotHardware's God Of War Testing Environment

Hardware Used: AMD Ryzen 7 5800X (3.8 GHz - 4.9 GHz, 8-Core); ASRock X570 Taichi (AMD X570 Chipset); 32GB G.SKILL DDR4-3800; AORUS NVMe Gen4 SSD; Integrated Audio; Integrated Network; EVGA GeForce RTX 2080 SUPER XC ULTRA; NVIDIA GeForce RTX 3070 Ti Founders Edition; AMD Radeon RX 6800 XT

Relevant Software: Windows 10 Pro 21H1; AMD Radeon Software v22.1.1; NVIDIA GeForce Drivers v511.23

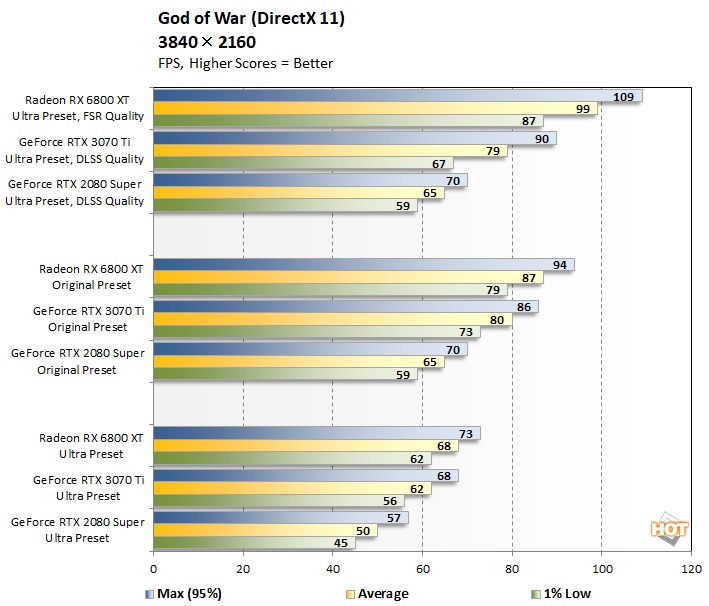

Only the Radeon card is capable of maintaining a framerate over 60 FPS in combat at native 4K with ultra settings, although if you use my recommended settings I'd expect the GeForce RTX 3070 Ti to manage just fine as well. The older Turing card just doesn't have the memory bandwidth for such a high resolution with maxed-out settings, although with a solid G-SYNC display it'd really be just fine. Reducing the game's settings helps all three cards pick up the average over 60 FPS, but the trade-off is pretty dire.

Instead, we'd recommend enabling your choice of resolution scaling, whether you use NVIDIA's DLSS, AMD's FSR, or the built-in temporal resolution scaler. We initially tested all three cards using AMD's FSR solution to ensure performance parity, but upon realizing DLSS has nearly identical performance we switched our GeForce results to DLSS data, as that's more likely to be how most players will enjoy the game. Using Ultra settings with either upscaler turned to "Quality" gives us a pre-upscaling resolution of 2560×1440, and despite losing over half the pixels, it still looks quite good.
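For readers who want to check the arithmetic behind that pre-upscaling resolution, here is a minimal Python sketch. The per-axis scale factors are the ones AMD published for FSR 1.0's quality modes; the function names are our own invention for illustration and are not part of any game or driver API. Only the "Quality" figure is confirmed by our testing above.

```python
# Per-axis scale factors for the FSR 1.0 quality modes, as published by AMD.
FSR_SCALE = {
    "Ultra Quality": 1.3,
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
}


def render_resolution(out_w, out_h, mode):
    """Internal render resolution for a given output resolution and mode."""
    scale = FSR_SCALE[mode]
    return round(out_w / scale), round(out_h / scale)


def pixel_fraction(out_w, out_h, mode):
    """Fraction of the output pixel count that is actually rendered."""
    w, h = render_resolution(out_w, out_h, mode)
    return (w * h) / (out_w * out_h)


w, h = render_resolution(3840, 2160, "Quality")
print(w, h)  # 2560 1440
lost = 1 - pixel_fraction(3840, 2160, "Quality")
print(f"{lost:.1%} fewer pixels rendered")  # 55.6% fewer pixels rendered
```

Because the scale applies to each axis, a 1.5x factor cuts the rendered pixel count to 4/9 of the output, which is where "over half the pixels" comes from.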

The main limiter on performance at 4K UHD resolution is the fixed-function graphics hardware on the GPU; it's a ton of work to shade and fill 8 megapixels. Enabling upscaling (and thus dropping the render resolution) drastically lightens that workload, and so we see the cards spread out a little, mostly stratified by fill-rate and memory bandwidth. The Radeon card is the standout here; playing in 4K with max settings and FSR enabled, it manages an average framerate of nearly 100 FPS. Neither of the other cards turns in a shabby performance, though; all three are more than enjoyable at these settings.


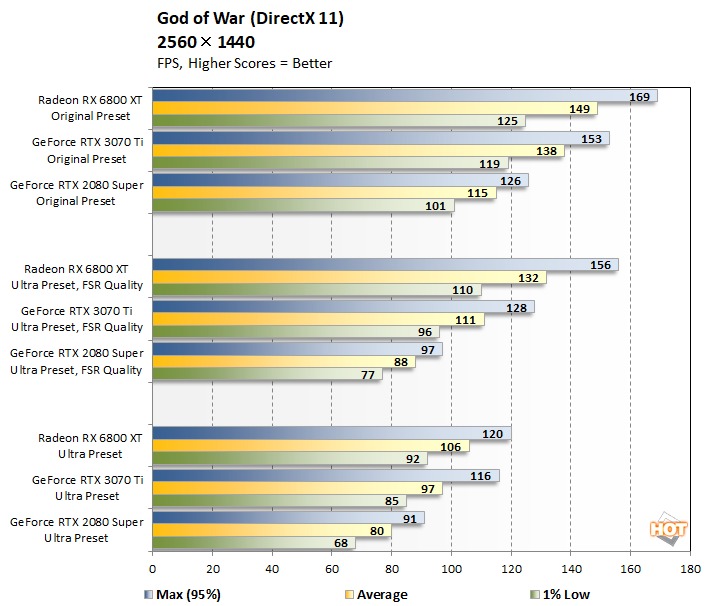

Notably, we see higher performance at 2560×1440 native compared to the results we just looked at using FSR (or DLSS) with a 4K output resolution. As a reminder, those two settings (2560×1440 native and 4K with FSR "Quality") have the same internal render resolution. Some of that is down to the extra work required by the smart upscaling, but we suspect that the game might also be scaling content LODs based on the output resolution.

That's not unusual in modern games, and in subjective testing we couldn't tell the difference, but it's our best explanation for why we see such improved performance playing in the native QHD resolution. All three of these cards are more than capable of maintaining 60 FPS in native QHD with ultra settings, so from this point on the data is only really relevant to PC gamers with high-refresh-rate monitors.


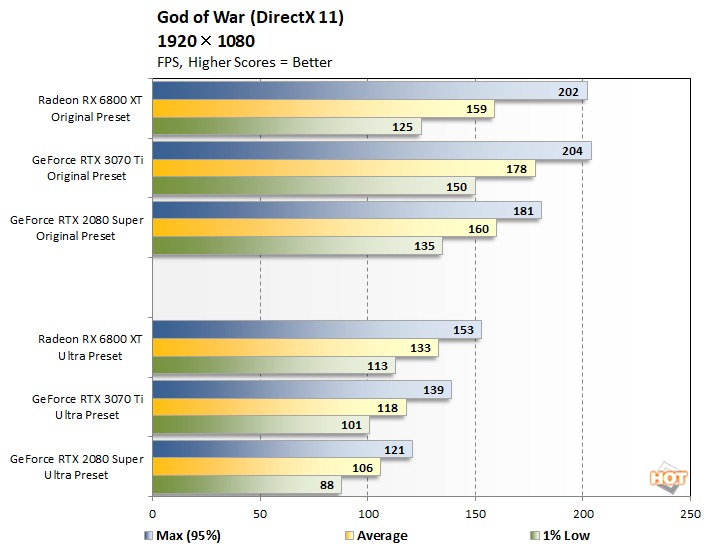

There's no need to employ resolution scaling at this setting, and even with the arguably-excessive Ultra preset, all three graphics cards put up performances that practically mandate a 120-Hz display. Using my recommended settings, I expect that all three cards will maintain a minimum frame rate over 100 FPS.

It would be easy to look at the data for the Original preset tests here and draw some concerning conclusions regarding the Radeon RX 6800 XT. Examining the saved sensor data in CapFrameX, we do see consistently higher CPU usage from the Radeon card, which is what we've come to expect from Radeons in DirectX 11 titles. It's possible that our Radeon card is running into a CPU limitation at these unrealistically low settings, but we think it's worth noting that the 1% low framerate is still over 120 FPS.
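If you want to derive figures like these from your own captures, the sketch below shows one common way to turn a list of frame times into an average framerate and a 1% low. To be clear, this is an assumption-laden illustration: it averages the slowest 1% of frames, whereas CapFrameX offers its own metric definitions that may not match this exactly, and the sample data is made up rather than taken from our testing.

```python
def fps_metrics(frame_times_ms):
    """Return (average FPS, 1% low FPS) for a list of frame times in ms."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    # Average FPS is total frames divided by total elapsed time.
    avg_fps = 1000.0 * len(frame_times_ms) / sum(frame_times_ms)

    # Assumed definition: average the slowest 1% of frames (at least one).
    slowest = sorted(frame_times_ms, reverse=True)
    n = max(1, len(slowest) // 100)
    low_fps = 1000.0 * n / sum(slowest[:n])
    return avg_fps, low_fps


# Made-up capture: 990 frames at 5 ms plus 10 slower frames at 8 ms.
sample = [5.0] * 990 + [8.0] * 10
avg, low = fps_metrics(sample)
print(f"average: {avg:.1f} FPS, 1% low: {low:.1f} FPS")
# average: 198.8 FPS, 1% low: 125.0 FPS
```

A 1% low that stays above your display's refresh rate means even the worst stretches of the capture would not drop below it.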


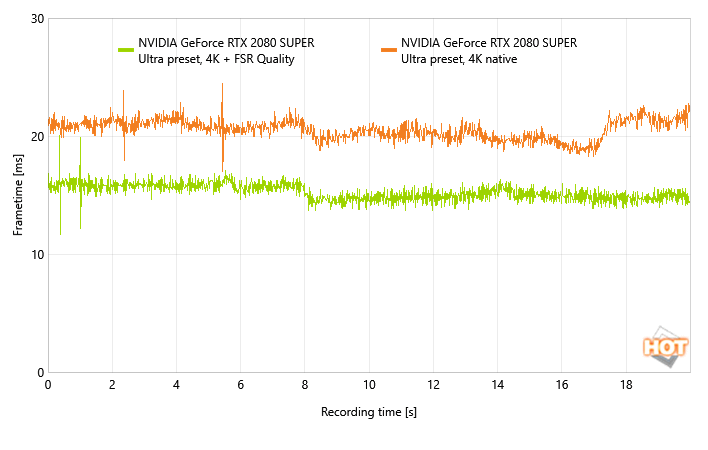

Indeed, God of War on PC is an exceptionally smooth-performing game. Even when performance drops, such as when I was testing the RTX 2080 SUPER in 4K UHD native, it remains fairly consistent. There are occasional very minor hitches here and there, but these generally occur when loading new content or during rapid camera movement and are to be expected. Overall, the title is impressively fluid most of the time.

DLSS Or FSR For God Of War? Both Are Good Options But One Is Superior

A lot of arguments have been made both in favor of and against the idea of comparing NVIDIA's Deep Learning Super Sampling (DLSS) and AMD's FidelityFX Super Resolution (FSR). It's true that they are fundamentally different technologies. DLSS uses the Tensor cores on RTX GPUs to do temporal image upscaling through AI inferencing. This requires fairly deep integration into the game engine, so it can't be easily implemented as a driver-level toggle.

By contrast, FSR is simpler, but that's also arguably its strength. When upscaling, FSR essentially performs an edge-adaptive, Lanczos-like upsample before applying a sharpening pass derived from FidelityFX Contrast Adaptive Sharpening. FSR doesn't know anything about the scene geometry, and it only operates on a single final-pass rendered frame at a time. However, thanks to that approach, it's also drastically easier to implement, and it can work on essentially any graphics card.
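To make the "upsample, then sharpen" idea concrete, here is a toy Python sketch that works on a single row of grayscale pixel values. It is emphatically not AMD's shader code: the Lanczos kernel is the textbook one, the sharpening step is a plain unsharp-style pass standing in for Contrast Adaptive Sharpening, and every function name here is hypothetical.

```python
import math


def lanczos_kernel(x, a=2):
    """Textbook Lanczos window: sinc(x) * sinc(x / a) for |x| < a, else 0."""
    if x == 0:
        return 1.0
    if abs(x) >= a:
        return 0.0
    px = math.pi * x
    return a * math.sin(px) * math.sin(px / a) / (px * px)


def upsample_row(row, out_len, a=2):
    """Resample a 1D row of pixel values to out_len samples."""
    in_len = len(row)
    scale = in_len / out_len
    out = []
    for i in range(out_len):
        # Map the output pixel centre back into input coordinates.
        src = (i + 0.5) * scale - 0.5
        first = math.floor(src) - a + 1
        total = weight_sum = 0.0
        for j in range(first, first + 2 * a):
            w = lanczos_kernel(src - j, a)
            # Clamp to the edge so border pixels reuse the nearest sample.
            total += w * row[min(max(j, 0), in_len - 1)]
            weight_sum += w
        # Normalise so flat regions keep their brightness.
        out.append(total / weight_sum)
    return out


def sharpen_row(row, amount=0.5):
    """Unsharp-style pass; a simple stand-in for CAS, not AMD's algorithm."""
    out = []
    for i, v in enumerate(row):
        left = row[max(i - 1, 0)]
        right = row[min(i + 1, len(row) - 1)]
        blur = (left + v + right) / 3.0
        # Push each pixel away from its local average, then clamp to [0, 1].
        out.append(min(1.0, max(0.0, v + amount * (v - blur))))
    return out


# A hard edge, upscaled by 1.5x per axis as in "Quality" mode.
edge = [0.0, 0.0, 1.0, 1.0]
upscaled = sharpen_row(upsample_row(edge, 6))
print(len(upscaled))  # 6
```

Note that both steps only ever look at the pixels of one finished frame, which is exactly why this kind of spatial upscaler needs no motion vectors or frame history from the engine.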

God of War is one of the few games to ship with support for both technologies, and thus it gives us a lovely chance to compare the two directly. There's not much of a comparison to be had, though, honestly. As I mentioned earlier, the performance of the two techniques is nearly identical, with perhaps a slight edge in FSR's favor. The visual output of the two methods is quite different, though. You can see for yourself in these screenshot cutouts.

DLSS' primary fault in past implementations has been noticeable temporal artifacting, and I was indeed able to pick it out in a couple of scenes with dark geometry scrolling past light backgrounds, but you really have to look for it; it's not something you'll ever notice during gameplay. DLSS in this game looks fantastic and I recommend it without reservation. Some folks have commented that DLSS is not available to them despite possessing a capable GeForce RTX graphics card. At the time of this writing, there is an experimental beta branch on Steam that you can download which resolves this issue.

Don't mistake me though; FSR doesn't look bad at all, and both methods are preferable to the smeary TAA upscale of the regular resolution scaler. DLSS is visibly crisper and sharper, though. To some degree that may be because the specific version of DLSS implemented by Jetpack Interactive in this port performs an aggressive sharpening pass after upscaling. I've seen some players express that they feel it's overly sharpened, giving an almost comic-book-like vibe to the image. I don't feel that way, but I understand how they could.

I also compared FSR on the GeForce RTX 3070 Ti with the output of FSR on the Radeon RX 6800 XT to make sure that it was truly an apples-to-apples comparison. You can see a couple of example images from that testing above, along with a comparison to DLSS and native 4K rendering.

Unfortunately, I wasn't able to match the angle exactly in some shots due to the forced shakycam in cutscenes, but you can still see that regardless of vendor, FSR looks a lot more like simple upscaling than it does AI-enhanced DLSS or native. Again, FSR is not ugly; it's just softer and not as sharp. Some people may even prefer the look. Another curious consequence of the sharpening performed by both DLSS and FSR is that it undoes some of the distant blur applied by the Depth of Field effect. This is clearer in the full-resolution shots, which you can download here from our Google Drive repository.

If FSR is your only choice in God of War, we'd certainly recommend using it, especially if it lets you stay at recommended settings, or if you can't quite get a stable frame rate at your monitor's native resolution (say, when playing on a 4K UHD monitor with a Radeon RX 5700 XT or similar). If you do have the option of using DLSS, though, do so. The infinitesimal visual difference between native and DLSS is well worth the gains in performance.

God Of War On The PC Is Fantastic, And Other Key Take-Aways

God of War is between 20 and 30 hours long for a typical play-through, or around fifty hours if you intend to do and see everything. It has a lot of qualities to recommend it. It's a rare gem: a fully offline game with no DLC to buy. $50 and you get the full package, some thirty-plus hours of single-father feels and Nordic myth mayhem. That in and of itself is almost enough to warrant a recommendation.

Folks who enjoy getting engrossed in a game's setting, who want to become captivated by its narrative and deeply invested in its characters, will absolutely love this game. The voice acting is a top-class affair, with main characters Kratos and Atreus especially being expertly performed. The pacing of the game is pretty good too, at least until the latter half, where it's somewhat under the player's control.

God Of War For PC