NVIDIA GeForce RTX Explored: What You Need To Know About Turing

NVIDIA GeForce RTX Turing Architecture - New Features, DLSS, Ray-Tracing

One of Turing’s main claims to fame is real-time ray tracing. While the GPUs do have capabilities that allow for much faster rendering of ray-traced imagery, keep in mind that what we’ve all seen in pre-release games and demos to date are hybrid rendering techniques. They leverage traditional rasterization alongside real-time ray tracing in some portions of a scene, using NVIDIA’s RTX platform software APIs and SDKs.

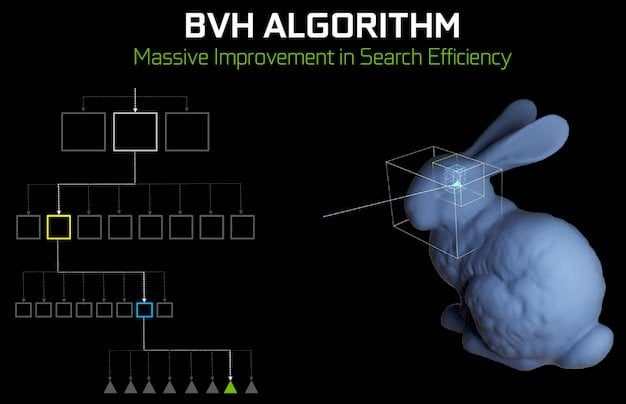

The RT cores accelerate ray tracing in hardware around a well-established acceleration structure. The GeForce RTX series uses Bounding Volume Hierarchies, trees of nested bounding boxes wrapped around scene geometry, to efficiently drill down and trace the path of a ray.

With existing GPUs, using Bounding Volume Hierarchies (or BVH) to emulate in software what the GeForce RTX series can process in hardware is relatively slow. As we’ve mentioned, the GeForce GTX 1080 Ti is 10x slower than an RTX 2080 Ti with a similar ray tracing workload.

The GeForce RTX series, however, accelerates the process in hardware. The RT Core includes two specialized units. The first unit does bounding box tests, and the second unit does ray-triangle intersection tests. A ray probe is launched, and if the ray passes through a particular bounding volume, traversal progresses down the tree to evaluate the smaller volumes inside it; when a triangle is hit, the result is sent down the pipeline to the SMs for shading. In other words, the RT cores in Turing accelerate both the BVH traversal and the ray-triangle intersection evaluation. While the RT cores are doing their thing, the SMs are also available to perform other rasterization graphics processes or compute work.
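To make the traversal a bit more concrete, here is a minimal software sketch of the two tests described above: a bounding box test to decide whether to descend, and a ray-triangle test at the leaves. This is not NVIDIA’s hardware algorithm. The `BVHNode` layout, the helper names, and the tiny two-triangle scene are assumptions made for illustration, and the box and triangle tests use the standard slab and Möller-Trumbore methods as stand-ins.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]
stats = {"box": 0, "tri": 0}  # counts how many tests of each kind were run

def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

@dataclass
class BVHNode:  # hypothetical node layout, for illustration only
    lo: Vec3
    hi: Vec3
    children: List["BVHNode"] = field(default_factory=list)
    triangles: List[Triangle] = field(default_factory=list)

def hits_box(origin: Vec3, direction: Vec3, lo: Vec3, hi: Vec3) -> bool:
    """Bounding box test (slab method): does the ray pass through the box?"""
    stats["box"] += 1
    t_near, t_far = 0.0, float("inf")
    for axis in range(3):
        if abs(direction[axis]) < 1e-12:  # ray is parallel to this pair of planes
            if not lo[axis] <= origin[axis] <= hi[axis]:
                return False
            continue
        t0 = (lo[axis] - origin[axis]) / direction[axis]
        t1 = (hi[axis] - origin[axis]) / direction[axis]
        t_near = max(t_near, min(t0, t1))
        t_far = min(t_far, max(t0, t1))
        if t_near > t_far:
            return False
    return True

def hit_triangle(origin: Vec3, direction: Vec3, tri: Triangle) -> Optional[float]:
    """Ray-triangle test (Moller-Trumbore): distance to the hit, or None."""
    stats["tri"] += 1
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < 1e-12:  # ray is parallel to the triangle
        return None
    inv = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv
    q = cross(s, e1)
    v = dot(direction, q) * inv
    if u < 0.0 or v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > 1e-9 else None

def trace(node: BVHNode, origin: Vec3, direction: Vec3) -> Optional[float]:
    """Walk down the tree, skipping every subtree whose box the ray misses."""
    if not hits_box(origin, direction, node.lo, node.hi):
        return None
    candidates = [hit_triangle(origin, direction, tri) for tri in node.triangles]
    candidates += [trace(child, origin, direction) for child in node.children]
    hits = [t for t in candidates if t is not None]
    return min(hits) if hits else None  # the closest hit would go on to shading

tri_near = ((-1.0, -1.0, 5.0), (1.0, -1.0, 5.0), (0.0, 1.0, 5.0))
tri_side = ((9.0, -1.0, 5.0), (11.0, -1.0, 5.0), (10.0, 1.0, 5.0))
leaf_near = BVHNode((-1.0, -1.0, 5.0), (1.0, 1.0, 5.0), triangles=[tri_near])
leaf_side = BVHNode((9.0, -1.0, 5.0), (11.0, 1.0, 5.0), triangles=[tri_side])
root = BVHNode((-1.0, -1.0, 5.0), (11.0, 1.0, 5.0), children=[leaf_near, leaf_side])

hit_distance = trace(root, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))  # ray along +z
tests_for_hit = dict(stats)
miss = trace(root, (0.0, 0.0, 0.0), (0.0, 0.0, -1.0))  # ray pointing away
```

In this toy scene the ray never gets a triangle test against `tri_side`, because the box around it is rejected first. That pruning is the whole point of a BVH, and it is the work the RT cores take off the SMs.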

But what does all of this fancy, highly complex ray-traced graphics processing mean for games? In short, real-time ray tracing allows for dramatically more realistic lighting and reflection rendering, along with more accurate handling of how light bounces off and interacts with objects in a scene. Combined with traditional rasterization rendering, ray tracing can provide a much higher level of in-game realism and visual fidelity than previously possible. The screenshot above from Metro Exodus, as well as the live Battlefield V demo above, showcase what real-time ray tracing can do in action and the effects are impressive.

Tensor Cores, Machine Learning, NGX, And DLSS

DLSS, or Deep Learning Super Sampling, is one of the more interesting and talked-about features of NVIDIA’s new Turing architecture and GeForce RTX cards. However, to understand how DLSS works, we need to go back to the hardware and software that power it, to see how NVIDIA delivers impressive visuals with minimal performance impact. First, let’s talk about the Tensor cores in Turing as they relate to DLSS.

NVIDIA’s new GeForce RTX 2080 Ti, for example, comes equipped with 544 Tensor cores. These are specialized execution units designed for the tensor and matrix math operations that Deep Learning requires. NVIDIA introduced Tensor cores in its Volta GPU architecture, but the Tensor cores in Turing have been optimized for inferencing. Inferencing is one of the most fundamental capabilities of machine learning: a network that has already learned shapes, patterns, coloring, etc. from images at the pixel level is applied to new images to recognize them very quickly. Is it a dog, a cat, a mouse? You get the idea. Specifically, Turing’s Tensor cores can also operate in INT8 and INT4 precision modes for inferencing workloads that don’t need the FP16 level of precision of Volta’s Tensor units; in other words, inferencing for gaming graphics and specifically DLSS (and other new features).
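As a rough illustration of why lower precision can be good enough for inferencing, the sketch below quantizes a handful of made-up weights and inputs to INT8 and INT4, does the multiply-accumulate in integers, and scales the result back. The symmetric quantization scheme, the function names, and the sample values are all assumptions for illustration; the source does not describe how Turing or DLSS actually quantize their data.

```python
def quantize(values, bits):
    """Symmetric linear quantization; returns (integers, scale factor)."""
    qmax = 2 ** (bits - 1) - 1            # 127 for INT8, 7 for INT4
    peak = max(abs(v) for v in values) or 1.0
    scale = peak / qmax
    ints = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return ints, scale

def int_dot(a, b, bits):
    """Dot product computed on quantized integers, then rescaled to a float."""
    qa, scale_a = quantize(a, bits)
    qb, scale_b = quantize(b, bits)
    accumulator = sum(x * y for x, y in zip(qa, qb))  # integer multiply-accumulate
    return accumulator * scale_a * scale_b

# Made-up numbers standing in for one neuron's weights and its inputs.
weights = [0.12, -0.5, 0.33, 0.9]
inputs = [0.8, 0.1, -0.4, 0.25]

exact = sum(w * x for w, x in zip(weights, inputs))
approx_int8 = int_dot(weights, inputs, bits=8)
approx_int4 = int_dot(weights, inputs, bits=4)

error_int8 = abs(approx_int8 - exact)
error_int4 = abs(approx_int4 - exact)
```

With these values the INT8 result lands very close to the floating-point answer while INT4 drifts further, which is the usual trade: fewer bits per value means more throughput, at the cost of precision the workload may or may not need.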

NVIDIA NGX is the new deep learning-based neural graphics framework of NVIDIA’s RTX platform. NGX uses deep neural networks (DNNs) to perform AI-driven functions that accelerate graphics rendering operations and new features like DLSS (Deep Learning Super Sampling), AI InPainting or content-aware image manipulation, AI Slow-Mo for very high quality slow-motion video, and AI Super Rez smart image resolution resizing. All of these AI-powered features will be accessible via the NGX API which will couple hardware and software together at the driver level.

NGX services are managed by NVIDIA’s GeForce Experience software (GFE, or QXP in the case of Quadro cards) and run on the GPU; they will not function on NVIDIA architectures prior to Turing. GFE will communicate with NGX to determine what games and apps are installed on the system and will download relevant DNN models for use. NGX DNN models can also interface with CUDA, DirectX and Vulkan to optimize performance while NGX services run on Turing’s Tensor cores. Note that these models are also fairly lightweight; we’re told they are only megabytes in size.

So, that’s the hardware and software behind DLSS, but how does NVIDIA train these Deep Neural Network models on games, for better, faster anti-aliasing? That’s where the rubber will meet the road for DLSS adoption. NVIDIA will work with game developers for “free,” having them send in their games to feed the beast, so to speak; the beast being an NVIDIA DGX-1 AI research supercomputer. This powerful AI server then learns the game visuals frame by frame, comparing them to a “ground truth” golden sample of image quality rendered with 64x super-sampling (64xSS). 64xSS shades at 64 different offsets within each pixel and provides extreme detail, along with ideally anti-aliased rendering. The neural network is tasked with producing an output image, measuring the difference between it and the 64xSS ground truth target, and adjusting its weights accordingly to improve the image on the next iteration; the process continues until the model is built.
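The loop described above (produce an image, measure the difference from the 64xSS target, adjust the weights, repeat) can be sketched in a few lines. To be clear, this is a toy and not NVIDIA’s training pipeline: the “network” is a stand-in three-tap filter, the “game” is a one-dimensional scanline with a single hard edge, and every name and parameter here is an assumption for illustration.

```python
import random

def scene(x, edge):
    """Hypothetical scene: white to the left of a hard edge, black to the right."""
    return 1.0 if x < edge else 0.0

def render(width, edge, samples):
    """Shade each pixel at `samples` evenly spaced offsets and average them."""
    return [sum(scene(px + (s + 0.5) / samples, edge) for s in range(samples)) / samples
            for px in range(width)]

def taps_at(row, i):
    """The three inputs the filter sees at pixel i (borders are clamped)."""
    return (row[max(i - 1, 0)], row[i], row[min(i + 1, len(row) - 1)])

def loss_and_grad(weights, aliased, truth):
    """Mean squared error against the ground truth, plus its gradient."""
    n = len(aliased)
    loss, grad = 0.0, [0.0, 0.0, 0.0]
    for i in range(n):
        taps = taps_at(aliased, i)
        error = sum(w * t for w, t in zip(weights, taps)) - truth[i]
        loss += error * error / n
        for k in range(3):
            grad[k] += 2.0 * error * taps[k] / n
    return loss, grad

def mean_loss(weights, frames):
    return sum(loss_and_grad(weights, a, t)[0] for a, t in frames) / len(frames)

def train(frames, epochs=200, learning_rate=0.1):
    weights = [0.0, 1.0, 0.0]  # start as a pass-through of the aliased image
    for _ in range(epochs):
        for aliased, truth in frames:
            _, grad = loss_and_grad(weights, aliased, truth)
            # Adjust the weights a little in the direction that reduces the error.
            weights = [w - learning_rate * g for w, g in zip(weights, grad)]
    return weights

rng = random.Random(0)  # fixed seed so the run is repeatable
WIDTH = 16
frames = []
for _ in range(32):
    edge = rng.uniform(2.0, WIDTH - 2.0)
    # Pair a 1-sample (aliased) render with its 64-sample "ground truth".
    frames.append((render(WIDTH, edge, 1), render(WIDTH, edge, 64)))

loss_before = mean_loss([0.0, 1.0, 0.0], frames)
trained_weights = train(frames)
loss_after = mean_loss(trained_weights, frames)
```

Comparing `loss_before` and `loss_after` shows the trained filter sitting closer to the 64-sample target than the raw aliased input does. A real DNN has vastly more weights and works on full frames, but the feedback loop has the same shape.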

The result is both high anti-aliasing image quality and high frame rates. The image above is indicative of what DLSS can achieve in its 2X mode, which is almost indistinguishable from the 64xSS ground truth sample. However, in the video demo depicted in the slide above, you’re seeing DLSS standard mode compared to TAA (Temporal Anti-Aliasing) running at 4K in Epic’s Infiltrator demo using Unreal Engine 4. The machine running TAA at 4K on a GeForce GTX 1080 Ti is slogging it out at just-playable frame rates north of 30 FPS. However, the GeForce RTX 2080 Ti with DLSS on (and TAA off) at 4K is able to crest 80 FPS.

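Live and in person, we couldn’t tell the difference in image quality, except that the RTX 2080 Ti-powered machine had a much faster frame rate. See for yourself...

Regardless, the key here is going to be how well NVIDIA is able to execute on getting DLSS modes enabled in new and existing game titles. At first blush, it would seem the feature would be a no-brainer for most devs looking to improve performance and image quality for their games on NVIDIA hardware. In addition, as you can imagine, the more games NVIDIA’s DNNs are fed, the better they’ll get at DLSS, potentially also enabling other image quality features not yet seen in games.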

Turing Mesh Shaders And Variable Rate Shading

NVIDIA’s Turing architecture also introduces new shading techniques that are enabled by a new type of shader technology incorporated into the GPU pipeline. Game developers will now be able to take advantage of Mesh Shaders and Task Shaders in Turing, which are much more flexible in terms of how they operate. Both shader types use what NVIDIA calls a “cooperative thread model,” instead of a single-threaded programming model.

As scene rendering complexity continues to increase, with thousands of individual objects on the screen to shade and render, hundreds of thousands of draw calls from the CPU to the GPU can become a bottleneck. However, Turing’s new Task Shader stage can take a list of many objects sent to the GPU at once and process them in parallel, then fire off Mesh Shaders to produce the triangles to be rasterized.
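Here is a small CPU-side simulation of that division of labor, just to show the shape of the idea. Real task and mesh shaders are GPU programs written in a shading language; the `SceneObject` fields, the culling rule, the level-of-detail rule, and the 64-triangle meshlet size below are all assumptions for illustration.

```python
from dataclasses import dataclass

MESHLET_TRIANGLES = 64  # assumed number of triangles handled per mesh workgroup

@dataclass
class SceneObject:  # hypothetical per-object record sent to the GPU
    name: str
    distance: float
    triangles: int
    visible: bool

def task_stage(obj, max_distance=100.0):
    """Per-object decision: cull it, pick a detail level, size the mesh work."""
    if not obj.visible or obj.distance > max_distance:
        return 0  # culled: no mesh workgroups are launched at all
    lod_divisor = 1 if obj.distance < 25.0 else 4  # coarser model when far away
    triangles = max(1, obj.triangles // lod_divisor)
    return -(-triangles // MESHLET_TRIANGLES)  # ceiling division

def draw_scene(objects):
    """One submission covers the whole list instead of one draw call per object."""
    cpu_submissions = 1
    mesh_workgroups = sum(task_stage(obj) for obj in objects)
    return cpu_submissions, mesh_workgroups

objects = [
    SceneObject("rock", distance=10.0, triangles=640, visible=True),
    SceneObject("tree", distance=50.0, triangles=1024, visible=True),
    SceneObject("crate behind wall", distance=5.0, triangles=5000, visible=False),
    SceneObject("distant tower", distance=500.0, triangles=100, visible=True),
]

cpu_submissions, mesh_workgroups = draw_scene(objects)
```

The point of the sketch is where the decisions happen: culling and level-of-detail selection move into the per-object stage, so the CPU hands over a list once rather than issuing a draw call for each object.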

You can think of the Task Shader as somewhat of a dispatch pre-processor that alleviates the CPU bottleneck for object draw calls. NVIDIA claims it can enable an increase in the number of objects that can be displayed in real time at gaming frame rates by “an order of magnitude.” In addition, Turing now enables new Variable Rate Shading techniques that can not only allow higher levels of detail, but also boost performance.

VRS, or Variable Rate Shading, allows developers much finer-grained control of shading rates in any given scene, when powered by an NVIDIA Turing GPU. Each 16x16 pixel region of a scene can now have a different level of shader detail, and a different shading rate as a result. The diagram below shows the level of shader detail in 16x16 pixel regions, with the traditional 1x1 blue grid shading each and every individual pixel. However, with VRS there are six additional lower-resolution shading rates that can be applied in the 16x16 pixel region. Because VRS conserves shader processing resources in some areas of the scene, it also allows for a possible increase in shading rates in other areas. The image below illustrates different levels of detail: the car is shaded at full rate (in blue), the area near the car once per every four pixels (in green), and the road on the left and right hand sides of the scene once every eight pixels at lower detail (in yellow).
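Some quick arithmetic shows how much work this can save. The sketch below counts pixel shader invocations for a small frame made of 16x16 tiles. The seven rate names follow the one-plus-six rates mentioned above, but the exact rate list and the tiny 64x32 layout (car in the middle, road at the sides) are assumptions for illustration.

```python
TILE = 16  # each tile is a 16x16 pixel region with its own shading rate

# Width x height of the pixel block covered by a single shader invocation.
RATES = {
    "1x1": (1, 1), "1x2": (1, 2), "2x1": (2, 1), "2x2": (2, 2),
    "2x4": (2, 4), "4x2": (4, 2), "4x4": (4, 4),
}

def invocations_for_tile(rate):
    """Shader invocations needed to cover one 16x16 tile at the given rate."""
    block_w, block_h = RATES[rate]
    return (TILE // block_w) * (TILE // block_h)

def frame_invocations(rate_image):
    return sum(invocations_for_tile(rate) for row in rate_image for rate in row)

# A 64x32 pixel frame: car tiles at full rate, nearby tiles at one shade per
# four pixels, road tiles at one shade per eight pixels.
rate_image = [
    ["2x4", "2x2", "2x2", "2x4"],
    ["2x4", "1x1", "1x1", "2x4"],
]

full_rate = len(rate_image) * len(rate_image[0]) * invocations_for_tile("1x1")
with_vrs = frame_invocations(rate_image)
fraction_of_full = with_vrs / full_rate
```

In this made-up layout the frame needs 768 shader invocations instead of 2,048, or 37.5 percent of the full-rate cost, while the tiles covering the car are untouched.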

The car example above is generally what’s referred to as Content Adaptive Shading. NVIDIA claims developers can implement content-based shading rate reductions without modifying their existing rendering pipeline and with only small changes to shader code. However, there are also other adaptive shading techniques, like Motion Adaptive Shading. Just as it sounds, Motion Adaptive Shading allows for shading rate reduction based on what your eye can actually see for objects in motion. Imagine yourself on a train, looking out the window. Your eyes can fix on an object or a certain area and pick up specific details of it. However, other areas outside that window will be blurred in your periphery, due to the motion of the train. It’s obviously wasteful to shade motion-blurred areas at full resolution, so this technique uses a reduced sampling rate, one that is still visually acceptable to the eye and that yields a higher frame rate as a result. Motion Adaptive Shading can also be combined with Content Adaptive Shading for even better shader efficiency.
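One way to picture how the two techniques might be combined is a per-tile rule that looks at both how fast the tile is moving and how much detail it contains, then takes the coarser of the two suggested rates. The thresholds, the contrast metric, and the “take the coarser rate” rule below are all hypothetical; the source does not say how NVIDIA or any game engine actually makes this choice.

```python
def motion_rate(speed_px_per_frame):
    """Faster on-screen motion tolerates a coarser shading rate (assumed thresholds)."""
    if speed_px_per_frame < 2.0:
        return (1, 1)
    if speed_px_per_frame < 8.0:
        return (2, 2)
    return (4, 4)

def content_rate(contrast):
    """Flat, low-contrast content tolerates a coarser rate (assumed thresholds)."""
    if contrast > 0.5:
        return (1, 1)
    if contrast > 0.1:
        return (2, 2)
    return (4, 4)

def combined_rate(speed_px_per_frame, contrast):
    """Use the coarser of the two suggestions along each axis."""
    motion = motion_rate(speed_px_per_frame)
    content = content_rate(contrast)
    return (max(motion[0], content[0]), max(motion[1], content[1]))

static_detailed = combined_rate(0.5, 0.9)   # e.g. the car you are looking at
fast_detailed = combined_rate(12.0, 0.9)    # detailed scenery rushing past
static_flat = combined_rate(0.5, 0.05)      # e.g. a patch of clear sky
slow_detailed = combined_rate(4.0, 0.9)     # moderate motion, high detail
```

Under these assumed rules only the static, detailed tile keeps full-rate shading; everything else gives up some shading work where the eye is least likely to notice.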

Other examples of VRS shading optimization techniques include Foveated Rendering, where the shading rate for an area of a scene varies with its viewing angle relative to the human eye (full rate where the eye is focused, lower rates toward the periphery), and Texture Space Shading, where game developers can reuse shader output in areas of a scene that are identical, rather than re-shading those areas wastefully.

Finally, Turing has better Multiview Rendering at more arbitrary points versus NVIDIA’s previous-generation architectures, with better granularity as well, for VR workloads. As VR devices and head-mounted displays gain wider fields of view and higher resolution, NVIDIA claims Turing has greater flexibility to take advantage of redundant geometry processing in VR application workloads. Turing’s new Variable Rate Shading techniques, as well as its more flexible Multiview Rendering capabilities, will take time for developers to adopt, but the net gain could be over a 20 percent speed-up in graphically rich scenes and game engines, with comparable or higher image quality as a result of these optimizations.